Taming the Measurement Overhead in ADAPT-VQE: Strategies for Scalable Quantum Chemistry on NISQ Devices

Abstract

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) is a leading algorithm for molecular simulation on near-term quantum computers, promising compact circuits and resilience to barren plateaus. However, its practical application is hindered by a significant measurement overhead, which arises from the need to evaluate numerous commutator operators for its adaptive ansatz construction. This article provides a comprehensive analysis of the ADAPT-VQE measurement overhead for an audience of researchers and drug development professionals. We explore the fundamental sources of this overhead, review state-of-the-art mitigation strategies including informationally complete measurements and shot-optimization techniques, and present validation data from recent studies on molecular systems. The article concludes by synthesizing the path toward practical quantum advantage in drug discovery through reduced-measurement ADAPT-VQE protocols.

What is ADAPT-VQE Measurement Overhead? Defining the Core Challenge for NISQ-Era Quantum Chemistry

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) has emerged as a leading algorithm for molecular simulations on Noisy Intermediate-Scale Quantum (NISQ) devices. By dynamically constructing circuit ansätze, it addresses critical limitations of static approaches such as the unitary coupled cluster singles and doubles (UCCSD) ansatz and hardware-efficient ansätze, which often yield deep circuits or suffer from trainability issues such as barren plateaus [1] [2]. This greedy, iterative algorithm builds compact, problem-tailored ansätze, significantly reducing circuit depth and CNOT counts compared to fixed-ansatz approaches [2] [3].

However, this advantage introduces a significant challenge: a substantial measurement overhead [4] [5] [6]. The very process of iteratively selecting operators and optimizing parameters requires a polynomially scaling number of observable evaluations, creating a bottleneck for practical implementations on current quantum hardware [4]. This whitepaper examines the core ADAPT-VQE algorithm, analyzes the sources of its measurement overhead, and synthesizes current research strategies aimed at mitigating this bottleneck, framing the discussion within the broader context of achieving quantum advantage for chemical simulations.

The ADAPT-VQE Algorithm: Core Mechanics and Workflow

ADAPT-VQE belongs to a class of adaptive variational algorithms that systematically grow an ansatz one operator at a time from a predefined pool. The algorithm's strength lies in its iterative two-step process, which tailors the ansatz to the specific molecule and Hamiltonian being simulated [3].

Algorithmic Foundation and Workflow

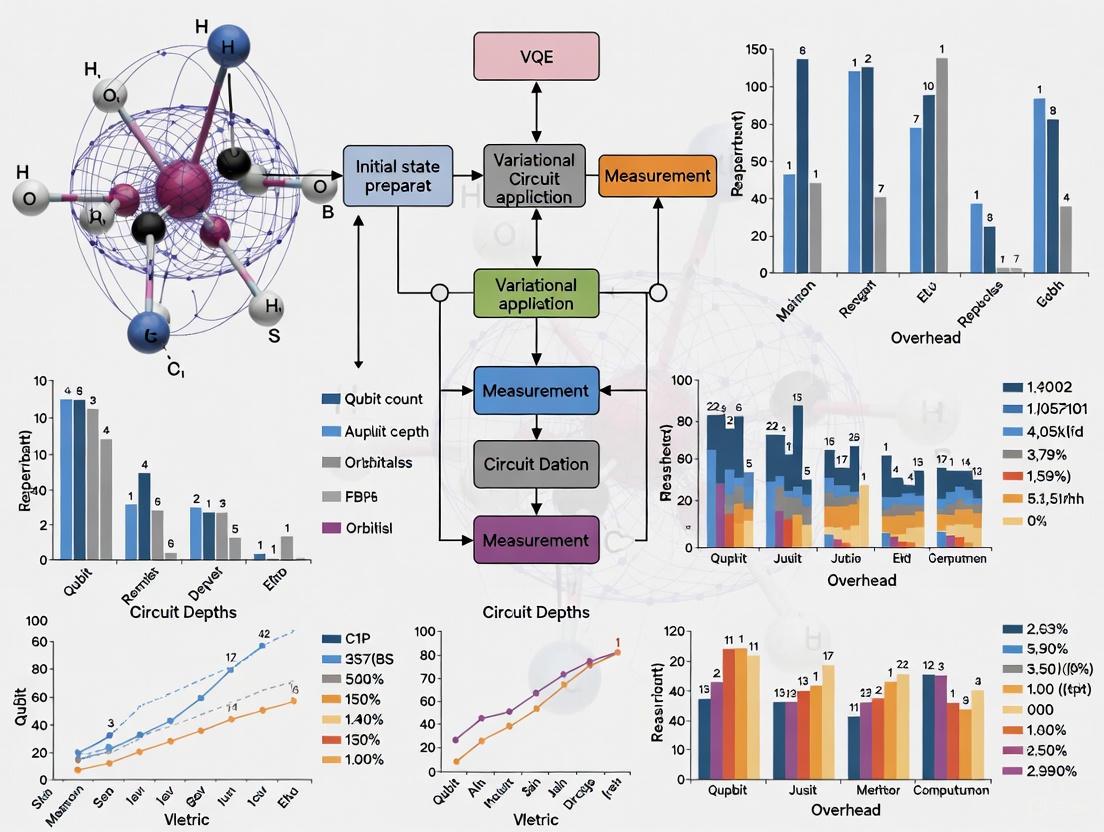

The algorithm begins with a simple reference state, typically the Hartree-Fock state. At each iteration $m$, the algorithm executes two critical steps, as outlined in Algorithm 1 and illustrated in Figure 1.

Step 1: Operator Selection. The algorithm computes the energy gradient with respect to the parameter of every parameterized unitary operator $\mathscr{U}$ in a predefined operator pool $\mathbb{U}$, evaluated at $\theta = 0$. The selection criterion identifies the operator $\mathscr{U}^*$ with the largest gradient magnitude [4]: $$ \mathscr{U}^*= \underset{\mathscr{U} \in \mathbb{U}}{\text{argmax}} \left| \frac{d}{d\theta} \Big \langle \Psi^{(m-1)} \left| \mathscr{U}(\theta)^\dagger \widehat{H} \mathscr{U}(\theta) \right| \Psi^{(m-1)} \Big \rangle \Big \vert _{\theta=0} \right|, $$ where $\widehat{H}$ is the qubit Hamiltonian. This operator is then appended to the current ansatz with its parameter initially set to zero [4] [1].

Step 2: Global Optimization. All parameters in the new, longer ansatz, including the newly added one and those recycled from the previous iteration, are optimized variationally to minimize the energy expectation value [4] [1]: $$ (\theta_1^{(m)}, \ldots, \theta_m^{(m)}) := \underset{\theta_1, \ldots, \theta_m}{\operatorname{argmin}} \Big\langle \Psi^{(m)}(\vec{\theta}) \left| \; \widehat{H} \; \right| \Psi^{(m)}(\vec{\theta}) \Big\rangle. $$

These steps repeat until a convergence criterion, such as the gradient norm falling below a threshold, is met [1].

Figure 1. The ADAPT-VQE Workflow. This flowchart illustrates the iterative process of growing an ansatz in ADAPT-VQE, from initial state preparation to final convergence.
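To make the two-step loop concrete, here is a minimal pure-Python statevector sketch on a hypothetical two-qubit toy Hamiltonian $H = Z_0 + Z_1 + 0.5\,X_0X_1$ (not a molecular Hamiltonian), starting from $\lvert 11\rangle$ as a stand-in reference state. Assumptions: each pool operator has the form $\hat{A} = iP$ for a Pauli string $P$, so $e^{\theta \hat{A}} = \cos\theta\, I + i\sin\theta\, P$; gradients are computed exactly from the statevector rather than estimated from shots; and a sequential sinusoidal (Rotosolve-style) coordinate minimizer stands in for BFGS. All function names are illustrative.

```python
import math

def apply_pauli(pauli, state):
    """Apply a Pauli string such as 'XY' (character q acts on qubit q) to a state vector."""
    n = len(pauli)
    out = [0j] * len(state)
    for idx, amp in enumerate(state):
        new_idx, phase = idx, 1 + 0j
        for q, p in enumerate(pauli):
            mask = 1 << (n - 1 - q)              # qubit 0 is the most significant bit
            bit = 1 if idx & mask else 0
            if p in "XY":
                new_idx ^= mask                  # X and Y flip the bit
            if p == "Y":
                phase *= 1j if bit == 0 else -1j # Y|0> = i|1>, Y|1> = -i|0>
            elif p == "Z" and bit:
                phase = -phase                   # Z|1> = -|1>
        out[new_idx] += phase * amp
    return out

def inner(a, b):
    return sum(x.conjugate() * y for x, y in zip(a, b))

def apply_ham(ham, state):
    """ham is a list of (coefficient, pauli_string) pairs."""
    out = [0j] * len(state)
    for coeff, pauli in ham:
        for i, v in enumerate(apply_pauli(pauli, state)):
            out[i] += coeff * v
    return out

def energy(ham, state):
    return inner(state, apply_ham(ham, state)).real

def prepare(ansatz, thetas, ref):
    """Apply exp(theta * iP) = cos(theta) I + i sin(theta) P for each (P, theta), in order."""
    state = list(ref)
    for pauli, theta in zip(ansatz, thetas):
        p_state = apply_pauli(pauli, state)
        state = [math.cos(theta) * s + 1j * math.sin(theta) * ps
                 for s, ps in zip(state, p_state)]
    return state

def pool_gradient(ham, pauli, state):
    """dE/dtheta at theta = 0 for A = iP applied last: <[H, A]> = 2 Re <H psi | A psi>."""
    a_state = [1j * v for v in apply_pauli(pauli, state)]
    return 2 * inner(apply_ham(ham, state), a_state).real

def optimize(ham, ansatz, thetas, ref, sweeps=10):
    """Re-optimize every parameter; for A = iP, E(theta_k) = C + R cos(2 theta_k - delta)."""
    thetas = list(thetas)
    for _ in range(sweeps):
        for k in range(len(thetas)):
            def e_at(t):
                return energy(ham, prepare(ansatz, thetas[:k] + [t] + thetas[k + 1:], ref))
            e0, ep, em = e_at(0.0), e_at(math.pi / 4), e_at(-math.pi / 4)
            c = (ep + em) / 2
            delta = math.atan2((ep - em) / 2, e0 - c)
            thetas[k] = (delta + math.pi) / 2    # exact minimizer of the sinusoid
    return thetas

def adapt_vqe(ham, pool, ref, tol=1e-6, max_iter=10):
    ansatz, thetas = [], []
    for _ in range(max_iter):
        state = prepare(ansatz, thetas, ref)
        grads = {p: pool_gradient(ham, p, state) for p in pool}  # Step 1: screen whole pool
        best = max(grads, key=lambda p: abs(grads[p]))
        if abs(grads[best]) < tol:                                # converged: largest gradient is tiny
            break
        ansatz.append(best)
        thetas = optimize(ham, ansatz, thetas + [0.0], ref)       # Step 2: global re-optimization
    return ansatz, thetas, energy(ham, prepare(ansatz, thetas, ref))

ham = [(1.0, "ZI"), (1.0, "IZ"), (0.5, "XX")]
ref = [0j, 0j, 0j, 1 + 0j]                                        # |11>
ansatz, thetas, final_energy = adapt_vqe(ham, ["XY", "YX", "IY", "YI"], ref)
print(ansatz, final_energy)  # exact ground energy of this toy H is -sqrt(4.25)
```

On this toy problem a single operator suffices: once the ground state is reached, every pool gradient vanishes, which is exactly the convergence signal the loop checks.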

The Scientist's Toolkit: Key Components of an ADAPT-VQE Simulation

Table 1: Essential Components for ADAPT-VQE Experiments

| Component | Function & Description | Common Types & Examples |
|---|---|---|
| Operator Pool ($\mathbb{U}$) | A predefined set of parameterized unitary operators from which the ansatz is built. | Fermionic Pool [1] [3]: UCCSD-type operators (e.g., $\hat{a}_i^a - \hat{a}_a^i$); Qubit Pool [2] [7]: Individual Pauli strings; CEO Pool [2]: Novel coupled exchange operators for reduced circuit depth. |
| Reference State | The initial state from which the adaptive ansatz is constructed. | Typically the Hartree-Fock state [1] [3]. |
| Measurement Strategy | The method for estimating expectation values and gradients on quantum hardware. | Naive (Direct) [6], Grouping (Qubit-Wise Commutativity) [6] [7], Informationally Complete POVMs [8], Variance-based Shot Allocation [5] [6]. |
| Classical Optimizer | The classical algorithm used to minimize the energy with respect to the variational parameters. | Gradient-based methods (e.g., BFGS [1]). |
| Classical Simulator | High-performance computing (HPC) resource for pre-optimization or noisy emulation. | Sparse Wavefunction Circuit Solver (SWCS) [9], HPC emulators for statistical noise simulation [4]. |

The Measurement Bottleneck: A Quantitative Analysis

The adaptive nature of ADAPT-VQE, while beneficial for ansatz compactness, directly leads to its primary pitfall: a dramatically increased number of quantum measurements, often referred to as "shot overhead."

The overhead stems from two primary sources:

- Operator Selection (Gradient Evaluation): At every iteration, the gradient for every operator in the pool must be estimated. This involves measuring the expectation values of the commutators $[H, A_i]$ for all pool operators $A_i$ [6] [8]. For large pools (e.g., the UCCSD pool scales as $\mathcal{O}(N^2 n^2)$), this requires tens of thousands of noisy measurements [4].

- Parameter Optimization: Each iteration introduces a new parameter, and a global optimization over all parameters is performed. This requires repeated evaluations of the energy (the Hamiltonian expectation value), which itself is a sum of many Pauli terms, each requiring numerous measurements [4] [6].

The impact of this overhead is stark, as shown in Table 2. Noisy simulations demonstrate that measurement noise can cause the algorithm to stagnate well above chemical accuracy, unlike in ideal noiseless conditions [4].

Resource Evolution: From Original to State-of-the-Art ADAPT-VQE

Research since the inception of ADAPT-VQE has dramatically reduced its resource requirements. Table 2 quantifies this evolution, highlighting the significant reduction in quantum resources achieved through improved operator pools and subroutines.

Table 2: Quantitative Evolution of ADAPT-VQE Resource Requirements [2]

| Molecule (Qubits) | Algorithm Version | CNOT Count | CNOT Depth | Measurement Cost (Relative) |
|---|---|---|---|---|
| LiH (12 qubits) | Original (GSD) ADAPT-VQE | 100% (Baseline) | 100% (Baseline) | 100% (Baseline) |
| LiH (12 qubits) | CEO-ADAPT-VQE* | 27% | 8% | 2% |
| H6 (12 qubits) | Original (GSD) ADAPT-VQE | 100% (Baseline) | 100% (Baseline) | 100% (Baseline) |
| H6 (12 qubits) | CEO-ADAPT-VQE* | 12% | 4% | 0.4% |
| BeH2 (14 qubits) | Original (GSD) ADAPT-VQE | 100% (Baseline) | 100% (Baseline) | 100% (Baseline) |
| BeH2 (14 qubits) | CEO-ADAPT-VQE* | 13% | 4% | 0.6% |

Mitigation Strategies: A Multi-Faceted Approach to Shot Reduction

The research community has developed several innovative strategies to mitigate the measurement overhead, which can be broadly categorized into three approaches.

Measurement Reuse and Efficient Sequencing

This strategy focuses on reconfiguring the measurement process itself to extract more information from each quantum state preparation.

- Reusing Pauli Measurements: Pauli measurement outcomes obtained during the VQE parameter optimization can be stored and reused in the subsequent operator selection step. This leverages the overlap between the Pauli strings in the Hamiltonian and those in the commutators $[H, A_i]$ used for gradient estimation [5] [6].

- Informationally Complete Generalized Measurements: Methods like Adaptive Informationally complete generalised Measurements (AIM) allow for the reconstruction of the quantum state from a single type of measurement. The resulting data can be reused to estimate all the gradients for the operator pool via classical post-processing, potentially eliminating the dedicated quantum measurement overhead for operator selection [8].

- Batched Operator Addition: Instead of adding a single operator per iteration, multiple operators with the largest gradients are added simultaneously. This reduces the total number of iterative steps and, consequently, the number of costly gradient evaluations over the entire pool [7].
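A minimal sketch of the batched selection step, assuming a plain top-$k$ rule combined with a gradient threshold (the batching criteria in [7] may impose additional conditions). The helper `select_batch` and the gradient values are hypothetical.

```python
import heapq

def select_batch(gradients, batch_size, threshold):
    """Return up to batch_size pool operators with the largest |gradient| >= threshold."""
    candidates = [(abs(g), op) for op, g in gradients.items() if abs(g) >= threshold]
    return [op for _, op in heapq.nlargest(batch_size, candidates)]

# Hypothetical gradients from one pool-wide screening pass
grads = {"A1": 0.30, "A2": -0.90, "A3": 0.05, "A4": 0.60}
batch = select_batch(grads, batch_size=2, threshold=0.1)
print(batch)  # ['A2', 'A4']
```

With batch size $k$, an ansatz of $L$ operators needs roughly $\lceil L/k \rceil$ pool-wide gradient sweeps instead of $L$; the trade-off is that operators later in the batch were ranked against a state that no longer includes the operators added alongside them.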

Advanced Shot Allocation and Commutativity Grouping

This approach optimizes how a fixed measurement budget (number of "shots") is distributed among the various terms that need to be estimated.

- Variance-Based Shot Allocation: This technique allocates more shots to measure Pauli terms with higher estimated variance, reducing the overall statistical error for a given total shot budget. This can be applied to both Hamiltonian and gradient measurements [5] [6]. One study demonstrated shot reductions of 43.21% for H2 and 51.23% for LiH compared to a uniform shot distribution [6].

- Measurement Grouping: Pauli terms that commute can be measured simultaneously. Techniques like Qubit-Wise Commutativity (QWC) group the Hamiltonian terms and the Pauli terms of the gradient observables $[H, A_i]$ into mutually commuting sets, drastically reducing the number of distinct state preparations required [6] [7].
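The variance-based allocation above can be sketched in one common textbook form, under stated assumptions: terms are measured independently (no grouping), and the total variance $\sum_i c_i^2 \sigma_i^2 / N_i$ is minimized for a fixed budget $\sum_i N_i$, which gives $N_i \propto |c_i|\sigma_i$ with $\sigma_i^2 = 1 - \langle P_i\rangle^2$ for a Pauli observable. This is not a reproduction of the VMSA or VPSR schemes benchmarked in [6]; function names and numbers are illustrative.

```python
import math

def allocate_shots(coeffs, expectations, total_shots):
    """Split total_shots across Pauli terms in proportion to |c_i| * sigma_i.

    sigma_i = sqrt(1 - <P_i>^2) is the single-shot standard deviation of a Pauli
    observable; in practice <P_i> would come from earlier (noisy) estimates.
    A production allocator would also enforce a minimum number of shots per term.
    """
    weights = [abs(c) * math.sqrt(max(0.0, 1.0 - e * e)) for c, e in zip(coeffs, expectations)]
    norm = sum(weights)
    ideal = [total_shots * w / norm for w in weights]
    shots = [math.floor(x) for x in ideal]
    # Hand the rounding remainder to the terms with the largest fractional parts
    order = sorted(range(len(ideal)), key=lambda i: ideal[i] - shots[i], reverse=True)
    for i in order[: total_shots - sum(shots)]:
        shots[i] += 1
    return shots

def estimator_variance(coeffs, expectations, shots):
    """Variance of sum_i c_i * mean_i when each term is measured independently."""
    return sum(c * c * (1.0 - e * e) / n
               for c, e, n in zip(coeffs, expectations, shots) if c * c * (1.0 - e * e) > 0)

coeffs, expectations, budget = [1.0, 0.5, 0.25], [0.0, 0.0, 0.0], 700
weighted = allocate_shots(coeffs, expectations, budget)   # [400, 200, 100]
uniform = [budget / len(coeffs)] * len(coeffs)
print(estimator_variance(coeffs, expectations, weighted),  # about 0.004375
      estimator_variance(coeffs, expectations, uniform))   # about 0.005625
```

For the same 700-shot budget, weighting by $|c_i|\sigma_i$ lowers the variance of the energy estimate by about 22% relative to a uniform split in this toy case.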

Classical Pre- and Post-Processing

These strategies leverage classical high-performance computing to reduce the workload on the quantum processor.

- Classical Pre-optimization: Algorithms like the Sparse Wavefunction Circuit Solver (SWCS) can perform approximate ADAPT-VQE optimizations on classical computers to identify a compact, problem-tailored ansatz. This pre-optimized ansatz can then be loaded onto the quantum hardware for final refinement, minimizing the expensive quantum measurement cycles [9].

- Improved Operator Pools: Designing more efficient operator pools, such as the Coupled Exchange Operator (CEO) pool, leads to faster convergence. This means fewer iterations are needed to reach chemical accuracy, directly reducing the total measurement count [2].

The relationships between these strategies and the components of the ADAPT-VQE workflow they optimize are summarized in Figure 2.

Figure 2. Strategies for Mitigating ADAPT-VQE's Measurement Overhead. This diagram categorizes the main mitigation strategies according to whether they optimize the use of quantum measurements or improve the efficiency of the ansatz construction process itself.

Experimental Protocols and Benchmarks

To evaluate the effectiveness of mitigation strategies, researchers use well-defined numerical experiments on classical simulators and emerging hardware demonstrations.

Protocol for Benchmarking Shot-Efficiency

A typical protocol for assessing shot reduction methods involves the following steps [6]:

- System Selection: Choose a set of test molecules (e.g., H₂, LiH, BeH₂) of increasing qubit size (4 to 16 qubits).

- Baseline Establishment: Run the standard ADAPT-VQE algorithm for each molecule, recording the total number of shots used to achieve chemical accuracy (1 kcal/mol, about 1.6 mHa). This establishes a baseline.

- Strategy Implementation: Run the ADAPT-VQE algorithm again for the same molecules, incorporating the shot-efficient strategy (e.g., measurement reuse and variance-based allocation).

- Performance Metric: Calculate the percentage reduction in the total number of shots required to reach chemical accuracy compared to the baseline. Fidelity of the final energy and wavefunction must be maintained.
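The two quantities used in this protocol can be written out explicitly. The conversion uses $1\,E_h \approx 627.5095$ kcal/mol, and the shot reduction is defined relative to the baseline run:

$$ 1\ \text{kcal/mol} = \frac{1}{627.5095}\,E_h \approx 1.594 \times 10^{-3}\,E_h \approx 1.6\ \text{m}E_h $$

$$ \text{shot reduction} = \left(1 - \frac{N_{\text{shots}}^{\text{strategy}}}{N_{\text{shots}}^{\text{baseline}}}\right) \times 100\% $$

For example, a strategy that uses 32.29% of the baseline shot count corresponds to a 67.71% reduction.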

Key Numerical Results

Recent studies employing these protocols have yielded promising results:

- Reused Pauli Measurements + Grouping: This combined approach reduced the average shot usage to 32.29% of the naive full measurement scheme across molecules from H₂ to BeH₂ [6].

- Variance-Based Shot Allocation: Applied to ADAPT-VQE for H₂ and LiH, this method achieved shot reductions of 43.21% and 51.23%, respectively, compared to uniform shot distribution [6].

- CEO-ADAPT-VQE*: This state-of-the-art variant, which combines an improved operator pool with other enhancements, reduced measurement costs by over 99% for the H₆ molecule compared to the original fermionic ADAPT-VQE formulation [2].

- AIM-ADAPT-VQE: Numerical simulations for H₂, H₄, and 1,3,5,7-octatetraene Hamiltonians indicated that the measurement data for energy evaluation could be reused for gradients with no additional measurement overhead, while still producing circuits with CNOT counts close to the ideal case [8].

ADAPT-VQE represents a profound shift in algorithm design for the NISQ era, directly addressing the challenges of circuit depth and trainability that plague fixed-structure ansätze. Its promise of compact, problem-tailored circuits is, however, tempered by the significant pitfall of measurement overhead. Research into mitigating this overhead is a vibrant and critical field, as it bridges the gap between the algorithm's theoretical advantages and its practical implementation on hardware.

The path forward lies not in a single solution but in the integration of multiple strategies. Combining classically-inspired, compact operator pools like the CEO pool with advanced quantum measurement techniques such as variance-optimized allocation and measurement reuse presents a holistic approach. Furthermore, leveraging classical HPC resources via pre-optimization to minimize the quantum processor's workload is a promising and practical direction. Through these combined efforts, ADAPT-VQE continues to be a leading candidate for demonstrating a quantum advantage in simulating molecular systems, turning its initial pitfall into a manageable and surmountable challenge.

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) represents a significant advancement in variational quantum algorithms for quantum chemistry by dynamically constructing compact, problem-tailored ansätze. Unlike fixed-structure approaches like the Unitary Coupled Cluster (UCCSD), which often produce deep circuits, ADAPT-VQE builds the ansatz iteratively, adding parameterized gates selected from a predefined operator pool based on their potential to lower the energy [6] [2]. This adaptive construction reduces circuit depth and helps avoid trainability issues like barren plateaus [6]. However, this advantage comes at a significant cost: a substantial measurement overhead introduced by the gradient evaluations required for operator selection at each iteration [6] [10] [11].

This measurement overhead constitutes a major bottleneck for practical implementations on near-term quantum hardware. The core of the problem lies in the need to estimate the gradients for all operators in the pool, which involves measuring the expectation values of commutators between the system Hamiltonian and each pool operator [12]. This process can require the estimation of a large number of observables, scaling poorly with system size if not optimized. This technical guide deconstructs the sources of this overhead, surveys the latest mitigation strategies, and provides a detailed analysis of experimental protocols, framing the discussion within the broader context of ADAPT-VQE measurement overhead research.

The Mathematical Core of Gradient Measurement

Fundamental Equations and Their Physical Interpretation

The ADAPT-VQE algorithm grows its ansatz iteratively. At the $n$-th iteration, the wavefunction is given by $\lvert \psi^{(n)} \rangle = \prod_i e^{\theta_i \hat{A}_i} \lvert \psi_0 \rangle$, where the $\hat{A}_i$ are anti-Hermitian operators from the pool. The critical step for selecting the next operator involves calculating the energy gradient with respect to the parameter of each candidate operator before it is added to the circuit, that is, at the point where that parameter is zero. For a parameter $\theta_N$ associated with operator $\hat{A}_N$, this gradient is given by:

$$ \left. \frac{\partial E^{(n)}}{\partial \theta_N} \right|_{\theta_N = 0} = \langle \psi^{(n)} \rvert [\hat{H}, \hat{A}_N] \lvert \psi^{(n)} \rangle $$

This equality is derived by considering the energy expectation value $E = \langle \psi \rvert \hat{H} \lvert \psi \rangle$ for a state prepared as $\lvert \psi \rangle = e^{\theta \hat{A}} \lvert \psi_0 \rangle$. Because $\hat{A}$ is anti-Hermitian, $(e^{\theta \hat{A}})^\dagger = e^{-\theta \hat{A}}$, so $E(\theta) = \langle \psi_0 \rvert e^{-\theta \hat{A}} \hat{H} e^{\theta \hat{A}} \lvert \psi_0 \rangle$; differentiating with respect to $\theta$ with the product rule and setting $\theta = 0$ gives $\langle \psi_0 \rvert (\hat{H}\hat{A} - \hat{A}\hat{H}) \lvert \psi_0 \rangle = \langle \psi_0 \rvert [\hat{H}, \hat{A}] \lvert \psi_0 \rangle$ [12]. This gradient reflects the sensitivity of the energy to a small rotation generated by the operator $\hat{A}_N$. The operator in the pool with the largest gradient magnitude is considered the most promising and is selected for the next iteration [6].
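The commutator identity can be checked numerically. The sketch below assumes a hypothetical single-qubit Hamiltonian $\hat{H} = aZ + bX$ and pool operator $\hat{A} = iY$, chosen only for illustration, for which $e^{\theta \hat{A}} = \cos\theta\, I + \sin\theta\, \hat{A}$ because $\hat{A}^2 = -I$. It compares $\langle \psi_0 \rvert [\hat{H}, \hat{A}] \lvert \psi_0 \rangle$ with a central finite difference of $E(\theta)$; analytically both equal $-2b$ for $\lvert \psi_0 \rangle = \lvert 0 \rangle$.

```python
import math

def matmul(m1, m2):
    return [[sum(m1[i][k] * m2[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def expval(m, v):
    """<v| M |v> for a 2-component state v (real part, since M is Hermitian here)."""
    mv = [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]
    return (v[0].conjugate() * mv[0] + v[1].conjugate() * mv[1]).real

a, b = 0.7, -0.3
H = [[a, b], [b, -a]]          # a Z + b X
A = [[0, 1], [-1, 0]]          # A = iY, anti-Hermitian (A^dagger = -A)
psi0 = [1 + 0j, 0j]            # |0>

def energy(theta):
    # exp(theta A) = cos(theta) I + sin(theta) A = [[c, s], [-s, c]]
    c, s = math.cos(theta), math.sin(theta)
    psi = [c * psi0[0] + s * psi0[1], -s * psi0[0] + c * psi0[1]]
    return expval(H, psi)

# [H, A] = HA - AH, evaluated in the unrotated state
comm = [[x - y for x, y in zip(r1, r2)] for r1, r2 in zip(matmul(H, A), matmul(A, H))]
grad_commutator = expval(comm, psi0)
h = 1e-5
grad_fd = (energy(h) - energy(-h)) / (2 * h)
print(grad_commutator, grad_fd)  # both close to -2b = 0.6
```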

The Primary Source of Overhead

The overhead in ADAPT-VQE stems from the need to evaluate $\langle [\hat{H}, \hat{A}_k] \rangle$ for every operator $\hat{A}_k$ in the pool during each iteration. The Hamiltonian $\hat{H}$ is typically a sum of Pauli strings, $\hat{H} = \sum_i c_i P_i$. Similarly, the pool operators $\hat{A}_k$ are linear combinations of Pauli strings (with purely imaginary coefficients, since they are anti-Hermitian). Their commutator $[\hat{H}, \hat{A}_k]$ is itself a Hermitian operator that can be expressed as a sum of new Pauli terms. A major challenge is that the number of unique Pauli terms resulting from these commutators can be very large [13]. In hardware-efficient pools, the number of observables that need to be measured can scale as poorly as $O(N^8)$, where $N$ is the number of qubits, creating a significant measurement bottleneck [13].
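The expansion of $[\hat{H}, \hat{A}_k]$ into Pauli strings follows from single-qubit products such as $XY = iZ$: a pair of Pauli strings either commutes (and contributes nothing) or anticommutes (and contributes $2P_iQ_j$). A minimal pure-Python sketch, assuming Pauli sums stored as dictionaries from strings to coefficients, with a toy two-qubit Hamiltonian $Z_0 + Z_1 + 0.5\,X_0X_1$ and pool operator $\hat{A} = iX_0Y_1$ (both illustrative):

```python
def pauli_product(p, q):
    """Return (phase, string) such that P * Q = phase * string."""
    cyclic = {"XY": "Z", "YZ": "X", "ZX": "Y"}
    phase, out = 1 + 0j, []
    for a, b in zip(p, q):
        if a == "I" or b == "I":
            out.append(b if a == "I" else a)
        elif a == b:
            out.append("I")
        elif a + b in cyclic:          # XY = iZ, YZ = iX, ZX = iY
            phase *= 1j
            out.append(cyclic[a + b])
        else:                          # YX = -iZ, ZY = -iX, XZ = -iY
            phase *= -1j
            out.append(cyclic[b + a])
    return phase, "".join(out)

def commutator(h_terms, a_terms, tol=1e-12):
    """[H, A] = sum_ij h_i a_j (P_i Q_j - Q_j P_i), returned as {pauli: coefficient}."""
    result = {}
    for p, h in h_terms.items():
        for q, a in a_terms.items():
            phase_pq, s = pauli_product(p, q)
            phase_qp, _ = pauli_product(q, p)
            coeff = h * a * (phase_pq - phase_qp)   # zero when P and Q commute
            if abs(coeff) > tol:
                result[s] = result.get(s, 0) + coeff
    return {s: c for s, c in result.items() if abs(c) > tol}

H = {"ZI": 1.0, "IZ": 1.0, "XX": 0.5}
A = {"XY": 1j}                         # A = i X0 Y1, anti-Hermitian
comm = commutator(H, A)
print({s: c.real for s, c in comm.items()})  # {'YY': -2.0, 'XX': 2.0, 'IZ': -1.0}
```

All resulting coefficients are real, as expected for a Hermitian commutator; in a real pool, the union of such strings over all $\hat{A}_k$ is what drives the measurement count.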

Strategic Approaches to Overhead Mitigation

Recent research has focused on innovative strategies to reduce this measurement burden. The following diagram illustrates the logical relationships between the core problem and the primary mitigation strategies.

Figure 1. A conceptual map of the primary strategies for mitigating the measurement overhead in ADAPT-VQE, as identified in current research.

The strategies can be categorized into several key approaches:

Measurement Reuse and Commutativity Exploitation: This involves reusing Pauli measurement outcomes obtained during the VQE energy evaluation phase for the subsequent gradient estimation [6]. Furthermore, simultaneously measuring commuting observables contained in the commutator expressions can drastically reduce the number of distinct circuit executions required [13].

Efficient Operator Pools: Using carefully designed, minimal operator pools can reduce the number of gradients that need to be evaluated in each iteration. Research has shown that complete pools of size $2n-2$ exist for $n$ qubits; this is the minimal size of a pool capable of representing any state, and it grows only linearly with $n$, a substantial reduction compared to some traditional pools [11]. Novel pools, such as the Coupled Exchange Operator (CEO) pool, have been shown to reduce measurement costs by up to 99.6% compared to original ADAPT-VQE formulations [2].

Informationally Complete Generalized Measurements: This approach uses adaptive informationally complete positive operator-valued measures (IC-POVMs) to measure the quantum state. The resulting data can be reused to estimate all commutators in the pool via classical post-processing, potentially eliminating the dedicated quantum measurement overhead for gradients [10] [8].

Variance-Based Shot Allocation: Instead of distributing measurement shots (samples) uniformly across all Pauli terms, this technique allocates more shots to terms with higher estimated variance, reducing the total number of shots required to achieve a desired precision [6].

Quantitative Analysis of Mitigation Performance

The effectiveness of these strategies is quantified through numerical simulations on various molecular systems. The table below summarizes key performance metrics reported in recent studies.

Table 1: Quantitative Performance of Overhead Reduction Strategies

| Strategy | Molecular System | Key Metric | Performance Improvement | Source |
|---|---|---|---|---|
| Pauli Measurement Reuse & Grouping | H₂ to BeH₂ (4-14 qubits), N₂H₄ (16 qubits) | Average Shot Usage | Reduced to 32.29% (with grouping & reuse) and 38.59% (grouping only) vs. naive measurement. | [6] |
| Variance-Based Shot Allocation | H₂ | Total Shot Reduction | 6.71% (VMSA) and 43.21% (VPSR) relative to uniform shot distribution. | [6] |
| Variance-Based Shot Allocation | LiH | Total Shot Reduction | 5.77% (VMSA) and 51.23% (VPSR) relative to uniform shot distribution. | [6] |
| CEO Pool & Improved Subroutines | LiH, H₆, BeH₂ (12-14 qubits) | Measurement Costs | Reduction of up to 99.6% vs. original ADAPT-VQE. | [2] |
| CEO Pool & Improved Subroutines | LiH, H₆, BeH₂ (12-14 qubits) | CNOT Count / Depth | Reduction of up to 88% (count) and 96% (depth) vs. original ADAPT-VQE. | [2] |
| Efficient Gradient Measurement | General | Measurement Cost Scaling | Gradient measurement is only $O(N)$ times more expensive than a naive VQE energy evaluation. | [13] |

These results demonstrate that a combination of strategies—such as using efficient pools, reusing measurements, and optimizing shot allocation—can lead to dramatic reductions in resource requirements, bringing ADAPT-VQE closer to feasibility on near-term devices.

Experimental Protocols and Methodologies

Protocol A: Reused Pauli Measurements with Variance-Based Allocation

This protocol integrates two techniques to minimize the shot budget [6].

Initial Setup:

- Input: Molecular geometry, basis set, fermion-to-qubit mapping (e.g., Jordan-Wigner).

- Step 1 (Hamiltonian Preparation): Generate the qubit Hamiltonian $\hat{H} = \sum_i c_i P_i$.

- Step 2 (Operator Pool Preparation): Define the pool of operators $\{\hat{A}_k\}$. For each $\hat{A}_k$, compute the commutator $[\hat{H}, \hat{A}_k]$, which is expanded into a sum of Pauli terms $\sum_j d_{kj} Q_j$.

- Step 3 (Commutativity Grouping): Perform qubit-wise commutativity (QWC) or other grouping on the combined set of all Pauli strings from the Hamiltonian and all commutator expansions. This creates a set of groups $\{G_m\}$, where all Pauli strings within a group commute and can be measured simultaneously.
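A minimal sketch of Step 3, assuming a simple first-fit greedy heuristic for qubit-wise commutativity (two strings QWC-commute if, on every qubit, the letters agree or one of them is $I$). Greedy first-fit is only one of many grouping heuristics. The Pauli strings are illustrative: they come from a toy two-qubit Hamiltonian $Z_0 + Z_1 + 0.5\,X_0X_1$ and its commutators with the pool operators $iX_0Y_1$ and $iY_1$.

```python
def qwc(p, q):
    """True if Pauli strings p and q commute qubit-wise."""
    return all(a == "I" or b == "I" or a == b for a, b in zip(p, q))

def greedy_qwc_groups(paulis):
    """First-fit: put each string into the first group whose members all QWC-commute with it."""
    groups = []
    for p in paulis:
        for group in groups:
            if all(qwc(p, q) for q in group):
                group.append(p)
                break
        else:
            groups.append([p])
    return groups

hamiltonian_terms = ["ZI", "IZ", "XX"]
commutator_terms = ["YY", "XX", "IZ", "IX", "XZ"]
# Deduplicate while preserving order, then group the combined set
combined = list(dict.fromkeys(hamiltonian_terms + commutator_terms))
groups = greedy_qwc_groups(combined)
print(groups)  # [['ZI', 'IZ'], ['XX', 'IX'], ['YY'], ['XZ']]
```

Each group needs one measurement setting, so six distinct Pauli strings are covered by four settings here; graph-coloring heuristics can sometimes find fewer groups.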

Iterative ADAPT-VQE Loop:

- Step 4 (VQE Energy Evaluation): For the current ansatz $U(\vec{\theta})$, measure the energy. This involves measuring all groups $\{G_m\}$ with a variance-based shot allocation. The estimated expectation value $\langle P_i \rangle$ of each Pauli string is stored.

- Step 5 (Gradient Estimation via Reuse): For each pool operator $\hat{A}_k$, the gradient is $\langle [\hat{H}, \hat{A}_k] \rangle = \sum_j d_{kj} \langle Q_j \rangle$. Classically compute this sum by reusing the stored measurement results $\langle Q_j \rangle$ from Step 4 whenever $Q_j$ is a Pauli string that was already measured as part of the Hamiltonian or another commutator.

- Step 6 (Operator Selection and Ansatz Update): Select the operator $\hat{A}_k$ with the largest gradient magnitude, append $e^{\theta \hat{A}_k}$ to the ansatz with $\theta$ initialized to zero, and re-optimize all ansatz parameters.
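Step 5 reduces to a dictionary lookup once Pauli expectation values are cached. In the sketch below, the commutator expansions are those of a toy two-qubit Hamiltonian $Z_0 + Z_1 + 0.5\,X_0X_1$ with pool operators $iX_0Y_1$ and $iY_1$, and the cached values are made-up numbers standing in for Step 4 results; `reuse_gradients` is a hypothetical helper. It returns the gradients that can be assembled entirely from cached data, together with the Pauli strings that would still need dedicated measurements.

```python
def reuse_gradients(commutators, cached):
    """Assemble <[H, A_k]> = sum_j d_kj <Q_j> from cached Pauli expectation values.

    commutators: {pool_label: {pauli: real coefficient d_kj}}
    cached:      {pauli: estimated <Q_j>} from the energy-evaluation step
    """
    gradients, missing = {}, set()
    for label, terms in commutators.items():
        absent = [q for q in terms if q not in cached]
        if absent:
            missing.update(absent)   # these strings still need extra measurements
        else:
            gradients[label] = sum(d * cached[q] for q, d in terms.items())
    return gradients, missing

commutators = {
    "iX0Y1": {"YY": -2.0, "XX": 2.0, "IZ": -1.0},
    "iY1": {"IX": 2.0, "XZ": -1.0},
}
cached = {"ZI": -0.95, "IZ": -0.95, "XX": 0.25, "YY": -0.25}  # hypothetical Step 4 estimates
gradients, missing = reuse_gradients(commutators, cached)
print(gradients, sorted(missing))
```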

Protocol B: AIM-ADAPT-VQE with IC-POVMs

This protocol replaces computational basis measurements with informationally complete generalized measurements [10] [8].

Initial Setup:

- Step 1 (POVM Design): Choose an informationally complete POVM. This is a set of operators $\{M_\nu\}$ such that the probabilities $p_\nu = \langle \psi \rvert M_\nu \lvert \psi \rangle$ fully characterize the quantum state $\lvert \psi \rangle$.

Iterative ADAPT-VQE Loop:

- Step 2 (IC State Tomography): Prepare the current ansatz state $\lvert \psi^{(n)} \rangle$ and perform the IC-POVM measurement. This yields a set of frequencies $f_\nu$ that approximate the true probabilities $p_\nu$.

- Step 3 (Classical Shadow Reconstruction): From the IC measurement data, construct a "classical shadow" of the quantum state. This shadow is a classical data structure that can be used to efficiently estimate the expectation values of a large number of observables.

- Step 4 (Gradient Estimation via Post-processing): For every operator $\hat{A}_k$ in the pool, use the classical shadow to compute the expectation value $\langle [\hat{H}, \hat{A}_k] \rangle$ entirely through classical computation. No further quantum measurements are required for the gradients.

- Step 5 (Operator Selection and Ansatz Update): Proceed as in Protocol A.
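Steps 2 to 4 can be illustrated with the standard random-Pauli classical-shadow estimator, swapped in here as a simpler stand-in for the adaptive IC-POVMs that AIM-ADAPT-VQE actually uses. Each snapshot measures every qubit in a uniformly random $X$, $Y$, or $Z$ basis; a Pauli string is then estimated by averaging $\prod_q 3\,b_q$ over snapshots whose bases match it on its support (and zero otherwise). The Bell-state input, the commutator coefficients, the snapshot count, and all function names are illustrative assumptions.

```python
import math
import random

S2 = 1 / math.sqrt(2)
# Basis-change unitaries that map the +1 eigenstate of X or Y to |0>
ROT = {"X": [[S2, S2], [S2, -S2]],            # Hadamard
       "Y": [[S2, -1j * S2], [S2, 1j * S2]]}  # H S^dagger

def apply_1q(u, q, n, state):
    """Apply a 2x2 unitary u to qubit q (qubit 0 is the most significant bit)."""
    out, mask = list(state), 1 << (n - 1 - q)
    for idx in range(len(state)):
        if not idx & mask:
            a0, a1 = state[idx], state[idx | mask]
            out[idx] = u[0][0] * a0 + u[0][1] * a1
            out[idx | mask] = u[1][0] * a0 + u[1][1] * a1
    return out

def snapshot(state, n, rng):
    """Measure each qubit in a random Pauli basis; return (bases, +/-1 outcomes)."""
    bases = [rng.choice("XYZ") for _ in range(n)]
    rotated = state
    for q, basis in enumerate(bases):
        if basis != "Z":
            rotated = apply_1q(ROT[basis], q, n, rotated)
    probs = [abs(amp) ** 2 for amp in rotated]
    idx = rng.choices(range(len(probs)), weights=probs)[0]
    return bases, [1 - 2 * ((idx >> (n - 1 - q)) & 1) for q in range(n)]

def shadow_estimate(pauli, shots):
    """Unbiased estimate of <P>: product of 3 * outcome on matching qubits, else 0."""
    total = 0.0
    for bases, outcomes in shots:
        value = 1.0
        for p, b, o in zip(pauli, bases, outcomes):
            if p == "I":
                continue
            if p != b:
                value = 0.0
                break
            value *= 3 * o
        total += value
    return total / len(shots)

rng = random.Random(7)
bell = [S2 + 0j, 0j, 0j, S2 + 0j]                 # (|00> + |11>)/sqrt(2)
shots = [snapshot(bell, 2, rng) for _ in range(20000)]
# The same snapshots can be reused for every commutator in the pool (classical post-processing)
commutator = {"YY": -2.0, "XX": 2.0, "IZ": -1.0}  # exact value on the Bell state: 4
grad = sum(d * shadow_estimate(p, shots) for p, d in commutator.items())
print(round(grad, 2))
```

The estimate carries statistical noise that grows with the weight of each Pauli string (roughly as $3^{w}$ for weight $w$), which is one reason the measurement design, not just the reuse, matters in practice.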

The following workflow diagram contrasts these two distinct experimental protocols.

Figure 2. A comparative workflow of two primary experimental protocols for mitigating measurement overhead in ADAPT-VQE.

The Scientist's Toolkit: Essential Research Reagents

Table 2: Key Components for ADAPT-VQE Overhead Research

| Tool / Component | Function / Role | Implementation Notes |
|---|---|---|
| Qubit Hamiltonian | Encodes the electronic structure problem of the target molecule. Serves as $\hat{H}$ in the gradient expression. | Derived via electronic structure theory (e.g., Hartree-Fock) and a fermion-to-qubit mapping (Jordan-Wigner, Bravyi-Kitaev). |
| Operator Pool | A predefined set of operators ($\{\hat{A}_k\}$) from which the adaptive ansatz is built. | Choice of pool (fermionic, qubit-excitation, CEO, minimal complete) critically impacts convergence and overhead [2] [11]. |
| Commutativity Grouping Algorithm | Groups Pauli operators from $\hat{H}$ and $[\hat{H}, \hat{A}_k]$ into commuting families to minimize circuit executions. | Qubit-Wise Commutativity (QWC) is common; more advanced grouping (e.g., based on graph coloring) can offer further gains [6] [13]. |
| Variance-Based Shot Allocator | A classical routine that dynamically distributes a shot budget among Pauli terms based on their variance to minimize total statistical error. | Can be applied to both Hamiltonian and gradient measurement [6]. |
| IC-POVM Scheme | An informationally complete measurement strategy that allows full reconstruction of the quantum state for classical post-processing. | Replaces standard Pauli measurements. Enables gradient estimation without additional quantum resources after the initial IC measurement [10] [8]. |
| Classical Optimizer | A numerical optimization algorithm used to minimize the energy with respect to the ansatz parameters. | While not directly part of gradient measurement, its efficiency affects the total number of ADAPT-VQE iterations and thus the overall measurement cost. |

Significant progress has been made in deconstructing and mitigating the measurement overhead associated with gradient evaluations in ADAPT-VQE. The research community has moved from identifying the problem of $O(N^8)$ measurement scaling to developing sophisticated strategies that can reduce shot requirements by over 99% and pool sizes to a linear $O(N)$ scaling [2] [11] [13]. The core insight is that the overhead is not an immutable feature of the algorithm but can be dramatically reduced through strategic Pauli reuse, efficient pooling, advanced measurement techniques, and optimized resource allocation.

Future research will likely focus on the integration and co-optimization of these strategies. Promising directions include tailoring minimal pools to specific molecular symmetries to prevent convergence issues [11], combining classical shadow techniques with efficient pools, and developing hardware-aware shot allocation that also accounts for device-specific error rates. As these techniques mature, the path to realizing ADAPT-VQE's potential for accurate quantum chemistry simulations on near-term hardware becomes increasingly clear.

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) has emerged as a promising algorithm for molecular simulations on noisy intermediate-scale quantum (NISQ) devices. Unlike fixed-structure ansätze, ADAPT-VQE iteratively constructs a problem-tailored quantum circuit by selecting operators from a predefined pool, typically achieving higher accuracy with fewer quantum gates [2]. However, this advantage comes at a significant cost: a substantial measurement overhead that hinders practical implementation on current hardware. This measurement overhead scales poorly because it depends directly on two key factors: the number of qubits ($n$) and the size of the operator pool ($N_{\text{pool}}$).

Within the broader context of ADAPT-VQE measurement overhead research, understanding this scaling relationship is crucial for developing resource-efficient quantum simulations. The algorithm's iterative nature requires extensive quantum measurements for both the operator selection step and energy evaluation, creating a bottleneck that grows rapidly with system size [14] [4]. This article analyzes the fundamental scaling problems, reviews recent mitigation strategies with quantitative comparisons, and details experimental protocols that are pushing these algorithms toward practical utility on near-term quantum devices.

The Fundamental Scaling Challenge

The poor scaling of measurement costs in ADAPT-VQE arises from specific algorithmic steps that depend on the qubit count and operator pool size.

Operator Selection and its Dependence on Pool Size

The core of the ADAPT-VQE algorithm involves iteratively growing an ansatz by selecting the most beneficial operator from a pool at each step. The selection criterion for a pool operator $A_i$ is often based on the gradient of the energy with respect to the new parameter, $g_i = \left| \partial E / \partial \theta_i \right|_{\theta_i = 0}$ [10]. Evaluating this gradient for every operator in the pool during each iteration requires a number of measurements that scales linearly with the pool size $N_{\text{pool}}$ [11]. Early implementations of ADAPT-VQE used fermionic operator pools (e.g., UCCGSD) whose size scales as $O(n^4)$ or worse, creating a massive measurement bottleneck even for modest system sizes [2].

Qubit Count and Hamiltonian Measurement

The second major source of overhead stems from evaluating the energy expectation value $E = \langle \psi \rvert H \lvert \psi \rangle$. The molecular Hamiltonian $H$ is decomposed into a linear combination of $N_{\text{Pauli}}$ Pauli operators. The number of these fundamental terms scales as $O(n^4)$ with the number of qubits $n$ [15]. Although measurement strategies can group commuting Pauli operators to reduce the number of distinct circuit executions, the overall measurement budget required to achieve a target energy precision still grows significantly with the number of qubits [10].

Table 1: Fundamental Sources of Measurement Overhead in ADAPT-VQE

| Overhead Source | Scaling Relationship | Description |
|---|---|---|
| Operator Pool Size | $O(n^4)$ for UCCGSD pools | Number of candidate operators evaluated each iteration |
| Hamiltonian Term Count | $O(n^4)$ | Number of Pauli terms in the molecular Hamiltonian |
| Gradient Evaluation | Linear in $N_{\text{pool}}$ | Measurements required for operator selection per iteration |

The following diagram illustrates how these factors contribute to the overall measurement overhead in a standard ADAPT-VQE workflow:

Mitigation Strategies and Their Quantitative Impact

Research has produced three primary strategies to combat the measurement overhead: using more compact operator pools, employing efficient measurement techniques, and modifying the optimization process itself.

Minimal Complete Pools and Symmetry Adaptation

A fundamental breakthrough came from the identification of minimal complete pools. It has been proven that operator pools of size 2n - 2 can represent any state in the Hilbert space if chosen appropriately, and that this is the minimal size for such "complete" pools [11]. This reduces the pool size scaling from O(n4) to O(n), offering a dramatic reduction in the number of gradients to evaluate each iteration. Furthermore, incorporating symmetry rules into these pools ensures they respect the conservation laws of the system being simulated, preventing convergence issues [11].

Efficient Energy and Gradient Estimation Techniques

Beyond shrinking the pool, advanced measurement techniques significantly reduce the cost of evaluating the energy and gradients:

- AIM-ADAPT-VQE: This approach uses Adaptive Informationally complete generalised Measurements (AIM) to evaluate the energy. The key advantage is that the same IC measurement data can be reused to estimate all the commutators for the operator pool gradients using only classically efficient post-processing, potentially eliminating the measurement overhead for operator selection [10].

- Classical Shadows and Grouping: Other methods leverage classical shadows and Hamiltonian term grouping to reduce the number of distinct measurements required for energy evaluation, though these are more general VQE improvements that also benefit ADAPT-VQE [2].
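The data-reuse idea shared by AIM and classical shadows can be illustrated with the simplest IC scheme, random single-qubit Pauli measurements. This is a sketch under loud assumptions: it is not the AIM procedure (which uses adaptively optimized POVMs), the quantum device is replaced by exact Born-rule sampling of a product state, and all names are hypothetical. The point is that one batch of snapshots yields an estimate for any Pauli string, whether it comes from the Hamiltonian or from a gradient commutator.

```python
import random


def sample_pauli_shadows(bloch_vectors, n_snapshots, rng):
    """Simulate random single-qubit Pauli-basis measurements of a product state.

    bloch_vectors: per-qubit (x, y, z) Bloch vectors; a product state is assumed
    so that each qubit can be sampled independently with the Born rule.
    Returns snapshots as lists of (basis, outcome) pairs, outcome in {+1, -1}.
    """
    axis = {"X": 0, "Y": 1, "Z": 2}
    snapshots = []
    for _ in range(n_snapshots):
        snap = []
        for r in bloch_vectors:
            basis = rng.choice("XYZ")                 # uniformly random basis
            p_plus = (1 + r[axis[basis]]) / 2         # Born rule for the +1 outcome
            snap.append((basis, 1 if rng.random() < p_plus else -1))
        snapshots.append(snap)
    return snapshots


def shadow_estimate(pauli, snapshots):
    """Unbiased estimate of <pauli> from random-Pauli classical shadows."""
    total = 0.0
    for snap in snapshots:
        value = 1.0
        for letter, (basis, outcome) in zip(pauli, snap):
            if letter == "I":
                continue
            if letter != basis:
                value = 0.0                           # basis mismatch: contributes 0
                break
            value *= 3 * outcome                      # inverts the shadow channel
        total += value
    return total / len(snapshots)


rng = random.Random(7)
state = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]            # |0> (x) |+>
snapshots = sample_pauli_shadows(state, 20000, rng)
# One set of snapshots serves every observable: Hamiltonian and commutator terms alike.
estimates = {p: shadow_estimate(p, snapshots) for p in ["ZI", "IX", "ZX", "XI", "YY"]}
```

The exact values for this state are 1, 1, 1, 0, and 0; the estimates approach them as the number of snapshots grows, and no observable required its own dedicated measurement.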

Algorithmic Modifications: GGA-VQE

The Greedy Gradient-free Adaptive VQE (GGA-VQE) algorithm addresses the overhead by modifying the core adaptive process. Instead of the standard gradient-based selection followed by global optimization, GGA-VQE uses an analytic, gradient-free approach. It determines the best operator and its optimal parameter simultaneously by exploiting the fact that the energy landscape for a single-parameter gate is a simple trigonometric function [14] [4]. This avoids the high-dimensional global optimization and can be more resilient to statistical shot noise, though it may produce longer circuits.

Table 2: Quantitative Resource Reduction from Improved ADAPT-VQE Variants

| Molecule (Qubits) | ADAPT-VQE Variant | Reduction in CNOT Count | Reduction in CNOT Depth | Reduction in Measurement Cost |
|---|---|---|---|---|
| LiH (12 qubits) | CEO-ADAPT-VQE* | Up to 88% | Up to 96% | Up to 99.6% |
| H6 (12 qubits) | CEO-ADAPT-VQE* | Up to 88% | Up to 96% | Up to 99.6% |
| BeH2 (14 qubits) | CEO-ADAPT-VQE* | Up to 88% | Up to 96% | Up to 99.6% |

The table above demonstrates the dramatic resource reductions achieved by state-of-the-art variants like CEO-ADAPT-VQE*, which combines a novel "Coupled Exchange Operator" pool with other improvements [2]. The updated workflow incorporating these mitigation strategies is shown below:

Experimental Protocols and Research Toolkit

For researchers aiming to implement or benchmark these strategies, understanding the experimental protocols is essential.

Protocol for AIM-ADAPT-VQE

The key experiment demonstrating the mitigation of measurement overhead using informationally complete measurements involves the following steps [10]:

- Initialization: Begin with a reference state (e.g., Hartree-Fock) and an empty ansatz.

- Informationally Complete Measurement: At each ADAPT iteration, perform an IC measurement of the current quantum state ψ(k). This involves projecting the state onto a basis defined by a specific positive operator-valued measure (POVM).

- Classical Post-processing: Use the classical snapshot of the state (the "classical shadow") obtained from the IC measurement to estimate the energy expectation value E = ⟨ψ(k)∣H∣ψ(k)⟩.

- Gradient Estimation from Shadows: Crucially, reuse the same classical shadow to compute the gradients gᵢ for all operators Aᵢ in the pool. This is possible because each gradient is the expectation value of the commutator [H, Aᵢ], which expands into observables that can be estimated from the existing data.

- Ansatz Growth and Iteration: Select the operator with the largest |gᵢ|, append it to the ansatz with an initial parameter of zero, and proceed to the next iteration until convergence.

This protocol was validated numerically for several H₄ Hamiltonians, showing that the measurement data for energy evaluation could be reused for operator selection with no additional quantum measurement overhead [10].
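The gradient observables in the protocol above are commutators [H, Aᵢ]. For operators written as sums of Pauli strings, the commutator can be expanded with the single-qubit product rules (XY = iZ and its cyclic permutations), which yields the list of Pauli strings whose expectation values must be estimated. The sketch assumes operators are stored as {Pauli string: coefficient} dictionaries; the two-qubit H and pool operator A are made-up illustrations rather than a molecular Hamiltonian, and the helper names are hypothetical.

```python
# Single-qubit products P*Q = phase * R for distinct non-identity Paulis.
_PRODUCTS = {
    ("X", "Y"): (1j, "Z"), ("Y", "X"): (-1j, "Z"),
    ("Y", "Z"): (1j, "X"), ("Z", "Y"): (-1j, "X"),
    ("Z", "X"): (1j, "Y"), ("X", "Z"): (-1j, "Y"),
}


def multiply_paulis(p, q):
    """Return (phase, string) with P*Q = phase * string, qubit by qubit."""
    phase = 1 + 0j
    letters = []
    for a, b in zip(p, q):
        if a == "I":
            letters.append(b)
        elif b == "I":
            letters.append(a)
        elif a == b:
            letters.append("I")                 # P*P = I
        else:
            factor, letter = _PRODUCTS[(a, b)]
            phase *= factor
            letters.append(letter)
    return phase, "".join(letters)


def commutator(h, a, tol=1e-12):
    """[H, A] for operators stored as {pauli_string: coefficient} dicts."""
    result = {}
    for p, cp in h.items():
        for q, cq in a.items():
            phase_pq, string = multiply_paulis(p, q)
            phase_qp, _ = multiply_paulis(q, p)   # same string, possibly opposite phase
            result[string] = result.get(string, 0) + cp * cq * (phase_pq - phase_qp)
    return {k: v for k, v in result.items() if abs(v) > tol}


def expect_on_basis_state(op, bits):
    """<b|op|b> for a computational basis state b: only I/Z strings survive."""
    total = 0
    for p, c in op.items():
        if any(letter in "XY" for letter in p):
            continue
        sign = 1
        for letter, bit in zip(p, bits):
            if letter == "Z" and bit == "1":
                sign = -sign
        total += c * sign
    return total


H = {"ZI": 0.5, "IZ": 0.5, "XX": 0.2}     # made-up two-qubit Hamiltonian
A = {"XY": 1j}                            # anti-Hermitian pool operator i*XY
grad_op = commutator(H, A)                # Pauli expansion of [H, A]
gradient = expect_on_basis_state(grad_op, "00")
```

Here grad_op contains XX, YY, and IZ with real coefficients 1, −1, and −0.4 (real because A is anti-Hermitian), and on the reference state |00⟩ only the IZ term survives, giving a gradient of −0.4.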

Protocol for GGA-VQE

The experiment for the gradient-free GGA-VQE follows a different protocol, designed for noise resilience [14] [4]:

- Landscape Function Construction: At iteration k, for each operator Uⱼ(θ) in the pool, construct its energy landscape function fⱼ(θ) = ⟨ψ(k)|Uⱼ†(θ) H Uⱼ(θ)|ψ(k)⟩. This is done by measuring the energy at a small, fixed number of values of θ. For a single Pauli rotation Uⱼ(θ) = exp(−iθPⱼ/2), the landscape has the form fⱼ(θ) = a + b cos θ + c sin θ, so three angles (e.g., θ = 0, π/2, and −π/2) determine it.

- Analytical Minimization: For each fⱼ(θ), determine the angle θⱼ* that minimizes the function. Since fⱼ(θ) is a simple trigonometric function for standard pool operators, this minimization is classically trivial and exact.

- Operator and Angle Selection: Identify the operator and angle that together yield the lowest energy: (j*, θ*) = argmin over j and θ of fⱼ(θ).

- Greedy Appending: Directly append Uⱼ*(θ*) to the current ansatz. Do not re-optimize previous parameters.

- Iteration: Repeat until convergence. This protocol was successfully executed on a 25-qubit error-mitigated QPU for a 25-body Ising model [4].
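The analytical minimization and greedy selection steps can be sketched for the simplest case, where each candidate gate is a Pauli rotation Uⱼ(θ) = exp(−iθPⱼ/2) with Pⱼ² = I. Under that assumption the landscape is a + b cos θ + c sin θ = a + R cos(θ − φ), so three energy evaluations fix it and its minimum is a − √(b² + c²). Fermionic or qubit-excitation generators with more eigenvalues produce higher-order trigonometric series and need more angles; that case is not covered. `energy_fn` stands in for a measured energy and, like the other names here, is hypothetical.

```python
import math


def fit_landscape(f0, f_plus, f_minus):
    """Fit f(theta) = a + b*cos(theta) + c*sin(theta) from f(0), f(pi/2), f(-pi/2).

    Assumes the candidate gate is exp(-i*theta*P/2) with P a Pauli string (P^2 = I).
    """
    a = (f_plus + f_minus) / 2
    b = f0 - a
    c = (f_plus - f_minus) / 2
    return a, b, c


def minimize_landscape(a, b, c):
    """Exact minimizer: f = a + R*cos(theta - phi), so the minimum is a - R."""
    theta_star = math.atan2(-c, -b)
    return theta_star, a - math.hypot(b, c)


def greedy_select(energy_fn, pool):
    """energy_fn(op, theta) returns an energy; return the best (op, theta, energy)."""
    best = None
    for op in pool:
        coeffs = fit_landscape(energy_fn(op, 0.0),
                               energy_fn(op, math.pi / 2),
                               energy_fn(op, -math.pi / 2))
        theta, e_min = minimize_landscape(*coeffs)
        if best is None or e_min < best[2]:
            best = (op, theta, e_min)
    return best


# Usage with synthetic landscapes standing in for measured energies.
LANDSCAPES = {"op_A": (1.0, 0.5, -0.3), "op_B": (1.0, 0.1, 0.1)}


def synthetic_energy(op, theta):
    a, b, c = LANDSCAPES[op]
    return a + b * math.cos(theta) + c * math.sin(theta)


best_op, best_theta, best_energy = greedy_select(synthetic_energy, LANDSCAPES)
```

Only three energy evaluations per candidate are used and no gradient is ever estimated; the selected gate is appended at θ* and never revisited, matching the greedy appending step.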

The Scientist's Toolkit: Key Research Reagents

Table 3: Essential Components for ADAPT-VQE Measurement Overhead Research

| Component | Function & Role in Overhead Reduction |
|---|---|
| Minimal Complete Pools | Reduced-size operator pools (e.g., of size 2n-2) that are provably complete, directly addressing the O(n⁴) pool size scaling [11]. |
| Symmetry-Adapted Pools | Pools designed to respect system symmetries (e.g., particle number, spin). Essential for preventing convergence issues and ensuring efficient state preparation [11]. |
| Coupled Exchange Operator (CEO) Pool | A novel pool designed for hardware efficiency, contributing to significant reductions in CNOT counts and measurement costs [2]. |
| Informationally Complete (IC) POVMs | Specialized generalized measurements whose data provides a full description of the quantum state, enabling the reuse of measurement data for multiple observables [10]. |
| Classical Shadows | Classical snapshots of the quantum state derived from IC measurements. Enable the estimation of many operator gradients through classical post-processing [10] [2]. |


The measurement overhead in ADAPT-VQE, once a major roadblock, is being systematically addressed through a multi-faceted research effort. The poor scaling with qubit count and operator pool size is being mitigated at its root by developing minimal O(n)-sized pools, reusing measurement data via informational completeness, and redesigning algorithms for noise resilience. Quantitative results are striking, with state-of-the-art implementations demonstrating up to 99.6% reduction in measurement costs while simultaneously reducing circuit depths by up to 96% [2]. While challenges remain, particularly in the simulation of large, strongly correlated molecules, these advances have significantly bridged the gap between theoretical algorithm design and practical implementation on near-term quantum hardware. The ongoing research into ADAPT-VQE measurement overhead continues to be a critical enabler for the ultimate goal of achieving a quantum advantage in quantum chemistry and materials science.

ADAPT-VQE faces a critical measurement overhead barrier from gradient evaluations and energy estimation, making simulations of chemically relevant systems infeasible on near-term devices. Research focuses on strategic Pauli reuse, optimized operator pools, and modified algorithms to overcome this.

| Strategy | Core Principle | Key Improvement | Experimental Validation |
|---|---|---|---|
| Pauli Reuse & Shot Allocation [6] | Reusing Pauli measurements from VQE optimization in subsequent gradient evaluations; allocating shots based on variance. | Shot reduction to 32.29% of naive approach [6]. | Tested on H₂ (4 qubits) to BeH₂ (14 qubits) and N₂H₄ (16 qubits) [6]. |
| Informationally Complete POVMs [10] [8] | Using adaptive informationally complete generalized measurements (AIM); IC measurement data is reused to estimate all commutators classically. | Eliminates dedicated measurements for gradient evaluations for some systems [10] [8]. | Demonstrated for H₄ and octatetraene Hamiltonians; converges with no extra overhead [10] [8]. |
| Minimal Complete Pools [11] | Using rigorously proven, minimal-sized operator pools of size 2n-2 that are complete for any state in Hilbert space. | Reduces the pool size, and hence the per-iteration gradient measurements, from quartic O(n⁴) to linear O(n) in the number of qubits [11]. | Classically simulated for several strongly correlated molecules [11]. |
| Greedy Gradient-Free Approach [14] | Replacing gradient-based selection with analytical, gradient-free optimization of one-dimensional "landscape functions." | Improved resilience to statistical noise [14]. | Demonstrated on a 25-qubit error-mitigated QPU for a 25-body Ising model [14]. |
| Coupled Exchange Operator (CEO) Pool [2] | Novel operator pool that produces hardware-efficient circuits and converges with fewer measurements and iterations. | Combined with other improvements, reduces CNOT count by up to 88% and measurement costs by up to 99.6% for 12-14 qubit molecules [2]. | Tested on LiH, H₆, and BeH₂ [2]. |
| Batched Operator Addition [7] | Adding multiple operators with the largest gradients to the ansatz in a single iteration ("batched ADAPT-VQE"). | Significantly reduces the number of gradient computation cycles, thereby reducing total measurement overhead [7]. | Applied to O₂, CO, and CO₂ molecules involved in carbon monoxide oxidation [7]. |

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) is a leading algorithm for molecular simulations on Noisy Intermediate-Scale Quantum (NISQ) devices [2]. Its strength lies in its ability to construct compact, problem-specific ansätze iteratively, which helps to mitigate issues like deep quantum circuits and the barren plateaus that plague fixed-structure ansätze [6] [2]. However, a major drawback hindering its practical application is the prohibitively high measurement (shot) overhead [6].

This overhead originates from the algorithm's core iterative cycle [14]:

- Operator Selection: In each iteration, the algorithm must evaluate the energy gradient with respect to every operator in a predefined "pool." This involves measuring the expectation values of the commutator [H, A_i] for each pool operator A_i, a process that requires a massive number of quantum measurements [6] [7].

- Parameter Optimization: After an operator is selected and added to the ansatz, the variational parameters must be optimized. This requires repeated measurements of the molecular Hamiltonian's expectation value, which is itself a sum of many Pauli strings [6] [15].

The measurement cost of the operator selection step scales with the size of the operator pool. Early ADAPT-VQE implementations used fermionic pools (e.g., UCCSD) whose size grows as a polynomial O(N²n²), where N is the number of spin-orbitals and n is the number of electrons [7]. This high scaling creates a critical barrier for simulating chemically relevant systems like industrially important molecules or complex reaction pathways [7].

Detailed Experimental Protocols and Methodologies

Protocol A: Shot-Efficient ADAPT-VQE via Reused Pauli Measurements

This protocol integrates two strategies to minimize the number of shots (measurements) required [6].

- Pauli Measurement Reuse: The Pauli strings measured during the VQE parameter optimization step are stored. In the subsequent ADAPT-VQE iteration, the operator selection step reuses any identical Pauli strings required for the gradient measurements, avoiding redundant measurements [6].

- Variance-Based Shot Allocation: For both the Hamiltonian and the gradient observables, the total shot budget is distributed non-uniformly among the measurable Pauli terms. The number of shots for term i is proportional to |α_i|·√Var_i, where Var_i is the variance of the term and α_i is its coefficient. For a fixed total budget, this allocation minimizes the statistical error in the estimated expectation value [6].
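The allocation rule can be checked with a few lines of arithmetic. Minimizing the estimator variance Σᵢ αᵢ² Varᵢ / Nᵢ subject to a fixed total Σᵢ Nᵢ gives, by a Lagrange-multiplier argument on the continuous relaxation, Nᵢ ∝ |αᵢ|·√Varᵢ. The sketch assumes the per-term variances are already known (for example, estimated in earlier iterations); the coefficients, variances, and rounding scheme are illustrative choices, and the function names are hypothetical.

```python
import math


def allocate_shots(coeffs, variances, total_shots):
    """Split total_shots so that N_i is proportional to |alpha_i| * sqrt(Var_i)."""
    weights = [abs(a) * math.sqrt(v) for a, v in zip(coeffs, variances)]
    norm = sum(weights)
    shots = [int(total_shots * w / norm) for w in weights]  # round down
    # Hand the few leftover shots to the terms with the largest weights.
    leftover = total_shots - sum(shots)
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    for i in order[:leftover]:
        shots[i] += 1
    return shots


def estimator_variance(coeffs, variances, shots):
    """Variance of sum_i alpha_i * (sample mean of term i) with independent shots."""
    total = 0.0
    for a, v, n in zip(coeffs, variances, shots):
        if a * a * v == 0:
            continue          # term contributes no statistical error
        if n == 0:
            return math.inf   # a noisy term that was never measured
        total += a * a * v / n
    return total


coeffs = [1.0, 0.5, 0.1]
variances = [1.0, 4.0, 1.0]
optimal = allocate_shots(coeffs, variances, 1000)
uniform = [333, 333, 334]
```

With these numbers the variance-weighted split gives [477, 476, 47] shots and an estimator variance of roughly 0.0044, versus roughly 0.0060 for the uniform split with the same 1000-shot budget.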

Procedure:

1. Initialization: Prepare the initial reference state (e.g., Hartree-Fock). Group the Hamiltonian Pauli terms and the gradient-observable Pauli terms (derived from the commutators [H, A_i]) using qubit-wise commutativity (QWC).

2. VQE Optimization Loop:

   - For the current ansatz, measure the energy expectation value using variance-based shot allocation. Store all measured Pauli outcomes.

   - Update the classical parameters to minimize the energy.

3. ADAPT Operator Selection:

   - For each operator in the pool, construct the gradient observable [H, A_i] and identify its constituent Pauli strings.

   - For each required Pauli string, check whether it was measured in Step 2. If not, measure it with variance-based shot allocation.

   - Calculate the gradient for each operator using the (reused or new) measurement results.

4. Ansatz Update: Select the operator with the largest gradient magnitude, append it to the ansatz with an initial parameter of zero, and return to Step 2.
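The reuse check (whether a Pauli string needed for a gradient was already measured during the VQE optimization) amounts to a lookup keyed by Pauli string. The class below is a hypothetical helper, not code from [6]; it assumes estimates are reused only for the same state, namely the optimized parameters from the last VQE step, so the cache is cleared whenever the ansatz or its parameters change. All Pauli strings and values are illustrative.

```python
class PauliMeasurementCache:
    """Hypothetical store of Pauli-string estimates for a single quantum state."""

    def __init__(self) -> None:
        self._estimates: dict[str, float] = {}

    def record(self, pauli: str, value: float) -> None:
        self._estimates[pauli] = value

    def clear(self) -> None:
        """Call whenever the ansatz or its parameters change."""
        self._estimates.clear()

    def split(self, required: list[str]) -> tuple[dict[str, float], list[str]]:
        """Return (reused estimates, Pauli strings that still need measuring)."""
        reused = {p: self._estimates[p] for p in required if p in self._estimates}
        missing = sorted({p for p in required if p not in self._estimates})
        return reused, missing


cache = PauliMeasurementCache()
# Estimates gathered while evaluating the final VQE energy (illustrative values).
for pauli, value in {"ZI": 0.98, "IZ": 0.97, "ZZ": 0.95, "XX": 0.04}.items():
    cache.record(pauli, value)

# Pauli strings appearing in the gradient commutators (illustrative).
gradient_paulis = ["XX", "YY", "IZ", "XY", "YY"]
reused, to_measure = cache.split(gradient_paulis)
```

In this toy case two of the four distinct gradient strings (XX and IZ) come for free, and only XY and YY are sent to the device.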

Protocol B: AIM-ADAPT-VQE with Informationally Complete POVMs

This protocol replaces standard computational basis measurements with Adaptive Informationally complete generalized Measurements (AIM) to enable extensive data reuse [10] [8].

- Informationally Complete (IC) POVMs: These are generalized measurements whose outcomes provide sufficient information to reconstruct the entire quantum state (density matrix).

- Data Reuse for Gradients: The same IC-POVM measurement data used to estimate the energy can be classically post-processed to compute the gradients for all operators in the pool simultaneously, eliminating the need for dedicated gradient measurements [10] [8].

Procedure:

1. Initialization: Prepare the initial reference state. Choose an IC-POVM scheme (e.g., dilated POVMs).

2. State Tomography: On the quantum computer, perform the IC-POVM on the current ansatz state to collect outcome statistics.

3. Classical Post-Processing:

   - Energy Estimation: Estimate the expectation value of the Hamiltonian from the POVM data.

   - Gradient Estimation: For every operator A_i in the pool, estimate the expectation value of the commutator [H, A_i] from the same POVM data.

4. Ansatz Update: Select the operator with the largest gradient magnitude, append it to the ansatz, re-optimize the parameters, and return to Step 2.

Protocol C: Qubit-ADAPT with Minimal Complete Pools

This protocol reduces the pool size itself, which directly cuts the number of gradients that need evaluation each iteration [11].

- Minimal Complete Pools: The pool is constructed from Pauli strings that form a set of size 2n-2 (where n is the number of qubits), which is proven to be the minimal size required to represent any state in the Hilbert space [11].

- Symmetry Adaptation: The pool must be chosen to obey the symmetries of the simulated molecule (e.g., particle number, spin symmetry). Ignoring this leads to "symmetry roadblocks" that prevent convergence [11].

Procedure:

- Pool Generation: Prior to the calculation, generate a minimal complete pool of Pauli operators that also respects the known symmetries of the molecular Hamiltonian.

- ADAPT-VQE Loop: Run the standard ADAPT-VQE algorithm, but only compute gradients for the small number of operators in this minimal pool.
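As a small illustration of symmetry adaptation, one symmetry that is easy to test for a Pauli pool is fermionic number parity, which under the Jordan-Wigner mapping is the product of Z on every qubit. A Pauli string commutes with it exactly when it contains an even number of X and Y letters, because each X or Y anticommutes with Z on its qubit. This sketch checks only that one symmetry (particle number and spin need additional tests), and the candidate strings are arbitrary examples, not a minimal complete pool.

```python
def conserves_parity(pauli: str) -> bool:
    """True if the string commutes with the Jordan-Wigner parity Z x Z x ... x Z.

    Each X or Y anticommutes with Z on its qubit, so the overall sign is
    (-1)**(number of X/Y letters); the string commutes when that count is even.
    """
    return sum(letter in "XY" for letter in pauli) % 2 == 0


candidates = ["YIII", "ZYII", "XYII", "YXZI", "IYXY", "XXXY"]   # arbitrary examples
parity_pool = [p for p in candidates if conserves_parity(p)]
```

Filtering of this kind is cheap and purely classical, so it belongs in the pool-generation step before any gradient is measured.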

Diagram 1: Core ADAPT-VQE workflow. The "Measure Observables" step is the primary source of measurement overhead, encompassing both energy and gradient estimations.

The Scientist's Toolkit: Key Research Reagents

In the context of ADAPT-VQE research, "research reagents" refer to the fundamental algorithmic components whose choice critically determines the performance and resource requirements of an experiment.

| Reagent | Function | Example & Rationale |
|---|---|---|
| Operator Pool | The dictionary of operators from which the ansatz is built; determines convergence and circuit efficiency [11] [2]. | Coupled Exchange Operator (CEO) Pool: A novel qubit pool that leads to hardware-efficient circuits and reduced measurement costs [2]. Minimal Complete Pools: Size 2n-2, reduces overhead to linear scaling [11]. |
| Measurement Protocol | The strategy for estimating expectation values on the quantum device. | Variance-Based Shot Allocation: Dynamically distributes a limited shot budget to minimize statistical error [6]. Informationally Complete POVMs: Allows full state reconstruction, enabling maximal data reuse [10] [8]. |
| Classical Optimizer | The algorithm that adjusts variational parameters to minimize energy. | Gradient-Free Optimizers: Used in GGA-VQE to avoid the noise associated with numerical gradient estimation, improving resilience [14]. |
| Qubit Tapering | A pre-processing technique to reduce the problem size by leveraging symmetries. | Tapering off qubits: Reduces the number of physical qubits required by identifying and removing symmetry qubits, simplifying the problem [7]. |
| Commutation Grouping | A technique to reduce the number of distinct quantum circuits required for measurement. | Qubit-Wise Commutativity (QWC): Groups Pauli terms that are measurable in the same circuit basis, reducing the number of circuit executions [6]. |


Diagram 2: A taxonomy of strategies for mitigating the ADAPT-VQE measurement overhead problem, linking high-level approaches to specific methodologies found in the literature.

Performance Benchmarks and Comparative Analysis

The performance of different overhead mitigation strategies is quantified through classical numerical simulations, measuring reductions in shot count, circuit depth, and overall resource requirements.

Quantitative Performance of Key Strategies

The table below summarizes the resource reductions achieved by state-of-the-art approaches compared to earlier ADAPT-VQE baselines.

| Method / System | H₂ (4q) | LiH (12q) | BeH₂ (14q) | Key Metric & Reduction |
|---|---|---|---|---|
| Original ADAPT-VQE (Baseline) | Baseline | Baseline | Baseline | (Reference for comparison) |
| Pauli Reuse + Shot Allocation [6] | 38.59% (grouping) & 32.29% (grouping+reuse) of baseline shots | N/A | N/A | Shot Reduction (vs. naive measurement) |
| CEO-ADAPT-VQE* [2] | N/A | Up to 99.6% reduction | Up to 99.6% reduction | Measurement Cost Reduction |
| CEO-ADAPT-VQE* [2] | N/A | Up to 88% reduction | Up to 88% reduction | CNOT Gate Count Reduction |
| CEO-ADAPT-VQE* [2] | N/A | Up to 96% reduction | Up to 96% reduction | CNOT Circuit Depth Reduction |
| Variance-Based Shot Allocation (VPSR for LiH) [6] | N/A | 51.23% of uniform shots | N/A | Shot Reduction (vs. uniform shot distribution) |

The research community has made significant strides in understanding and mitigating the critical measurement overhead barrier in ADAPT-VQE. Strategies have evolved from isolated improvements to integrated approaches that combine optimized operator pools, measurement strategies, and algorithmic modifications. The most promising results, such as those achieved with the CEO pool and integrated shot reduction methods, demonstrate reductions in measurement costs by up to 99.6% and in CNOT counts by up to 88% for molecules of 12-14 qubits, bringing the simulation of chemically relevant systems closer to feasibility on emerging hardware [2]. Future research will likely focus on further hybrid strategies, the co-design of algorithms for specific hardware, and pushing the boundaries of simulations for larger, more complex molecular systems like those involved in industrially critical processes such as carbon monoxide oxidation [7].

Modern Strategies for Measurement Reduction: From Algorithmic Innovations to Practical Implementations

Leveraging Informationally Complete (IC) Measurements for Data Reuse

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) has emerged as a promising algorithm for molecular simulations on Noisy Intermediate-Scale Quantum (NISQ) devices. Unlike fixed-structure ansätze such as Unitary Coupled Cluster (UCC), ADAPT-VQE iteratively constructs a compact, problem-tailored ansatz by appending parameterized unitary operators from a predefined pool [2] [14]. This adaptive construction significantly reduces quantum circuit depth and helps mitigate trainability issues like barren plateaus, which often plague hardware-efficient ansätze [6] [2]. However, this advantage comes at a significant cost: a substantial measurement overhead required for the operator selection and parameter optimization steps [6] [8] [7].

This measurement overhead constitutes a major bottleneck for practical implementations of ADAPT-VQE on current quantum hardware. Each iteration of the algorithm requires estimating energy gradients for all operators in the pool to identify the most promising candidate, typically involving measurements of numerous commutator operators [8] [10]. As system size grows, this overhead increases substantially, potentially scaling quartically with the number of qubits in naive implementations [11]. Consequently, intensive research efforts have focused on developing strategies to mitigate this measurement bottleneck, including operator pooling, commutator grouping, and measurement reuse techniques [6] [2] [11].

Among these strategies, approaches leveraging Informationally Complete (IC) measurements have shown remarkable potential by enabling maximal data reuse. This technical guide explores the theoretical foundation, implementation methodology, and experimental performance of IC measurement techniques for reducing the quantum resource requirements of ADAPT-VQE algorithms.

Theoretical Foundation of Informationally Complete Measurements

Basic Principles of IC-POVMs

Informationally Complete Positive Operator-Valued Measures (IC-POVMs) represent a fundamental concept in quantum information science. A POVM is a set of positive semidefinite operators {Eᵢ} that sum to the identity, satisfying the condition ∑ᵢEᵢ = I. When these operators form a basis for the space of density matrices, the POVM is deemed informationally complete, meaning that measurement statistics uniquely determine the quantum state [8] [10].

For a system of N qubits, the real vector space of Hermitian operators, which contains all density matrices, has dimension 4ᴺ, so an IC-POVM needs at least 4ᴺ elements. In practice, IC-POVMs enable full quantum state tomography, as the probabilities pᵢ = Tr(ρEᵢ) obtained from measurements provide sufficient information to reconstruct the complete density matrix ρ. This property is particularly valuable for variational quantum algorithms, where the same measurement data can be repurposed for multiple computational tasks [8].
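Both defining conditions can be checked numerically for the smallest case, a single qubit. The sketch below uses the standard tetrahedral (symmetric) IC-POVM, Eₖ = (I + nₖ·σ)/4 with four Bloch vectors nₖ at the vertices of a regular tetrahedron, with each element written in the Pauli basis (I, X, Y, Z). It illustrates the definitions only and is not the POVM construction used in AIM; tensor products of this single-qubit POVM give the 4ᴺ elements needed for N qubits. The helper names are hypothetical.

```python
import math

S = 1 / math.sqrt(3)
# Tetrahedral Bloch directions of the single-qubit symmetric IC-POVM.
TETRA = [(S, S, S), (S, -S, -S), (-S, S, -S), (-S, -S, S)]


def povm_coordinates(n):
    """E = (I + n.sigma)/4 written as coefficients of (I, X, Y, Z)."""
    return [0.25, n[0] / 4, n[1] / 4, n[2] / 4]


def det(m):
    """Determinant by Gaussian elimination with partial pivoting (small matrices)."""
    a = [row[:] for row in m]
    size = len(a)
    result = 1.0
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-15:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            result = -result
        result *= a[col][col]
        for r in range(col + 1, size):
            factor = a[r][col] / a[col][col]
            for c in range(col, size):
                a[r][c] -= factor * a[col][c]
    return result


def probabilities(bloch):
    """Born rule p_k = Tr(rho E_k) = (1 + r.n_k)/4 for rho = (I + r.sigma)/2."""
    return [(1 + sum(r * x for r, x in zip(bloch, n))) / 4 for n in TETRA]


coords = [povm_coordinates(n) for n in TETRA]
completeness = [sum(col) for col in zip(*coords)]     # (1, 0, 0, 0) means sum E_k = I
informationally_complete = abs(det(coords)) > 1e-12   # 4 linearly independent elements
```

The completeness vector comes out as (1, 0, 0, 0) and the four elements are linearly independent, so their outcome probabilities determine all three Bloch components of any single-qubit state.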

Adaptive IC Measurements (AIM)

A significant advancement in this domain is the development of Adaptive Informationally complete generalized Measurements (AIM). This approach adaptively constructs IC-POVMs tailored to specific quantum states, optimizing the measurement process for practical applications [8] [10]. Unlike fixed POVMs, AIM dynamically adjusts measurement bases based on prior results, potentially reducing the number of measurements required for accurate energy and gradient estimations in variational algorithms.

The AIM framework maintains the informational completeness property while potentially enhancing measurement efficiency by focusing resources on the most informative measurement directions. This adaptivity is particularly beneficial for molecular systems where the quantum state has specific structural properties that can be exploited for measurement optimization [8].

The AIM-ADAPT-VQE Protocol

Core Algorithmic Framework

The AIM-ADAPT-VQE protocol integrates Adaptive IC Measurements with the standard ADAPT-VQE algorithm to mitigate measurement overhead through strategic data reuse [8] [10]. The algorithm proceeds through the following key steps:

Initialization: Prepare a reference state (typically Hartree-Fock) and define an operator pool appropriate for the molecular system.

Iterative Growth Cycle:

- Energy Estimation: Perform adaptive IC measurements on the current variational state to estimate the energy expectation value.

- Gradient Estimation: Reuse the IC measurement data to classically compute gradients for all operators in the pool without additional quantum measurements.

- Operator Selection: Identify the operator with the largest gradient magnitude.

- Ansatz Expansion: Append the selected operator (with initial parameter zero) to the growing ansatz.

- Parameter Optimization: Optimize all parameters in the expanded ansatz using conventional VQE approaches.

Convergence Check: Terminate when gradient norms fall below a predefined threshold, indicating approximation of the ground state.

Data Reuse Mechanism

The crucial innovation in AIM-ADAPT-VQE lies in the reuse of IC-POVM data obtained for energy estimation to also compute the gradients for operator selection. For a pool operator Aᵢ, the gradient component is given by:

$$\left.\frac{\partial E}{\partial \theta_i}\right|_{\theta_i = 0} = \langle \psi | [H, A_i] | \psi \rangle$$

where H is the molecular Hamiltonian and the new operator enters the ansatz as exp(θᵢAᵢ) with Aᵢ anti-Hermitian [10]. In standard ADAPT-VQE, estimating these commutators requires additional quantum measurements for each pool operator. In AIM-ADAPT-VQE, however, the complete set of IC measurement statistics enables classical computation of these gradients through post-processing, effectively eliminating the measurement overhead for the operator selection step [8] [10].
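The reuse of one outcome record for both the energy and a commutator can be sketched with the qubit tetrahedral POVM. Each outcome k has a dual operator Dₖ = (I + 3nₖ·σ)/2, so an unbiased single-shot estimate of a Pauli string is the product, over its non-identity letters, of 3 times the matching component of nₖ. Assumptions, all hypothetical: a simulated product state stands in for the device, tensor-product SIC-POVMs replace AIM's adaptively chosen POVMs, and G is an arbitrary Pauli expansion standing in for a commutator [H, Aᵢ].

```python
import math
import random

S = 1 / math.sqrt(3)
TETRA = [(S, S, S), (S, -S, -S), (-S, S, -S), (-S, -S, S)]
AXIS = {"X": 0, "Y": 1, "Z": 2}


def sample_sic_outcomes(bloch_vectors, n_shots, rng):
    """Simulated per-qubit tetrahedral-POVM outcomes for a product state."""
    probs = [[(1 + sum(ri * ni for ri, ni in zip(r, n))) / 4 for n in TETRA]
             for r in bloch_vectors]
    return [[rng.choices(range(4), weights=p)[0] for p in probs]
            for _ in range(n_shots)]


def estimate_pauli(pauli, shots):
    """Dual-frame estimator: identity letters give 1, others give 3 * n_k[axis]."""
    total = 0.0
    for shot in shots:
        value = 1.0
        for letter, k in zip(pauli, shot):
            if letter != "I":
                value *= 3 * TETRA[k][AXIS[letter]]
        total += value
    return total / len(shots)


def estimate_operator(op, shots):
    """Estimate a Pauli sum {string: coefficient} from the same outcome record."""
    return sum(c * estimate_pauli(p, shots) for p, c in op.items())


rng = random.Random(11)
state = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]       # |0> (x) |+>
shots = sample_sic_outcomes(state, 20000, rng)   # one measurement record
H = {"ZI": 0.5, "IX": 0.3, "ZX": 0.2}            # exact <H> = 1.0
G = {"IX": 0.4, "ZZ": -0.1}                      # exact <G> = 0.4
energy = estimate_operator(H, shots)
gradient = estimate_operator(G, shots)           # no new measurements needed
```

Both estimates converge to their exact values from the same 20000 simulated outcomes; adding more pool operators would add only classical post-processing, not device time.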

Table 1: Key Components of the AIM-ADAPT-VQE Framework

| Component | Description | Role in Measurement Reduction |
|---|---|---|
| IC-POVM | Set of measurement operators forming a basis for density matrices | Enables complete characterization of quantum state from measurement statistics |
| AIM Framework | Adaptive construction of IC-POVMs tailored to specific states | Optimizes measurement efficiency for target states |
| Data Reuse | Using same measurement data for both energy and gradient estimation | Eliminates need for additional measurements for operator selection |
| Classical Post-processing | Computation of commutators from IC measurement data | Shifts computational burden from quantum to classical resources |

Experimental Performance and Validation

Numerical Simulation Results

The AIM-ADAPT-VQE approach has been validated through numerical simulations on various molecular systems. Research by Nykänen et al. demonstrated that for several H₄ Hamiltonians and different operator pools, the measurement data obtained for energy evaluation could be reused to implement ADAPT-VQE with no additional measurement overhead [8] [10]. The simulations confirmed that when the energy is measured within chemical precision (1.6 mHa or 1 kcal/mol), the CNOT gate counts in the resulting circuits closely approximate the ideal values achievable with noiseless computations.

Notably, the AIM-ADAPT-VQE protocol maintained robust performance even with scarce measurement data, though in some cases this led to increased circuit depths. The approach successfully converged to the ground state with high probability across the tested systems, demonstrating the practical viability of the method for near-term quantum devices [8].

Comparative Performance Analysis

Table 2: Performance Comparison of ADAPT-VQE Variants for Molecular Simulations

| Algorithm | Measurement Overhead | Circuit Depth | Key Advantages | Limitations |
|---|---|---|---|---|
| Standard ADAPT-VQE [6] | High (gradient measurements scale with pool size) | Low | Simple implementation, well-established convergence behavior | Measurement-intensive |
| AIM-ADAPT-VQE [8] [10] | Minimal (reuses energy measurement data) | Low to Moderate | Dramatic reduction in measurement requirements | Requires implementation of IC-POVMs |
| Qubit-ADAPT-VQE [7] | Moderate (pool size can be reduced) | Very Low | Hardware-efficient operators | May require more iterations |
| Batched ADAPT-VQE [7] | Reduced (adds multiple operators per iteration) | Moderate | Fewer gradient computation cycles | Potential ansatz redundancy |

The performance advantages of AIM-ADAPT-VQE are particularly pronounced when compared to conventional ADAPT-VQE implementations. For the H₄ system, AIM-ADAPT-VQE achieved identical convergence patterns to standard ADAPT-VQE while eliminating the measurement overhead for operator selection entirely [10]. This result holds significant implications for scaling quantum computational chemistry to larger molecular systems where measurement costs would otherwise become prohibitive.

Research Reagent Solutions: Essential Methodological Components

Table 3: Essential Research Components for IC Measurement Implementation

| Component | Function | Implementation Considerations |
|---|---|---|
| Dilation POVMs | Practical implementation of IC-POVMs | Uses auxiliary qubits to realize generalized measurements |
| Operator Pools | Set of operators for ansatz construction | Can be fermionic (UCCSD-based) or qubit (Pauli strings) |
| Classical Reconstruction Algorithms | Estimating expectation values from IC data | Statistical analysis techniques for efficient estimation |
| Symmetry-Adapted Pools [11] | Incorporating molecular symmetries | Reduces pool size while maintaining convergence |
| Qubit Tapering Techniques [7] | Reducing qubit requirements | Exploits conservation laws to reduce problem size |

Experimental Protocol for AIM-ADAPT-VQE Implementation

Step-by-Step Implementation Guide

Molecular System Preparation:

- Compute molecular integrals (one- and two-electron integrals) using classical electronic structure methods

- Transform the Hamiltonian to qubit representation using Jordan-Wigner or Bravyi-Kitaev transformation

- Apply qubit tapering techniques to reduce the problem size by exploiting symmetries [7]

IC-POVM Configuration:

- Select appropriate IC-POVM implementation (dilation POVMs recommended for practicality)

- Configure adaptive measurement protocol with initial measurement directions based on reference state

Operator Pool Design:

- Construct symmetry-adapted complete pool following methodologies from Shkolnikov et al. [11]

- Ensure pool completeness while maintaining minimal size (2n-2 for n qubits is theoretically minimal)

- Verify that pool operators respect molecular symmetries to avoid convergence roadblocks

Iterative AIM-ADAPT-VQE Execution:

- For each iteration, perform AIM measurements on current variational state

- Reuse measurement data to compute energy and all pool operator gradients

- Select operator with largest gradient magnitude for ansatz expansion

- Optimize all parameters in the expanded ansatz using classical optimizers

- Check convergence criteria (gradient norms < threshold)
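The iteration loop above can be sketched with exact statevector expectation values standing in for the AIM estimates (an assumption: no IC-POVM sampling is simulated here). The two-qubit Hamiltonian, the pool of anti-Hermitian operators iP, and the function names are hypothetical toy choices; each candidate's gradient is ⟨ψ|[H, A]|ψ⟩ at zero parameter, and the loop stops when the gradient norm falls below a threshold.

```python
import numpy as np
from scipy.linalg import expm
from scipy.optimize import minimize

PAULI = {
    "I": np.eye(2, dtype=complex),
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}


def pauli_matrix(label):
    """Dense matrix of a Pauli string, qubit 0 leftmost."""
    mat = np.array([[1.0 + 0j]])
    for letter in label:
        mat = np.kron(mat, PAULI[letter])
    return mat


def prepare(params, ops, ref):
    """|psi> = exp(theta_N A_N) ... exp(theta_1 A_1) |ref>."""
    state = ref
    for theta, a in zip(params, ops):
        state = expm(theta * a) @ state
    return state


def energy(params, ops, ref, h):
    psi = prepare(params, ops, ref)
    return float(np.real(np.vdot(psi, h @ psi)))


def adapt_vqe(h, pool, ref, grad_tol=1e-4, max_iter=10):
    ops, params = [], []
    for _ in range(max_iter):
        psi = prepare(params, ops, ref)
        # Exact stand-in for the measured gradients <psi|[H, A]|psi> of every pool operator
        grads = [np.real(np.vdot(psi, (h @ a - a @ h) @ psi)) for a in pool]
        if np.linalg.norm(grads) < grad_tol:
            break  # converged: gradient norm below threshold
        best = int(np.argmax(np.abs(grads)))
        ops.append(pool[best])
        # Re-optimize all parameters, starting the new one at zero
        result = minimize(energy, np.array(params + [0.0]), args=(ops, ref, h), method="BFGS")
        params = list(result.x)
    return energy(params, ops, ref, h), ops, params


# Hypothetical toy problem: 2 qubits, reference |01>, pool of anti-Hermitian iP operators
h = 0.2 * (pauli_matrix("ZI") + pauli_matrix("IZ")) + 0.4 * (pauli_matrix("XX") + pauli_matrix("YY"))
pool = [1j * pauli_matrix(p) for p in ("XY", "YX", "YI", "IY")]
ref = np.zeros(4, dtype=complex)
ref[1] = 1.0
final_energy, chosen_ops, final_params = adapt_vqe(h, pool, ref)
```

For this toy problem a single operator suffices, and `final_energy` should agree with the lowest eigenvalue of `h` returned by `np.linalg.eigvalsh(h)`.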

Result Validation:

- Compare final energy with classical reference values (Full CI when feasible)

- Verify achievement of chemical accuracy (1.6 mHa or 1 kcal/mol)

- Analyze circuit efficiency metrics (CNOT count, circuit depth)
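The two chemical-accuracy thresholds quoted above are the same quantity in different units, using 1 E_h ≈ 627.5095 kcal/mol:

```latex
1~\text{kcal/mol} = \frac{1}{627.5095}~E_h \approx 1.594 \times 10^{-3}~E_h \approx 1.6~\text{mHa},
\qquad \text{so the check is } \left| E_{\text{ADAPT}} - E_{\text{FCI}} \right| \le 1.6~\text{mHa}.
```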


Integration with Broader ADAPT-VQE Research Landscape

The development of IC measurement techniques represents one of several complementary approaches to reducing the resource requirements of ADAPT-VQE. Recent research has demonstrated dramatic improvements across multiple dimensions:

Operator Pool Optimizations: The introduction of Coupled Exchange Operator (CEO) pools has reduced CNOT counts by up to 88%, CNOT depths by up to 96%, and measurement costs by up to 99.6% for molecules represented by 12-14 qubits compared to early ADAPT-VQE versions [2].

Gradient-Free Approaches: Greedy Gradient-free Adaptive VQE (GGA-VQE) eliminates the need for gradient measurements entirely, instead using analytical landscape functions determined from a fixed number of measurements [14].

Batching Strategies: Adding multiple operators per iteration ("batched ADAPT-VQE") reduces the number of gradient computation cycles, significantly lowering measurement overhead [7].

Pool Completeness Theories: Establishing minimal complete pool sizes (2n-2 for n qubits) and symmetry-adaptation rules ensures convergence while minimizing measurement requirements [11].
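A minimal sketch of a batched selection step, assuming the batch is simply the k operators with the largest gradient magnitudes above an optional threshold; the function name and the threshold rule are hypothetical, and the batching criteria used in [7] may differ.

```python
def select_batch(gradients, batch_size, threshold=0.0):
    """Indices of up to batch_size pool operators with the largest |gradient| above threshold."""
    ranked = sorted(range(len(gradients)), key=lambda i: abs(gradients[i]), reverse=True)
    return [i for i in ranked[:batch_size] if abs(gradients[i]) > threshold]


# One gradient round now adds two operators instead of one
chosen = select_batch([0.1, -0.5, 0.02, 0.3], batch_size=2)  # -> [1, 3]
```

Because one round of gradient measurements now extends the ansatz by up to `batch_size` operators, the number of gradient rounds needed for a given final ansatz length drops roughly by that factor, at the risk of adding operators that a fresh gradient evaluation would not have chosen.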

IC measurement techniques integrate synergistically with these developments. For instance, AIM frameworks can be combined with optimized operator pools to achieve multiplicative reductions in quantum resource requirements. Similarly, IC data reuse principles could potentially enhance gradient-free approaches by providing more comprehensive information for operator selection.

Leveraging informationally complete (IC) measurements for data reuse represents a transformative approach to mitigating the measurement overhead in ADAPT-VQE algorithms. By enabling the reuse of energy measurement data for gradient estimations, the AIM-ADAPT-VQE protocol achieves dramatic reductions in quantum resource requirements while maintaining the accuracy and convergence properties of the standard algorithm.

The experimental validation of this approach on small molecular systems demonstrates its potential for practical quantum computational chemistry applications. As quantum hardware continues to advance, integrating IC measurement strategies with other resource reduction techniques—including optimized operator pools, batched operator additions, and symmetry exploitation—will be crucial for scaling quantum simulations to industrially relevant molecular systems.

Future research directions should focus on developing more efficient IC-POVM implementations tailored specifically for molecular systems, optimizing classical post-processing algorithms for gradient computations, and exploring hybrid approaches that combine the strengths of IC measurements with other measurement reduction strategies. Such integrated approaches will be essential for realizing the potential of quantum computers to solve challenging problems in quantum chemistry and drug development.

Reusing Pauli Measurements Between VQE Optimization and ADAPT Selection

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) represents a significant advancement in quantum algorithms for molecular simulation on noisy intermediate-scale quantum (NISQ) devices. Unlike standard VQE approaches that use a fixed, pre-selected circuit ansatz, ADAPT-VQE dynamically constructs the ansatz by iteratively adding parameterized unitary gates from a predefined operator pool [3]. This adaptive construction generates circuits with significantly reduced depth compared to methods like unitary coupled cluster singles and doubles (UCCSD), while maintaining high accuracy and potentially avoiding the barren plateau problem that plagues many hardware-efficient ansätze [2] [3]. However, this improved performance comes at a substantial cost: a dramatically increased quantum measurement overhead compared to standard VQE [11].

The measurement overhead in ADAPT-VQE arises from two measurement-intensive processes that occur at each iteration: the variational optimization of circuit parameters to minimize energy, and the evaluation of gradients for all operators in the pool to select the next operator to add to the circuit [6]. In classical simulations using state-vector methods, these expectation values are computed exactly, but on actual quantum hardware, they require numerous repeated circuit executions (shots) to achieve sufficient statistical precision [16]. This overhead presents a major bottleneck for practical applications of ADAPT-VQE on current quantum devices, sparking significant research interest in mitigation strategies [2].

Among the various approaches proposed to reduce this measurement burden, one particularly promising strategy involves reusing Pauli measurement outcomes obtained during the VQE parameter optimization phase for the subsequent operator selection step. This approach, recently investigated by Ikhtiarudin et al., leverages the inherent redundancy in measurement requirements between these two stages of the algorithm [6]. By systematically repurposing previously collected measurement data, this method can significantly reduce the total shot count required for ADAPT-VQE convergence without compromising the accuracy of the final result.

The Mechanism of Pauli Measurement Reuse

Theoretical Foundation

The core insight behind Pauli measurement reuse stems from analyzing the mathematical structure of the measurements required in different stages of ADAPT-VQE. In the standard ADAPT-VQE algorithm, each iteration involves two distinct measurement-intensive phases:

Parameter optimization: The energy expectation value \( E(\theta) = \langle \psi(\theta) | H | \psi(\theta) \rangle \) is measured for the current ansatz state \( |\psi(\theta)\rangle \) with parameters \( \theta \), where \( H = \sum_k c_k P_k \) is the qubit-mapped molecular Hamiltonian expressed as a sum of Pauli strings \( P_k \) with coefficients \( c_k \) [6].

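Operator selection: For every operator \( A_i \) in the pool, the gradient \( \frac{\partial E}{\partial \theta_i} = \langle \psi | [H, A_i] | \psi \rangle \) is measured, where \( [H, A_i] \) is the commutator of the Hamiltonian with the pool operator \( A_i \) and the derivative is taken with respect to the parameter \( \theta_i \) of \( A_i \) appended to the current ansatz, evaluated at \( \theta_i = 0 \) [6].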
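Assuming, as in standard ADAPT-VQE, that each pool operator is anti-Hermitian and is appended as \( e^{\theta_i A_i} \) to the current state \( |\psi\rangle \), the gradient expression follows in one line. The last row specializes to a qubit pool of Pauli strings \( Q_i \) and shows why each commutator is again a sum of Pauli strings: only the \( P_k \) that anticommute with \( Q_i \) contribute.

```latex
\begin{aligned}
E(\theta_i) &= \langle \psi | e^{-\theta_i A_i} \, H \, e^{\theta_i A_i} | \psi \rangle,
  \qquad A_i^\dagger = -A_i, \\
\left.\frac{\partial E}{\partial \theta_i}\right|_{\theta_i = 0}
  &= \langle \psi | \left( H A_i - A_i H \right) | \psi \rangle
   = \langle \psi | [H, A_i] | \psi \rangle, \\
[H, A_i] &= \sum_k c_k \, [P_k, A_i]
  = 2i \sum_{k:\ \{P_k, Q_i\} = 0} c_k \, P_k Q_i
  \quad \text{for a Pauli pool operator } A_i = i Q_i .
\end{aligned}
```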

The key observation is that each commutator \( [H, A_i] \) can itself be expressed as a sum of Pauli strings, and there is often significant overlap between the Pauli strings appearing in \( H \) and those appearing in the various commutators \( [H, A_i] \) [6]. Because the gradients are evaluated on the same state \( |\psi(\theta^*)\rangle \) reached at the optimized parameters \( \theta^* \), measurement outcomes obtained for the final energy estimate during parameter optimization can be directly reused for gradient calculations in the operator selection step, provided the same Pauli strings appear in both expressions.
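The overlap analysis can be illustrated for a qubit pool of Pauli strings, assuming each pool operator has the form A_i = iQ_i (a fermionic pool would first be mapped to sums of such strings). The sketch expands [H, iQ] using the rule that only anticommuting terms survive, then splits the resulting strings into those already measured for the energy and those needing new circuits; the toy Hamiltonian and all names are hypothetical.

```python
# Single-qubit Pauli products: (a, b) -> (phase, c) with a * b = phase * c
_PAULI_MUL = {
    ("I", "I"): (1, "I"), ("I", "X"): (1, "X"), ("I", "Y"): (1, "Y"), ("I", "Z"): (1, "Z"),
    ("X", "I"): (1, "X"), ("X", "X"): (1, "I"), ("X", "Y"): (1j, "Z"), ("X", "Z"): (-1j, "Y"),
    ("Y", "I"): (1, "Y"), ("Y", "X"): (-1j, "Z"), ("Y", "Y"): (1, "I"), ("Y", "Z"): (1j, "X"),
    ("Z", "I"): (1, "Z"), ("Z", "X"): (1j, "Y"), ("Z", "Y"): (-1j, "X"), ("Z", "Z"): (1, "I"),
}


def anticommute(p, q):
    """Pauli strings anticommute iff they differ (both non-identity) on an odd number of qubits."""
    clashes = sum(1 for a, b in zip(p, q) if a != "I" and b != "I" and a != b)
    return clashes % 2 == 1


def pauli_product(p, q):
    """Return (phase, r) such that p * q = phase * r."""
    phase, letters = 1, []
    for a, b in zip(p, q):
        ph, c = _PAULI_MUL[(a, b)]
        phase *= ph
        letters.append(c)
    return phase, "".join(letters)


def commutator_terms(hamiltonian, q):
    """Pauli expansion of [H, iQ] = sum over anticommuting P_k of 2i c_k P_k Q."""
    terms = {}
    for p, c in hamiltonian.items():
        if anticommute(p, q):
            phase, r = pauli_product(p, q)
            terms[r] = terms.get(r, 0) + 2j * c * phase
    return {r: v for r, v in terms.items() if abs(v) > 1e-12}


def overlap_report(hamiltonian, pool):
    """For each pool string Q: (strings of [H, iQ] already in H, strings needing new measurements)."""
    h_strings = set(hamiltonian)
    report = {}
    for q in pool:
        strings = set(commutator_terms(hamiltonian, q))
        report[q] = (strings & h_strings, strings - h_strings)
    return report


# Toy two-qubit Hamiltonian and pool (hypothetical values)
h = {"ZI": 0.2, "IZ": 0.2, "XX": 0.4, "YY": 0.4}
report = overlap_report(h, ["XY", "YI"])
```

Here every string of [H, iXY] (ZI, IZ, XX, YY) is already measured for the energy, while [H, iYI] needs two new strings, XI and ZX. Since this analysis depends only on H and the pool, it can be run once before the algorithm starts.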

Implementation Methodology

The implementation of Pauli measurement reuse involves the following steps:

Pauli string analysis: Before running the ADAPT-VQE algorithm, analyze the Hamiltonian \( H \) and all commutators \( [H, A_i] \) for operators \( A_i \) in the pool to identify overlapping Pauli strings. This analysis needs to be performed only once during the initial setup [6].

Measurement collection during VQE: During the parameter optimization phase, collect and store measurement outcomes for all Pauli strings in the Hamiltonian.

Data reuse for gradient estimation: When estimating gradients for operator selection, reuse the stored measurement outcomes for any Pauli string that appears in both \( H \) and \( [H, A_i] \), and perform new measurements only for the Pauli strings in \( [H, A_i] \) that are not present in \( H \).
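The three steps can be sketched as a small cache keyed by Pauli string. The class and function names are hypothetical, `measure` stands for whatever routine executes circuits and returns a (mean, shots) pair, and the cache is assumed to hold data for one fixed state only, so it must be rebuilt after every parameter optimization. As an extra assumption of this sketch, strings measured for one commutator are also cached so that later pool operators can share them.

```python
from typing import Callable, Dict, List, Optional, Tuple


class PauliMeasurementStore:
    """Hypothetical cache of Pauli-string estimates for ONE fixed circuit state."""

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[float, int]] = {}  # pauli -> (mean, shots)

    def record(self, pauli: str, mean: float, shots: int) -> None:
        self._data[pauli] = (mean, shots)

    def get(self, pauli: str) -> Optional[Tuple[float, int]]:
        return self._data.get(pauli)


def estimate_gradient(
    commutator: Dict[str, complex],
    store: PauliMeasurementStore,
    measure: Callable[[str], Tuple[float, int]],
) -> Tuple[float, List[str]]:
    """<[H, A_i]> = sum_r d_r <R_r>; reuse stored <R_r>, measure only missing strings."""
    total, new_strings = 0.0, []
    for pauli, coeff in commutator.items():
        cached = store.get(pauli)
        if cached is None:
            cached = measure(pauli)  # new circuit executions only for non-overlapping strings
            store.record(pauli, *cached)
            new_strings.append(pauli)
        total += (coeff * cached[0]).real  # commutator coefficients may be stored as complex
    return total, new_strings


# Energy-phase data (exact values for |01> under a toy Hamiltonian), then one fully reused gradient
store = PauliMeasurementStore()
for p, v in {"ZI": 1.0, "IZ": -1.0, "XX": 0.0, "YY": 0.0}.items():
    store.record(p, v, 1000)
calls = []
grad, new = estimate_gradient({"YY": -0.4, "XX": 0.4, "IZ": -0.8, "ZI": 0.8}, store,
                              lambda p: calls.append(p) or (0.0, 1000))
```

In this example every string of the commutator is already in the store, so `calls` stays empty and the gradient is assembled purely from energy-phase data.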

This strategy differs fundamentally from approaches based on informationally complete generalized measurements, such as AIM-ADAPT-VQE, which employ specialized positive operator-valued measures (POVMs) whose outcomes allow the entire quantum state, and hence any observable, to be reconstructed [10] [8]. The Pauli reuse method retains standard Pauli measurements (single-qubit basis rotations followed by computational-basis readout) and specifically targets the redundancy between Hamiltonian and commutator measurements [6].

Figure 1: Workflow of Pauli measurement reuse between VQE optimization and operator selection phases in ADAPT-VQE

Integrated Shot Reduction Framework

Complementary Measurement Optimization Strategies

The Pauli measurement reuse strategy is particularly effective when combined with other shot-reduction techniques. Ikhtiarudin et al. demonstrated that integrating Pauli reuse with variance-based shot allocation creates a powerful framework for minimizing overall measurement costs [6]. This combined approach addresses different aspects of the measurement overhead problem:

Pauli measurement reuse reduces the number of distinct measurements required by eliminating redundant evaluations of the same Pauli strings across different stages of the algorithm [6].

Variance-based shot allocation optimizes the distribution of a fixed shot budget across the necessary measurements, assigning more shots to terms with higher variance and fewer to terms with lower variance [6].
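A common form of variance-based allocation follows from minimizing the estimator variance Σ_k c_k² σ_k² / N_k under a fixed budget Σ_k N_k = N, which gives N_k ∝ |c_k| σ_k. The sketch below implements that rule with hypothetical names; the exact allocation scheme of [6] may differ.

```python
import math


def allocate_shots(coeffs, variances, total_shots):
    """Split total_shots over terms with N_k proportional to |c_k| * sigma_k.

    This choice minimizes sum_k c_k^2 sigma_k^2 / N_k for a fixed total budget.
    """
    weights = [abs(c) * math.sqrt(max(v, 0.0)) for c, v in zip(coeffs, variances)]
    norm = sum(weights)
    if norm == 0.0:
        # Degenerate case: fall back to a uniform split
        weights, norm = [1.0] * len(coeffs), float(len(coeffs))
    exact = [total_shots * w / norm for w in weights]
    shots = [math.floor(x) for x in exact]
    # Give leftover shots to the largest fractional parts so the budget is met exactly
    order = sorted(range(len(exact)), key=lambda i: exact[i] - shots[i], reverse=True)
    for i in order[: total_shots - sum(shots)]:
        shots[i] += 1
    return shots


def pauli_variance(mean):
    """Single-shot variance of a +/-1-valued Pauli measurement with the given mean."""
    return 1.0 - mean ** 2


# Usage: a term with estimated mean 1.0 has zero variance and receives no shots
plan = allocate_shots([0.5, 0.25, 0.25],
                      [pauli_variance(0.0), pauli_variance(0.0), pauli_variance(1.0)], 900)
```

In practice the variances are themselves estimated from earlier samples, so a small minimum number of shots per term is usually kept to avoid starving a term whose variance was underestimated.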

This integrated methodology can be further enhanced by employing commutativity-based grouping techniques, such as qubit-wise commutativity (QWC), which allows multiple Pauli measurements to be performed simultaneously [6]. The compatibility of Pauli reuse with such grouping methods creates a comprehensive strategy for shot efficiency.
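Greedy grouping is one common way to apply qubit-wise commutativity; the sketch below is that heuristic with hypothetical names, not necessarily the grouping routine used in [6]. Two strings are QWC when, on every qubit, their letters agree or one is the identity, so a whole group can be read out in one shared single-qubit basis.

```python
def qubit_wise_commute(p, q):
    """True if on every qubit the letters agree or at least one is the identity."""
    return all(a == b or a == "I" or b == "I" for a, b in zip(p, q))


def greedy_qwc_groups(paulis):
    """Greedily place each string (heaviest first) into the first compatible group."""
    groups = []
    for p in sorted(paulis, key=lambda s: -sum(ch != "I" for ch in s)):
        for group in groups:
            if all(qubit_wise_commute(p, q) for q in group):
                group.append(p)
                break
        else:
            groups.append([p])
    return groups


def measurement_basis(group):
    """Shared readout basis of a QWC group: the non-identity letter per qubit (Z if none)."""
    basis = ["Z"] * len(group[0])
    for p in group:
        for i, ch in enumerate(p):
            if ch != "I":
                basis[i] = ch
    return "".join(basis)


groups = greedy_qwc_groups(["ZI", "IZ", "XX", "YY", "ZZ"])
```

Here five strings collapse into three measurement settings, with ZZ, ZI and IZ all read out in the ZZ basis; the outcomes of a setting can then be stored per string and reused exactly as in the ungrouped case.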

Comparative Performance of Shot Reduction Methods

Table 1: Comparison of shot reduction techniques for ADAPT-VQE

| Method | Key Mechanism | Reported Shot Reduction | Limitations/Considerations |
|---|---|---|---|
| Pauli Measurement Reuse [6] | Reuses Pauli measurements from VQE optimization in gradient evaluation | 32.29% of naive approach (when combined with grouping) | Requires overlapping Pauli strings between Hamiltonian and commutators |
| Variance-Based Shot Allocation [6] | Allocates shots based on term variance | 43.21% for H₂, 51.23% for LiH (vs uniform allocation) | Requires variance estimation |
| AIM-ADAPT-VQE [10] [8] | Uses informationally complete POVMs to reconstruct state | Near elimination of overhead for small systems | Scalability challenges for large systems |
| Minimal Complete Pools [11] | Reduces pool size to 2n-2 operators | Linear instead of quartic scaling | Must account for molecular symmetries |
| CEO-ADAPT-VQE* [2] | Novel operator pool with improved subroutines | 99.6% reduction in measurement costs | Combined effect of multiple optimizations |

The performance of Pauli measurement reuse has been quantitatively evaluated across various molecular systems. Numerical simulations demonstrate that when combined with measurement grouping, this approach reduces average shot usage to approximately 32.29% of the naive full measurement scheme [6]. Even without reuse, measurement grouping alone (using qubit-wise commutativity) reduces shots to about 38.59% of the baseline, indicating that both strategies contribute significantly to overall efficiency [6].
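Reading the two figures together, and assuming both are percentages of the same naive baseline, reuse removes roughly a further 16% of the shots that remain after grouping:

```latex
\frac{38.59\% - 32.29\%}{38.59\%} = \frac{6.30}{38.59} \approx 0.163
```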

Experimental Protocols and Validation

Implementation Framework

To implement and validate the Pauli measurement reuse strategy, researchers have established specific experimental protocols:

System Preparation:

- Select molecular systems ranging from simple (H₂, 4 qubits) to more complex (BeH₂, 14 qubits; N₂H₄, 16 qubits) to test scalability [6].

- Generate the molecular Hamiltonian in the second quantized form and map it to qubit operators using Jordan-Wigner or Bravyi-Kitaev transformation [6].

- Prepare an operator pool, typically consisting of fermionic excitation operators or their qubit-adapted versions [6].

Algorithm Execution:

- Initialize with a reference state (typically Hartree-Fock) [17].

- For each ADAPT-VQE iteration:

  - Perform VQE parameter optimization, collecting Pauli measurement outcomes for all Hamiltonian terms

  - Store all measurement outcomes with their associated Pauli strings

  - Look up the overlapping Pauli strings between the Hamiltonian and each commutator (identified once during setup)

  - Reuse the stored outcomes for overlapping strings and measure only the remaining strings to compute the gradients

  - Select the operator with the largest gradient magnitude for ansatz expansion [6]

Performance Assessment:

- Compare total shot count against baseline ADAPT-VQE without reuse

- Verify convergence to chemically accurate energies (1.6 mHa or ~1 kcal/mol error)

- Evaluate circuit depth and parameter count in the final ansatz [6]

The Scientist's Toolkit: Essential Research Components

Table 2: Key components for implementing Pauli measurement reuse in ADAPT-VQE

| Component | Function | Implementation Notes |
|---|---|---|
| Pauli String Analyzer | Identifies overlapping Pauli terms between Hamiltonian and commutators | Precomputation step; uses symbolic algebra |
| Measurement Storage Database | Archives Pauli measurement outcomes with metadata | Efficient indexing for rapid retrieval |
| Commutator Calculator | Computes [H, Aᵢ] for all pool operators Aᵢ | Symbolic computation; can be resource-intensive for large pools |
| Variance Estimator | Estimates variances of Pauli terms for shot allocation | Can use initial samples or historical data |
| Qubit-Wise Commutativity (QWC) Grouper | Groups commuting Pauli terms for simultaneous measurement | Compatible with Pauli reuse strategy |
| Shot Allocation Optimizer | Distributes shot budget based on term variances | Implements optimal allocation formulas |


Implications for Quantum Computational Chemistry