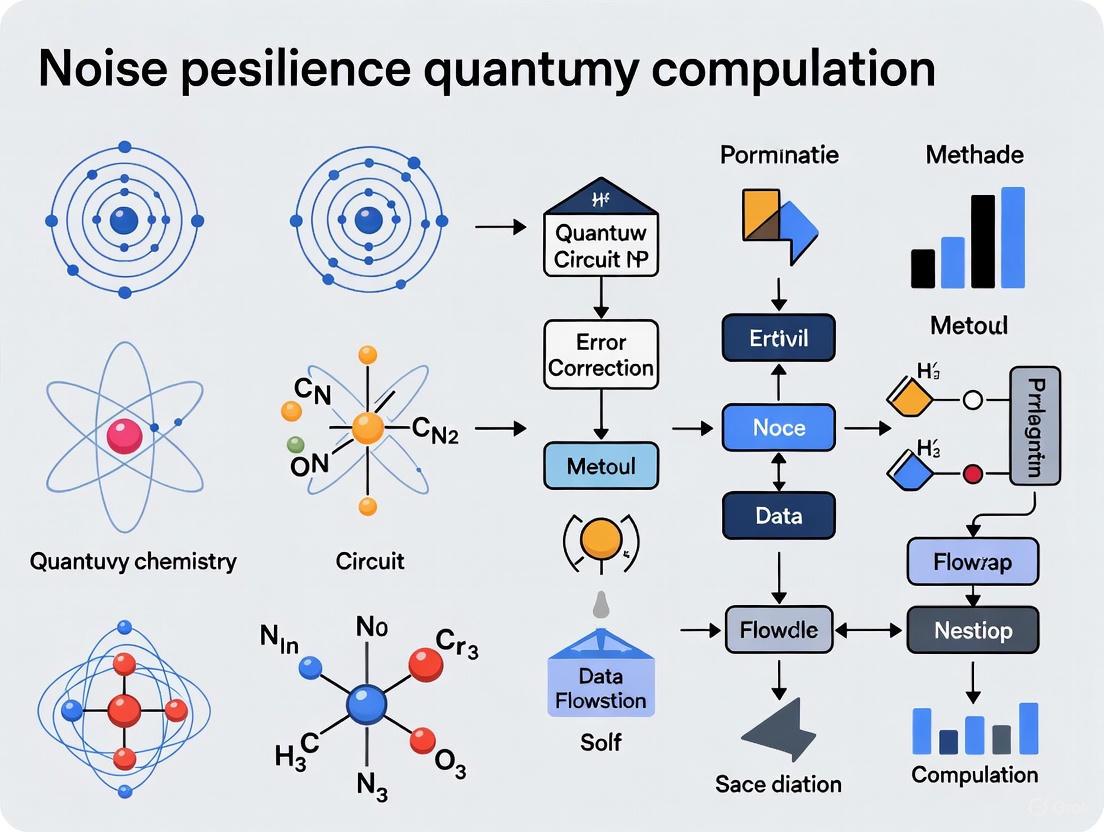

Noise-Resilient Quantum Chemistry: Overcoming NISQ Limitations for Drug Discovery and Materials Design

Abstract

This article explores the critical challenge of noise resilience in quantum computational chemistry, a fundamental barrier to achieving practical quantum advantage on Noisy Intermediate-Scale Quantum (NISQ) devices. We systematically examine foundational noise sources impacting variational algorithms, detail emerging methodological breakthroughs in hardware design and algorithmic error mitigation, and provide optimization strategies for enhancing computational fidelity. Through validation case studies from real-world drug discovery pipelines, we demonstrate how hybrid quantum-classical approaches are already enabling accurate chemical reaction modeling and binding affinity prediction. This comprehensive analysis equips researchers and pharmaceutical professionals with a roadmap for leveraging current quantum computing capabilities while outlining the path toward fault-tolerant quantum chemistry simulations.

Understanding Quantum Noise: The Fundamental Challenge in Chemical Computations

Welcome to the Technical Support Center for Quantum Computational Chemistry. This resource is designed for researchers, scientists, and drug development professionals navigating the challenges of the Noisy Intermediate-Scale Quantum (NISQ) era. Current quantum hardware, typically comprising 50 to 1,000 qubits with gate fidelities between 95-99.9%, is inherently prone to errors from decoherence, gate imperfections, and environmental interference [1] [2]. For quantum chemistry applications, this noise manifests as significant errors in calculating critical properties like molecular ground-state energies and spectroscopic signals, often overwhelming the desired computational result [1] [3]. This guide provides actionable troubleshooting methodologies and error mitigation protocols to enhance the reliability of your computations within a research framework focused on noise resilience.

Frequently Asked Questions (FAQs)

Q1: What are the primary sources of noise affecting variational quantum eigensolver (VQE) calculations on NISQ devices?

The performance of VQE, a key algorithm for finding molecular ground-state energies, is degraded by several interconnected noise sources [1]:

- Gate Infidelities: Single- and two-qubit gate operations are imperfect. With two-qubit gate fidelities typically at 95-99%, errors accumulate rapidly in deep quantum circuits [1].

- Decoherence: Qubits lose their quantum state over time through interactions with the environment. The coherence time therefore caps the maximum feasible circuit depth [1] [2].

- State Preparation and Measurement (SPAM) Errors: Errors occur when initializing qubits into a starting state and when reading out the final state [4].

- Pauli Errors: The average noise in a multi-qubit system can be approximated as a Pauli channel, in which multi-qubit Pauli operators (e.g., $\sigma_z^{(1)} \otimes \mathbf{1}^{(2)} \otimes \sigma_x^{(3)}$) introduce correlated errors across the processor [4].
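Written out, a Pauli channel acting on an $n$-qubit state $\rho$ applies each $n$-qubit Pauli string $P$ with probability $p_P$ (this is the standard definition; the example operator in the list above is one such string for $n = 3$):

$$
\mathcal{E}(\rho) = \sum_{P \in \{\mathbf{1}, \sigma_x, \sigma_y, \sigma_z\}^{\otimes n}} p_P \, P \rho P^\dagger, \qquad p_P \ge 0, \quad \sum_P p_P = 1
$$

Strings with nonzero $p_P$ that act nontrivially on several qubits at once describe errors that strike those qubits together, which is what makes the noise spatially correlated.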

Q2: Our results from the Quantum Approximate Optimization Algorithm (QAOA) for molecular configuration are inconsistent. How can we determine if the problem is hardware noise or the algorithm itself?

Diagnosing the source of inconsistency requires a structured benchmarking approach:

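- Classical Simulation: First, run the same QAOA circuit on a noiseless classical simulator. If the simulated results are consistent while the hardware results are not, hardware noise is the likely culprit; if the simulation is also inconsistent, look at the algorithm itself (e.g., parameter initialization or the classical optimizer) [5].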

- Vary Circuit Depth ($p$): On the quantum hardware, run your problem for different QAOA depths $p$. Noise effects accumulate with deeper circuits. If performance degrades significantly as $p$ increases, hardware noise is a likely factor [1].

- Use Error Mitigation: Apply a simple error mitigation technique like Zero-Noise Extrapolation (ZNE) to your circuit [1]. If the results become more stable and accurate, it confirms that hardware noise was a major contributor to the inconsistency [5].

Q3: What is the practical difference between Quantum Error Correction (QEC) and Quantum Error Mitigation (QEM) for near-term chemistry experiments?

The choice between QEC and QEM is a fundamental one in the NISQ era, dictated by current hardware limitations [6].

Table: QEC vs. QEM for Chemistry Applications

| Feature | Quantum Error Correction (QEC) | Quantum Error Mitigation (QEM) |
|---|---|---|
| Core Principle | Actively detects and corrects errors during circuit execution by encoding each logical qubit redundantly across many physical qubits [1]. | Applies post-processing to measurement results from noisy circuits; no correction during execution [1] [4]. |
| Hardware Overhead | Very high (requires many physical qubits per logical qubit) [1]. | Low in qubits (uses the same number of qubits as the original circuit), but usually requires many more circuit executions. |
| Current Feasibility | Not yet scalable for general algorithms; proof-of-concept demonstrations exist [5]. | The primary, practical tool for NISQ-era chemistry computations [1] [6]. |
| Best For | Long-term, fault-tolerant quantum computing. | Near-term experiments on today's hardware to improve result accuracy [3]. |

Q4: Can we use entangled qubits for more sensitive quantum sensing of molecular properties, and how does noise impact this?

Yes, leveraging entanglement can significantly enhance the sensitivity of quantum sensors for detecting subtle molecular fields (e.g., weak magnetic fields). A group of ( N ) entangled qubits can be up to ( N ) times more sensitive than a single qubit, outperforming a group of unentangled qubits, which only provide a ( \sqrt{N} ) improvement [7] [8]. However, entangling qubits also makes them more vulnerable to collective environmental noise. Recent theoretical advances suggest that using partial quantum error correction—designing the entangled sensor to correct only the most critical errors—creates a robust sensor that maintains a quantum advantage over unentangled approaches, even if it sacrifices a small amount of peak sensitivity [7] [8].
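In terms of the phase (or field) uncertainty $\Delta\phi$ achievable with $N$ qubits and a fixed number of repetitions, the two scalings quoted above are the standard quantum limit and the Heisenberg limit:

$$
\Delta\phi_{\text{unentangled}} \propto \frac{1}{\sqrt{N}} \quad \text{(standard quantum limit)}, \qquad
\Delta\phi_{\text{entangled}} \propto \frac{1}{N} \quad \text{(Heisenberg limit)}
$$

For $N = 100$, that is roughly a 10-fold versus a 100-fold improvement over a single qubit.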

Troubleshooting Guides

Guide 1: Mitigating State Preparation and Measurement (SPAM) Errors

SPAM errors can skew your results from the very beginning and end of a computation. This protocol helps characterize and correct for them [4].

Symptoms: Inconsistent results even for very shallow circuits; significant deviation from simulated results in state tomography.

Step-by-Step Protocol:

- Construct the Measurement Error Matrix ($E_{meas}$):
  - For an $n$-qubit system, prepare each of the $2^n$ computational basis states (e.g., $|00...0\rangle$, $|00...1\rangle$, ..., $|11...1\rangle$).
  - For each prepared state, run a simple "do-nothing" circuit and immediately measure.
  - Repeat each measurement many times to build a probability distribution. The frequency of measuring state $j$ after preparing state $i$ forms the matrix element $(E_{meas})_{ji}$.
  - This results in a column-stochastic matrix $E_{meas}$ that describes the probability of mis-measuring one state as another [4].

- Apply the Inverse to Mitigate Errors:
  - For any subsequent experiment, let $\vec{C}_{noisy}$ be the vector of measured probabilities.
  - The error-mitigated probability vector is obtained by solving the linear system $E_{meas} \vec{C}_{mitigated} = \vec{C}_{noisy}$ for $\vec{C}_{mitigated}$ [4].
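A minimal NumPy sketch of the two steps above, assuming calibration counts are stored as a square array indexed by (prepared state, measured state). The function names and example numbers are hypothetical. Solving the linear system directly can yield small negative quasi-probabilities because of shot noise; the clip-and-renormalize step below is one simple fix, and constrained least squares is a common alternative.

```python
import numpy as np


def build_error_matrix(calibration_counts: np.ndarray) -> np.ndarray:
    """Return E_meas with E[j, i] = P(measure j | prepared i).

    calibration_counts[i, j] counts how often basis state j was read out after
    preparing basis state i. Normalising each row and transposing makes every
    column of E_meas sum to 1 (column-stochastic).
    """
    probs = calibration_counts / calibration_counts.sum(axis=1, keepdims=True)
    return probs.T


def mitigate(e_meas: np.ndarray, c_noisy: np.ndarray) -> np.ndarray:
    """Solve E_meas @ c_mitigated = c_noisy, then clip negatives and renormalise."""
    c = np.linalg.solve(e_meas, c_noisy)
    c = np.clip(c, 0.0, None)
    return c / c.sum()


# Hypothetical single-qubit calibration: |0> is read correctly 95% of the time,
# |1> is read correctly 90% of the time.
calibration = np.array([[950, 50],
                        [100, 900]], dtype=float)
e_meas = build_error_matrix(calibration)

# A noisy distribution generated from a "true" distribution of [0.7, 0.3].
c_noisy = e_meas @ np.array([0.7, 0.3])
c_mitigated = mitigate(e_meas, c_noisy)
print(c_mitigated)  # recovers approximately [0.7, 0.3]
```

The same functions work unchanged for $n$ qubits with a $2^n \times 2^n$ calibration array, although the calibration cost then grows exponentially.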

Visualization of the SPAM Error Mitigation Workflow:

Guide 2: Implementing Zero-Noise Extrapolation (ZNE) for VQE Energy Calculations

ZNE is a powerful technique to infer the noiseless value of an observable from measurements taken at different noise levels [1].

Symptoms: The computed energy from VQE is significantly higher than the exact ground-state energy; the energy estimate drifts as circuit depth increases.

Step-by-Step Protocol:

- Choose a Noise Scaling Method:
  - Pulse-Level Scaling: If available, stretch the duration of the physical control pulses to directly increase exposure to decoherence. This rescales the hardware's native noise most directly, but it needs pulse-level access and typically recalibration of the stretched pulses.
  - Unitary Folding: A more common digital approach. Replace each gate $G$ (or block of gates) in the circuit with $G G^\dagger G$. This leaves the ideal logic unchanged but increases the circuit's exposure to noise [1]. Folding every gate once triples the gate count (roughly $\lambda = 3$ under a simple noise model); folding only a subset of gates gives intermediate scale factors.

- Execute at Multiple Scales:
  - Define a set of scale factors, e.g., $\lambda = [1.0, 2.0, 3.0]$, where $\lambda = 1.0$ is the original circuit.
  - For each scale factor, build the scaled circuit and run the VQE measurement routine to obtain the expectation value of the Hamiltonian $\langle H(\lambda) \rangle$.

- Extrapolate to Zero Noise:
  - Plot the measured $\langle H(\lambda) \rangle$ against the noise scale factor $\lambda$.
  - Fit a model (e.g., linear, exponential, or Richardson extrapolation) to these data points.
  - Extrapolate the fitted model to $\lambda = 0$ to estimate the zero-noise, mitigated energy value [1].
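The sketch below walks through unitary folding and Richardson extrapolation on a deliberately tiny toy model: one qubit, one $R_x(\theta)$ gate, and a hypothetical depolarizing channel after every gate, simulated with NumPy density matrices. It folds the whole circuit ($U \to U (U^\dagger U)^k$, giving odd scale factors 1, 3, 5) instead of the fractional factors listed above, and none of the function names come from Qiskit or PennyLane.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def rx(theta: float) -> np.ndarray:
    """Single-qubit rotation exp(-i * theta * X / 2)."""
    return np.cos(theta / 2) * I2 - 1j * np.sin(theta / 2) * X


def run_noisy(gates: list, p: float) -> float:
    """Apply gates to |0><0| in order, each followed by depolarizing noise of
    strength p (hypothetical noise model), and return <Z>."""
    rho = np.array([[1, 0], [0, 0]], dtype=complex)
    for g in gates:
        rho = g @ rho @ g.conj().T
        rho = (1 - p) * rho + p * I2 / 2
    return float(np.real(np.trace(Z @ rho)))


def fold(gates: list, num_folds: int) -> list:
    """Global unitary folding U -> U (U^dagger U)^k; scale factor = 2k + 1."""
    inverse = [g.conj().T for g in reversed(gates)]
    return gates + (inverse + gates) * num_folds


def richardson_zero(scales, values) -> float:
    """Evaluate the polynomial interpolating the (scale, value) pairs at scale = 0."""
    coeffs = np.polyfit(scales, values, deg=len(scales) - 1)
    return float(np.polyval(coeffs, 0.0))


theta, p = 0.3, 0.05
circuit = [rx(theta)]
scales = [1, 3, 5]
values = [run_noisy(fold(circuit, k), p) for k in (0, 1, 2)]
ideal = run_noisy(circuit, 0.0)
mitigated = richardson_zero(scales, values)
print(f"noisy={values[0]:.4f}  mitigated={mitigated:.4f}  ideal={ideal:.4f}")
```

In this toy model $\langle Z \rangle$ decays as $(1-p)^\lambda \cos\theta$, so a quadratic Richardson fit through three points removes most, but not all, of the bias. Real devices rarely follow such a clean model, which is why the choice of extrapolation function matters.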

Visualization of the ZNE Workflow:

Guide 3: Applying Symmetry Verification for Quantum Chemistry Simulations

Many molecular Hamiltonians possess inherent symmetries, such as particle number conservation. This protocol detects and discards results that violate these symmetries due to errors [1] [3].

Symptoms: Computed molecular states violate known physical constraints (e.g., the number of electrons in the system is not conserved).

Step-by-Step Protocol:

- Identify the Symmetry:
  - For a typical molecular electronic structure problem, the total number of electrons is a conserved quantity. The corresponding symmetry operator is the particle number operator $\hat{N}$.

- Add Symmetry Measurement:
  - Append to your VQE ansatz circuit additional gates that measure the symmetry operator $\hat{N}$ without disturbing the state in the computational subspace. This often requires additional ancilla qubits.

- Post-Select Data:
  - Run the complete circuit (ansatz + symmetry measurement) many times.
  - For each shot (circuit run), you will get two results: the energy measurement outcome and the symmetry measurement outcome.
  - Discard every energy measurement outcome whose symmetry measurement does not match the known, correct value (e.g., the number of electrons in your molecule of interest). This is called post-selection [1].
  - Use only the post-selected data to compute the final expectation value of the energy.
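A minimal post-selection sketch, assuming a Jordan-Wigner-style encoding in which each measured bit is the occupation of one spin-orbital. Under that assumption the particle number of a computational-basis outcome is simply its count of 1s, so for diagonal (Z-type) energy terms $\hat{N}$ can be read off the same shots without an ancilla. The counts, orbital energies, and function names are hypothetical illustration values, not data from [1] or [3].

```python
def particle_number(bitstring: str) -> int:
    """Electrons in a JW-encoded outcome: one bit per spin-orbital, 1 = occupied."""
    return bitstring.count("1")


def postselect(counts: dict[str, int], n_electrons: int) -> dict[str, int]:
    """Keep only shots whose measured particle number equals n_electrons."""
    return {b: c for b, c in counts.items() if particle_number(b) == n_electrons}


def diagonal_energy(counts: dict[str, int], orbital_energies: list[float]) -> float:
    """Shot-weighted average of a hypothetical diagonal energy:
    the sum of the orbital energies of the occupied spin-orbitals."""
    total = sum(counts.values())
    weighted = sum(
        c * sum(e for bit, e in zip(b, orbital_energies) if bit == "1")
        for b, c in counts.items()
    )
    return weighted / total


# Hypothetical raw shots for 4 spin-orbitals and 2 electrons.
raw_counts = {"1100": 800, "1010": 50, "1000": 90, "1110": 60}
orbital_energies = [-1.0, -1.0, 0.5, 0.5]

kept = postselect(raw_counts, n_electrons=2)
print("raw energy:", diagonal_energy(raw_counts, orbital_energies))
print("post-selected energy:", diagonal_energy(kept, orbital_energies))
```

The shots "1000" and "1110" carry the wrong number of electrons and are dropped, so 150 of the 1000 shots are discarded; that loss of samples is the price of post-selection.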

The Scientist's Toolkit: Essential Reagents & Protocols

This table details key algorithmic "reagents" and computational protocols essential for conducting noise-resilient quantum chemistry experiments.

Table: Key Resources for Noise-Resilient Quantum Chemistry

| Tool / Protocol | Function / Purpose | Key Reference / Implementation |
|---|---|---|
| Variational Quantum Eigensolver (VQE) | Hybrid quantum-classical algorithm to find molecular ground-state energies. Resilient to some noise by using shallow circuits [1] [9]. | Peruzzo et al. (2014) [2] |
| Quantum Approximate Optimization Algorithm (QAOA) | Hybrid algorithm for combinatorial problems; can be adapted for chemistry. Performance improves with circuit depth (p), but so does noise susceptibility [1]. | Farhi et al. (2014) [1] [2] |
| Zero-Noise Extrapolation (ZNE) | Error mitigation technique that artificially increases noise to extrapolate back to a zero-noise result [1]. | Implemented in software like Qiskit, PennyLane. |
| Symmetry Verification | Error mitigation that uses conservation laws to detect and discard erroneous results [1] [3]. | Applicable to any problem with a known symmetry (particle number, spin, etc.). |
| Pauli Channel Learning (EL Protocol) | Efficiently characterizes the spatial correlations of noise in a multi-qubit device, which is critical for optimizing QEM and QEC [4]. | Gough et al. (2023) [Scientific Reports] [4] |
| Root Space Decomposition Framework | A novel mathematical framework for classifying and characterizing how noise propagates through a quantum system over space and time, simplifying error diagnosis [10]. | Quiroz & Watkins (2025) [Johns Hopkins APL] [10] |


Troubleshooting Guides

Diagnosing and Mitigating Decoherence

Observed Problem: Quantum state fidelity degrades rapidly with increasing circuit depth, or quantum memory lifetimes are shorter than expected.

Diagnostic Methodology:

- Step 1: Characterize T1 and T2 Times. Measure the energy relaxation time (T1) and phase coherence time (T2) for all qubits. Because 1/T2 = 1/(2·T1) + 1/Tφ, a T2 well below its relaxation limit of 2·T1 indicates pure dephasing noise (rate 1/Tφ) in addition to energy relaxation [11].

- Step 2: Map Crosstalk. Execute single-qubit gates on one qubit while neighboring qubits idle. Measure the phase accumulated on the idle qubits to identify and quantify coherent crosstalk, a common error source that can masquerade as decoherence [12].

- Step 3: Analyze Noise Temporal Correlations. Perform repeated T2 measurements (e.g., using Hahn echo sequences) with varying time intervals. If the results fluctuate, it suggests the presence of non-Markovian, non-static noise, such as that from two-level systems in the substrate [10].
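The relation behind Step 1 is the standard decomposition $1/T_2 = 1/(2T_1) + 1/T_\phi$, where $T_\phi$ is the pure-dephasing time. The NumPy sketch below extracts $T_\phi$ from measured $T_1$ and $T_2$ and fits a decay time with a log-linear fit. It assumes clean, offset-free exponential data (real T1/T2 fits usually use nonlinear least squares with an offset term), and the example numbers are hypothetical.

```python
import numpy as np


def pure_dephasing_time(t1: float, t2: float) -> float:
    """Solve 1/T2 = 1/(2*T1) + 1/T_phi for T_phi (same units as the inputs)."""
    if t2 > 2 * t1:
        raise ValueError("T2 cannot exceed 2*T1")
    rate = 1.0 / t2 - 1.0 / (2.0 * t1)
    return float("inf") if rate <= 0 else 1.0 / rate


def fit_decay_time(times: np.ndarray, signal: np.ndarray) -> float:
    """Fit signal = A * exp(-t / T) with a straight-line fit to log(signal)."""
    slope, _intercept = np.polyfit(times, np.log(signal), deg=1)
    return float(-1.0 / slope)


# Hypothetical measured values in microseconds.
print(pure_dephasing_time(t1=100.0, t2=80.0))   # ~133.3 us of pure dephasing

times = np.linspace(0.0, 300.0, 31)
signal = 0.98 * np.exp(-times / 120.0)          # hypothetical noiseless T1 decay
print(fit_decay_time(times, signal))            # ~120.0
```

A qubit with T2 exactly equal to 2·T1 returns an infinite Tφ, i.e., no pure dephasing at all.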

Mitigation Protocols:

- Dynamic Decoupling: For idling qubits, insert sequences of π pulses (e.g., CPMG, built from X pulses, or XY4, which alternates X and Y pulses) to refocus the phase evolution caused by low-frequency noise. Recent demonstrations on 100+ qubit systems have shown this can reduce errors by up to 25% [12].

- Decoherence-Free Subspaces (DFS): Encode logical information into a subspace of multiple physical qubits that is immune to collective noise. Experiments on trapped-ion systems have used DFS to extend quantum memory lifetimes by more than a factor of 10 compared to single physical qubits [11].

- Shortened Execution Time: Optimize circuit compilation to minimize latency, especially during mid-circuit measurements and reset operations. Leverage hardware-native gates and all-to-all connectivity, where available, to reduce the number of SWAP gates and overall circuit duration [13].
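Why dynamic decoupling works against low-frequency noise can be seen in a toy NumPy model. An idling qubit prepared in $|+\rangle$ picks up an unknown but static detuning $\delta$ on each shot (quasi-static noise, drawn here from a hypothetical Gaussian). A single echo (free evolution for $T/2$, an X pulse, free evolution for $T/2$, another X pulse) cancels the accumulated phase exactly. The sketch assumes ideal, instantaneous pulses; sequences such as CPMG or XY4 repeat this idea with more pulses to also handle slowly varying noise.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
PLUS = np.array([1, 1], dtype=complex) / np.sqrt(2)


def free_evolution(delta: float, t: float) -> np.ndarray:
    """Idle evolution under a static detuning: exp(-i * delta * t * Z / 2)."""
    return np.diag([np.exp(-0.5j * delta * t), np.exp(0.5j * delta * t)])


def x_expectation(u: np.ndarray) -> float:
    """<X> after applying u to |+>; 1.0 means the phase is fully preserved."""
    psi = u @ PLUS
    return float(np.real(psi.conj() @ X @ psi))


def idle_with_echo(delta: float, total_time: float) -> np.ndarray:
    """Free(T/2), X, Free(T/2), X: the X pulse flips the sign of later phase."""
    half = free_evolution(delta, total_time / 2)
    return X @ half @ X @ half


rng = np.random.default_rng(seed=7)
deltas = rng.normal(0.0, 1.0, size=2000)   # hypothetical detunings (rad per us)
total_time = 3.0                            # hypothetical idle time (us)

coherence_free = np.mean([x_expectation(free_evolution(d, total_time)) for d in deltas])
coherence_echo = np.mean([x_expectation(idle_with_echo(d, total_time)) for d in deltas])
print(f"without echo: {coherence_free:.3f}, with echo: {coherence_echo:.3f}")
```

Without the echo, averaging $\cos(\delta T)$ over the shots washes out the coherence; with it, each shot's phase is undone regardless of its particular $\delta$.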

Correcting for Gate Errors

Observed Problem: The measured outcome distribution of a quantum circuit deviates significantly from noiseless simulation, even for shallow circuits.

Diagnostic Methodology:

- Step 1: Perform Gate Set Tomography (GST). While resource-intensive, GST provides a self-consistent and complete characterization of a set of quantum gates, revealing correlated errors and non-Markovian dynamics that standard randomized benchmarking might miss.

- Step 2: Benchmark Simultaneous Gate Operations. Use mirror circuits or correlated randomized benchmarking to measure the error rates of gates applied in parallel. This is critical for identifying crosstalk, which is often the dominant error source in multi-qubit operations [12].

- Step 3: Validate with Quantum Process Tomography on Key Subunits. For critical subroutines in your algorithm (e.g., a specific two-qubit gate used in a variational ansatz), full quantum process tomography can provide a detailed error map.

Mitigation Protocols:

- Calibration and Filtering: Implement robust calibration routines to maintain high single- and two-qubit gate fidelities. Use filter cavities and improved electronics to suppress noise in control pulses [11].

- Error-Aware Compilation: Use compilation tools that incorporate gate fidelity data and crosstalk metrics to avoid scheduling noisy gates or parallel operations on qubits with known high crosstalk [12].

- Probabilistic Error Cancellation (PEC): This advanced error mitigation technique uses a detailed noise model to "un-bias" the results of a noisy quantum circuit. Recent toolkits have demonstrated methods to decrease the sampling overhead of PEC by 100x, making it more practical for utility-scale circuits [12].
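To make the idea behind PEC concrete, the NumPy sketch below inverts a single-qubit depolarizing channel (a hypothetical noise model that shrinks the Bloch vector by a factor $f$) by writing the inverse channel as a quasi-probability mixture of Pauli corrections, some with negative weight. Sampling those corrections and reweighting by the total weight $\gamma$ gives an unbiased estimate of the noiseless expectation value, at the cost of a variance that grows roughly as $\gamma^2$; this is the sampling overhead that the toolkits mentioned above aim to reduce. It is a toy model, not the Qiskit or Samplomatic implementation.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULIS = [I2, X, Y, Z]


def depolarize(rho: np.ndarray, f: float) -> np.ndarray:
    """Toy noise: rho -> f * rho + (1 - f) * I / 2 (Bloch vector shrinks by f)."""
    return f * rho + (1 - f) * np.trace(rho) * I2 / 2


def inverse_quasi_probs(f: float) -> np.ndarray:
    """Weights q_P such that sum_P q_P P rho P undoes depolarize(rho, f).
    The X, Y, Z weights are negative, so this is not a physical channel."""
    q = (1 - 1 / f) / 4
    return np.array([(1 + 3 / f) / 4, q, q, q])


def rx(theta: float) -> np.ndarray:
    return np.cos(theta / 2) * I2 - 1j * np.sin(theta / 2) * X


theta, f = 0.3, 0.9
u = rx(theta)
rho0 = np.array([[1, 0], [0, 0]], dtype=complex)
noisy_rho = depolarize(u @ rho0 @ u.conj().T, f)   # noisy gate = ideal gate, then noise

# <Z> after each Pauli correction P is applied to the noisy output state.
z_after = np.array([np.real(np.trace(Z @ P @ noisy_rho @ P)) for P in PAULIS])
q = inverse_quasi_probs(f)
gamma = np.abs(q).sum()
exact_pec = float(q @ z_after)                      # exact quasi-probability sum

# Monte Carlo PEC: sample P with probability |q_P| / gamma, weight by gamma * sign(q_P).
rng = np.random.default_rng(seed=3)
samples = rng.choice(4, size=20000, p=np.abs(q) / gamma)
estimate = float(np.mean(gamma * np.sign(q[samples]) * z_after[samples]))

ideal = float(np.cos(theta))
noisy = float(np.real(np.trace(Z @ noisy_rho)))
print(f"ideal={ideal:.4f} noisy={noisy:.4f} PEC exact={exact_pec:.4f} "
      f"PEC sampled={estimate:.4f} gamma={gamma:.3f}")
```

As the noise gets stronger ($f$ smaller), $\gamma$ grows and more samples are needed for the same statistical error, which is why PEC depends so heavily on an accurate, sparse noise model.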

Managing Measurement Inaccuracies

Observed Problem: Readout errors are high, or the results are inconsistent between successive measurements of the same state.

Diagnostic Methodology:

- Step 1: Construct a Confusion Matrix. Prepare each computational basis state and measure the output probabilities. This creates a readout confusion matrix that can be used to classically correct measurement errors.

- Step 2: Test Mid-Circuit Measurement Crosstalk. Perform a mid-circuit measurement on one qubit while a neighboring qubit is in a superposition state. Measure the phase and amplitude damping on the idling qubit to quantify measurement-induced decoherence, which has been identified as a dominant "memory noise" in some systems [13].

- Step 3: Characterize Reset Fidelity and Duration. Measure the probability of successfully resetting a qubit to the ground state and the time required. Poor reset performance can corrupt subsequent circuit steps.

Mitigation Protocols:

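- Readout Error Mitigation: Apply the inverse of the experimentally determined confusion matrix to the measured outcome statistics. This is a standard classical post-processing technique, but it is costly: a full confusion matrix needs calibration runs for all 2^n basis states, and the inversion amplifies statistical noise, so more samples are required.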

- Dynamical Decoupling During Measurement: Apply dynamical decoupling sequences to qubits that are idle during concurrent mid-circuit measurements and feedforward operations. One demonstration showed this technique, combined with feedforward, improved result accuracy by 25% [12].

- Custom Discriminators and Filtering: Optimize the classical processing of the analog measurement signals to better distinguish between states, reducing misassignment rates.
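As a minimal illustration of a custom discriminator, the sketch below assumes the analog readout has already been reduced to one real number per shot (for example, a projection of the IQ-plane signal, which is an assumption here) and picks the threshold that maximizes the average assignment fidelity. The Gaussian signal parameters and the function name are hypothetical.

```python
import numpy as np


def best_threshold(signal_0: np.ndarray, signal_1: np.ndarray) -> tuple[float, float]:
    """Return (threshold, fidelity); shots above the threshold are labelled |1>.

    Fidelity is 0.5 * [P(label 0 | prepared 0) + P(label 1 | prepared 1)],
    evaluated with every observed signal value as a candidate threshold.
    """
    s0, s1 = np.sort(signal_0), np.sort(signal_1)
    candidates = np.concatenate([s0, s1])
    p00 = np.searchsorted(s0, candidates, side="right") / s0.size
    p11 = 1.0 - np.searchsorted(s1, candidates, side="right") / s1.size
    fidelity = 0.5 * (p00 + p11)
    k = int(np.argmax(fidelity))
    return float(candidates[k]), float(fidelity[k])


rng = np.random.default_rng(seed=1)
shots_0 = rng.normal(-1.0, 0.4, size=5000)   # hypothetical signals for prepared |0>
shots_1 = rng.normal(1.0, 0.4, size=5000)    # hypothetical signals for prepared |1>
threshold, fidelity = best_threshold(shots_0, shots_1)
print(f"threshold={threshold:.3f}, assignment fidelity={fidelity:.4f}")
```

With symmetric, equal-width distributions the best threshold sits near the midpoint; asymmetric effects such as T1 decay during readout shift it, which is where tuning against real calibration data pays off.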

Table 1: Representative Error Rates Across Quantum Hardware Platforms

| Platform | Energy Relaxation Time (T1) | Dephasing Time (T2) | Single-Qubit Gate Error | Two-Qubit Gate Error | Measurement Error | Source / Example |
|---|---|---|---|---|---|---|
| Superconducting | 100 - 300 µs | 100 - 200 µs | ~0.02% | 0.1% - 0.5% | 1 - 3% | IBM Heron r3 [12] |
| Trapped-Ions | > 10 s | > 1 s | ~0.03% | ~0.3% | 0.5 - 2% | Quantinuum H-Series [13] |
| Neutral Atoms | Information Missing | Information Missing | Information Missing | Information Missing | Information Missing | Information Missing |

Table 2: Impact and Mitigation of Key Noise Types

| Noise Source | Primary Impact on Computation | Key Mitigation Strategy | Reported Performance Gain | Source |
|---|---|---|---|---|
| Amplitude Damping | Qubit energy loss | Dynamic decoupling, QEC | Information Missing | [14] [11] |
| Dephasing | Loss of phase coherence | Dynamic decoupling, DFS | 25% accuracy improvement with DD during idle periods [12] | [12] |
| Gate Crosstalk | Correlated errors on idle qubits | Error-aware compilation | 58% reduction in 2Q gates via dynamic circuits [12] | [12] |
| Memory Noise | Decoherence during idle/measurement | Dynamical decoupling, faster reset | Identified as dominant error source in QEC experiments [13] | [13] |

Experimental Protocols for Noise Characterization

Protocol 1: Full Gate Set Tomography (GST)

Objective: To obtain a complete description of the quantum operations in a small gate set, including all correlations and non-Markovian errors.

Procedure:

- Define a gate set: Typically {I, Gx, Gy} for each qubit, where I is idle, and Gx/Gy are π/2 rotations.

- Generate long sequences: Create a set of experiments where the gate operations are repeated many times. The sequences are designed to amplify and isolate every possible type of error in the gate set.

- Execute and measure: For each sequence, prepare a fixed initial state, run the gate sequence, and measure in a fixed basis.

- Reconstruct the map: Use maximum-likelihood estimation to find the set of quantum process matrices (or Pauli transfer matrix (PTM) representations) for the gates that best fit the experimental data.

Protocol 2: Mirror Circuit for Crosstalk Characterization

Objective: To measure the error of a specific gate operation in the presence of simultaneous operations on other qubits, isolating crosstalk.

Procedure:

- Create a "perfect echo": For a target two-qubit gate on qubits (i, j), design a circuit that applies a random layer of single-qubit gates, then the target gate, then the target gate's inverse, and finally the inverse of the random layer. In isolation, this mirrored sequence should return the qubits to their initial state.

- Add stressor gates: Simultaneously apply a set of "stressor" gates (e.g., random single-qubit gates or two-qubit gates) on other qubits in the system during the execution of the mirror circuit.

- Measure fidelity: The sequence fidelity of the mirror circuit on qubits (i, j), when stressors are active, quantifies the crosstalk impact on that specific gate.
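A two-qubit statevector sketch of a mirror circuit (layer, target gate, target gate's inverse, inverse layer) with CNOT as the target gate. To keep the result reproducible, a fixed Hadamard layer stands in for the random single-qubit layer, and stressor-induced crosstalk is modeled as a hypothetical coherent ZZ phase error applied after each use of the target gate. Without the error the circuit returns to $|00\rangle$ with certainty; with it, the return probability drops.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)   # control = first qubit
ZZ_EIGENVALUES = np.array([1, -1, -1, 1])         # Z (x) Z on |00>, |01>, |10>, |11>


def zz_crosstalk(phi: float) -> np.ndarray:
    """Hypothetical coherent crosstalk model: exp(-i * phi * Z(x)Z / 2)."""
    return np.diag(np.exp(-0.5j * phi * ZZ_EIGENVALUES))


def mirror_return_probability(layer: np.ndarray, gate: np.ndarray,
                              crosstalk: np.ndarray) -> float:
    """P(|00>) after layer -> gate -> gate^dagger -> layer^dagger,
    with `crosstalk` applied after each application of the target gate."""
    psi = np.zeros(4, dtype=complex)
    psi[0] = 1.0
    for op in (layer, gate, crosstalk, gate.conj().T, crosstalk, layer.conj().T):
        psi = op @ psi
    return float(abs(psi[0]) ** 2)


layer = np.kron(H, H)
p_ideal = mirror_return_probability(layer, CNOT, np.eye(4, dtype=complex))
p_stressed = mirror_return_probability(layer, CNOT, zz_crosstalk(0.2))
print(f"return probability: ideal={p_ideal:.4f}, with stressors={p_stressed:.4f}")
```

On hardware, the same comparison is made between runs with and without the stressor gates active, using measured return frequencies instead of simulated amplitudes.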

The Scientist's Toolkit

Table 3: Key Research Reagent Solutions for Noise-Resilient Quantum Chemistry

| Item / Technique | Function in Experiment | Relevance to Noise Resilience |
|---|---|---|
| Qiskit SDK with Samplomatic | Open-source quantum software development kit [12]. | Enables advanced error mitigation (e.g., PEC with 100x lower overhead) and dynamic circuits via box annotations [12]. |
| Dynamic Circuits with Mid-circuit Measurement | Circuits that condition future operations on intermediate measurement results [12]. | Reduces circuit depth and crosstalk; allows for real-time QEC and reset, cutting 2Q gates by 58% in one demo [12]. |
| Quantum Principal Component Analysis | A quantum algorithm for filtering noise from a density matrix [15]. | Can be applied to a sensor's output state on a quantum processor to enhance measurement accuracy (200x improvement shown in NV-center experiments) [15]. |
| Decoherence-Free Subspaces | A method to encode logical qubits in a subspace immune to collective noise [11]. | Protects quantum memory; demonstrated to extend coherence times by over 10x on trapped-ion hardware [11]. |
| Quantum Error Correction Codes | Encodes a logical qubit into multiple physical qubits to detect and correct errors [13]. | Foundation for fault tolerance; enabled the first end-to-end error-corrected quantum chemistry computation (molecular hydrogen ground state) on real hardware [13]. |


Workflow and Signaling Diagrams

Frequently Asked Questions (FAQs)

Q: Our quantum chemistry simulations are consistently off by more than "chemical accuracy." Where should we focus our mitigation efforts first? A: The first step is to identify the dominant noise source in your specific circuit. Run a series of simple characterization circuits on your target hardware:

- Measure T1/T2 times to diagnose decoherence limits.

- Run mirror circuits to check for gate crosstalk.

- Perform readout calibration to build a confusion matrix.

Often, for deep circuits, memory noise during idling or mid-circuit operations is a dominant factor. Implementing dynamical decoupling on idle qubits is a highly effective first step that has been shown to improve accuracy by up to 25% [12].

Q: Does quantum error correction (QEC) actually help on today's noisy hardware, or does the overhead make things worse? A: Recent experiments have demonstrated that QEC can indeed improve performance despite the overhead. Quantinuum's calculation of the molecular hydrogen ground state using a 7-qubit code showed that circuits with mid-circuit error correction performed better than those without, showing that the noise suppression can outweigh the added complexity [13]. The key is using tailored, "partially fault-tolerant" methods that offer a good trade-off between error suppression and resource overhead.

Q: What is "memory noise" and why is it particularly damaging? A: Memory noise refers to errors that accumulate on qubits while they are idle, waiting to be used in a subsequent operation. This includes dephasing and energy relaxation. It is particularly damaging in complex algorithms like Quantum Phase Estimation (QPE) because it scales with circuit duration, unlike gate errors, which scale with the number of gates. In one study, incoherent memory noise was identified as the leading contributor to circuit failure after other errors were mitigated [13].

Q: Is there a "Goldilocks zone" for achieving quantum advantage with noisy qubits? A: Theoretical work suggests there is, at least for unstructured problems. If a quantum computer has too few qubits, it is not powerful enough; if it has too many qubits without a corresponding reduction in per-gate error rates, the noise overwhelms the computation. Scalable quantum advantage would therefore require the noise rate per gate to shrink as the number of qubits grows, which is extremely difficult without error correction. On this view, error correction is the only path to fully scalable quantum advantage [16].

Frequently Asked Questions

FAQ 1: What is a Barren Plateau, and how do I know if my algorithm is on one? A Barren Plateau (BP) is a phenomenon where the cost function landscape of a variational quantum algorithm becomes exponentially flat as the system size increases [17]. This means that for an n-qubit system, the gradients of the cost function vanish exponentially in n. You can identify a potential BP if you observe that the variances of your cost function or its gradients become exceptionally small as you scale up your problem, making it impossible for classical optimizers to find a minimizing direction without an exponential number of measurement shots [17] [18].

FAQ 2: What are the main causes of Barren Plateaus? BPs can arise from several sources, often in combination [17]:

- Ansatz Choice: Deep, hardware-efficient ansatzes that behave like random circuits.

- Problem Structure: Global cost functions that require measuring correlations across all qubits.

- Entanglement: The presence of high levels of entanglement in the quantum circuit.

- Hardware Noise: Incoherent noise on hardware can lead to Noise-Induced Barren Plateaus (NIBPs), where the gradient vanishes exponentially with circuit depth and number of qubits [18].

FAQ 3: My algorithm is stuck on a Barren Plateau. What mitigation strategies can I try? Several strategies have been developed to avoid or mitigate BPs:

- Use Local Cost Functions: Instead of global observables, define cost functions based on local measurements, which are less prone to BPs [18].

- Employ Structured Ansatzes: Choose ansatzes with inherent problem symmetries or those with limited entanglement to prevent the circuit from behaving like a random unitary [17] [19].

- Explore Alternative Paradigms: Consider moving from a constrained optimization problem to a generalized eigenvalue problem using quantum subspace methods like the Generator Coordinate Inspired Method (GCIM) or ADAPT-GCIM, which can circumvent the BP issue [20] [21].

- Suppress Noise: While not a full solution, reducing circuit depth and applying noise-suppression techniques, such as quantum error correction codes tailored to the dominant noise, can reduce the impact of noise and thereby partially address NIBPs [7] [8].

FAQ 4: Is there a trade-off between avoiding Barren Plateaus and achieving a quantum advantage? Yes, this is a critical area of research. There is growing evidence that the structural constraints often used to provably avoid BPs (e.g., restricting the circuit to a small, tractable subspace) may also allow the problem to be efficiently simulated classically [19]. This suggests that while these strategies make the problem trainable, they might simultaneously negate the potential for a super-polynomial quantum advantage. The challenge is to find models that are both trainable and not classically simulable.

Quantitative Data on Barren Plateaus

Table 1: Gradient Scaling in Different Barren Plateau Scenarios

| Scenario | Cause of Barren Plateau | Scaling of Gradient Variance | Key Mitigation Strategy |
|---|---|---|---|
| Noise-Induced Barren Plateaus (NIBPs) | Local Pauli noise (e.g., depolarizing) | Exponentially small in the number of qubits n and circuit depth L [18] | Reduce circuit depth; use error mitigation codes [18] [7] |
| Deep Hardware-Efficient Ansatz | Random parameter initialization in deep, unstructured circuits | Exponentially small in the number of qubits n [17] [18] | Use local cost functions; pre-training; structured ansatzes [18] |
| Shallow Circuit with Global Cost | Cost function depends on global observable across all qubits | Exponentially small in the number of qubits n, even for shallow depths [18] | Reformulate problem using local cost functions [18] |

Table 2: Comparison of VQE and GCIM Approaches

| Feature | Variational Quantum Eigensolver (VQE) | Generator Coordinate Inspired Method (GCIM) |
|---|---|---|
| Core Principle | Constrained optimization over a parameterized quantum circuit [20] | Generalized eigenvalue problem within a constructed subspace [20] [21] |
| Landscape | Prone to barren plateaus and local minima [20] | Bypasses barren plateaus associated with heuristic optimizers [20] |
| Parameterization | Highly nonlinear [20] | Linear combination of non-orthogonal basis states [20] |
| Key Advantage | Direct minimization of energy | Its energy is a lower bound to the corresponding VQE energy while remaining variational with respect to the exact ground state; optimization-free basis selection [20] |
| Resource Requirement | Multiple optimization iterations, each requiring many quantum measurements [22] | Fewer classical optimization loops, but requires more measurements to build the effective Hamiltonian [20] |

Experimental Protocols and Workflows

Protocol 1: Diagnosing a Noise-Induced Barren Plateau (NIBP)

Objective: To empirically verify if the vanishing gradients in a variational quantum algorithm are due to hardware noise.

Materials:

- Noisy quantum processing unit (QPU) or a noisy circuit simulator.

- Target variational quantum algorithm (e.g., VQE with a specific ansatz).

- Classical optimizer.

Methodology:

- Circuit Preparation: Implement your chosen parameterized quantum circuit `U(θ)` on the QPU [18].

- Gradient Calculation: For a fixed set of parameters `θ`, compute the partial derivative of the cost function `C(θ)` with respect to a parameter `θ_i`. This can be done using the parameter-shift rule or similar methods.

- Scaling Analysis: Systematically increase the number of qubits `n` and the circuit depth `L` in your ansatz. For each `(n, L)` configuration, calculate the variance of the gradient `Var[∂C/∂θ_i]` across multiple random parameter initializations.

- Data Fitting: Plot `Var[∂C/∂θ_i]` as a function of `n` and `L`. Fit an exponential decay curve to the data. An exponential decay in the gradient variance with respect to `n` and `L` is a strong indicator of an NIBP [18].

Interpretation: If the gradient variance decreases exponentially with both the number of qubits and the circuit depth, the algorithm is likely experiencing an NIBP. Mitigation efforts should then focus on noise reduction and circuit depth compression.
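A small NumPy sketch of the gradient-variance measurement in this protocol, assuming a hypothetical hardware-efficient ansatz (layers of $R_y$ rotations followed by a chain of CZ gates), the local cost $C(\theta) = \langle Z_0 \rangle$, and parameter-shift gradients. As a simplifying assumption, hardware noise is modeled by a global depolarizing channel with probability $p$ after each layer; for a traceless observable this multiplies the cost by $(1-p)^L$, so the noisy gradient variance equals the noiseless one times $(1-p)^{2L}$, the exponential-in-depth decay the protocol looks for. On hardware, `expectation` would be replaced by measured estimates.

```python
import numpy as np


def ry(theta: float) -> np.ndarray:
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])


def apply_1q(state: np.ndarray, gate: np.ndarray, qubit: int, n: int) -> np.ndarray:
    """Apply a single-qubit gate to `qubit` (qubit 0 = most significant bit)."""
    psi = np.tensordot(gate, state.reshape([2] * n), axes=([1], [qubit]))
    return np.moveaxis(psi, 0, qubit).reshape(-1)


def basis_bits(n: int) -> np.ndarray:
    """bits[k, q] = value of qubit q in computational basis state k."""
    idx = np.arange(2 ** n)
    return (idx[:, None] >> np.arange(n - 1, -1, -1)) & 1


def expectation(params: np.ndarray, n: int, layers: int, p: float) -> float:
    """<Z_0> for the layered Ry + CZ-chain ansatz, scaled by (1 - p)^layers
    to model a global depolarizing channel after every layer."""
    bits = basis_bits(n)
    cz_signs = 1 - 2 * (np.sum(bits[:, :-1] * bits[:, 1:], axis=1) % 2)
    state = np.zeros(2 ** n)
    state[0] = 1.0
    for layer in range(layers):
        for q in range(n):
            state = apply_1q(state, ry(params[layer * n + q]), q, n)
        state = cz_signs * state
    z0 = 1 - 2 * bits[:, 0]
    return (1 - p) ** layers * float(np.sum(z0 * state ** 2))


def gradient_variance(n: int, layers: int, p: float, samples: int, seed: int = 0) -> float:
    """Variance of dC/dtheta_0 over random initialisations (parameter-shift rule)."""
    rng = np.random.default_rng(seed)
    grads = []
    for _ in range(samples):
        params = rng.uniform(0, 2 * np.pi, size=n * layers)
        shift = np.zeros_like(params)
        shift[0] = np.pi / 2
        grads.append(0.5 * (expectation(params + shift, n, layers, p)
                            - expectation(params - shift, n, layers, p)))
    return float(np.var(grads))


for layers in (1, 2, 4, 8):
    v_ideal = gradient_variance(n=4, layers=layers, p=0.0, samples=200)
    v_noisy = gradient_variance(n=4, layers=layers, p=0.1, samples=200)
    print(f"L={layers}: Var ideal={v_ideal:.3e}, Var noisy={v_noisy:.3e}")
```

For the Data Fitting step, a straight-line fit of `log(Var)` against `L` (and, separately, against `n`) gives the decay rate; under this toy noise model the noise contribution to the slope in `L` is `2 * log(1 - p)`.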

Protocol 2: Implementing the GCIM/ADAPT-GCIM Approach

Objective: To find the ground state energy of a molecular system while avoiding the barren plateau problem.

Materials:

- A quantum computer capable of preparing a reference state and applying unitary gates.

- A pool of unitary generators (e.g., UCC single and double excitation operators).

- Classical solver for generalized eigenvalue problems.

Methodology:

- Initialization: Start with a reference state $|\phi_0\rangle$, often the Hartree-Fock state [20].

- Basis State Generation: For each generator $G_i$ in a pre-defined pool, apply it to the reference state to create a set of generating functions $|\psi_i\rangle = G_i|\phi_0\rangle$ [20]. In the adaptive version (ADAPT-GCIM), the most important generators are selected iteratively based on a gradient criterion [20].

- Quantum Measurement: Use the quantum computer to measure the elements of the overlap matrix $\mathbf{S}$ and the Hamiltonian matrix $\mathbf{H}$ in the generated basis, $S_{ij} = \langle\psi_i|\psi_j\rangle$ and $H_{ij} = \langle\psi_i|\hat{H}|\psi_j\rangle$.

- Classical Post-Processing: Construct $\mathbf{S}$ and $\mathbf{H}$ and solve the generalized eigenvalue problem $\mathbf{H}\mathbf{c} = E\,\mathbf{S}\mathbf{c}$ on a classical computer [20].

- Solution: The lowest eigenvalue $E$ is the approximation to the ground-state energy.
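The classical post-processing step can be sketched in NumPy. Because the generating functions are non-orthogonal and can be nearly linearly dependent, $\mathbf{S}$ is often ill-conditioned, so the sketch solves $\mathbf{H}\mathbf{c} = E\,\mathbf{S}\mathbf{c}$ by canonical orthogonalization (discarding near-zero eigenvalues of $\mathbf{S}$). That choice is a common one but an assumption here, not something the protocol prescribes. The Hamiltonian, reference state, and generator pool are random stand-ins, and the matrix elements are computed exactly, whereas on hardware they would be estimated from measurements.

```python
import numpy as np

def lowest_subspace_energy(H_sub, S_sub, rel_tol=1e-10):
    """Solve H c = E S c by canonical orthogonalization and return the lowest E."""
    s, U = np.linalg.eigh(S_sub)
    keep = s > rel_tol * s.max()           # drop (near-)linearly dependent directions
    X = U[:, keep] / np.sqrt(s[keep])      # X^dagger S X = identity on the kept subspace
    return np.linalg.eigvalsh(X.conj().T @ H_sub @ X)[0]

def subspace_matrices(H, states):
    """H_ij = <psi_i|H|psi_j> and S_ij = <psi_i|psi_j> (exact here, measured on hardware)."""
    Psi = np.column_stack(states)
    return Psi.conj().T @ H @ Psi, Psi.conj().T @ Psi

rng = np.random.default_rng(7)
dim = 6
A = rng.normal(size=(dim, dim))
H = (A + A.T) / 2                          # toy real-symmetric Hamiltonian
phi0 = np.eye(dim)[0]                      # stand-in for the Hartree-Fock reference

# Hypothetical generator pool: random orthogonal (real unitary) matrices.
pool = [np.linalg.qr(rng.normal(size=(dim, dim)))[0] for _ in range(dim - 1)]
states = [phi0] + [G @ phi0 for G in pool]

exact = np.linalg.eigvalsh(H)[0]
e_full = lowest_subspace_energy(*subspace_matrices(H, states))
e_small = lowest_subspace_energy(*subspace_matrices(H, states[:3]))
e_dup = lowest_subspace_energy(*subspace_matrices(H, states + [states[1]]))  # redundant state
print(exact, e_full, e_small, e_dup)
```

With as many linearly independent generating functions as the Hilbert-space dimension, the subspace energy matches exact diagonalization; with fewer, it is a variational upper bound, and a redundant basis state is harmlessly removed by the thresholding.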

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools for Quantum Chemistry Simulations

| Item | Function in Experiment |
|---|---|
| Parameterized Quantum Circuit (PQC) | The core "quantum reagent" that prepares the trial wave function. It is a sequence of parameterized gates applied to an initial state [17]. |
| Unitary Coupled Cluster (UCC) Ansatz | A specific, chemically inspired PQC used in VQE for quantum chemistry simulations. It uses excitation operators to build correlation upon a reference state [20] [18]. |
| Generator Coordinate Inspired Method (GCIM) | An alternative to VQE that projects the Hamiltonian into a non-orthogonal subspace, bypassing nonlinear optimization and its associated barren plateaus [20] [21]. |
| Quantum Subspace Expansion (QSE) | A technique similar to GCIM that constructs an effective Hamiltonian in a subspace spanned by a set of basis states, which is then diagonalized classically [20]. |
| Quantum Error Correction Codes | Codes designed to protect quantum information from noise. Recent theoretical work shows that specific "covariant" codes can protect entangled sensors, making them more robust against noise [7] [8]. |


Workflow and System Relationship Diagrams

Diagram 1: Comparing VQE and GCIM algorithmic workflows, highlighting the Barren Plateau risk in VQE's optimization loop.

Diagram 2: Primary causes of Barren Plateaus and their corresponding mitigation strategies.

Material-Induced Noise in Superconducting Qubits and Fabrication Limitations

Core Concepts: Material-Induced Noise

What is material-induced noise in superconducting qubits?

Material-induced noise refers to unwanted disturbances and decoherence in superconducting qubits that originate from the physical materials and fabrication processes used to create the quantum circuits. Unlike control electronics noise, this type of noise is intrinsic to the qubit device itself. The primary mechanisms include:

- Two-Level Systems (TLS): Atomic-scale defects in substrate surfaces and tunnel barriers that can absorb energy, causing qubit relaxation and dephasing. TLS loss is often the dominant source of noise in superconducting qubits, particularly at low temperatures and single-photon powers. [23]

- Non-equilibrium Quasiparticles: Broken Cooper pairs in superconductors that can tunnel across Josephson junctions, leading to qubit relaxation and state transitions. [24]

- Surface Dielectric Loss: Energy dissipation at interfaces between superconducting metals and substrate materials or in surface oxides. [23]

- Mechanically-Induced Correlated Errors: Vibrations from cryogenic equipment (e.g., pulse tube coolers) that generate phonons, leading to correlated errors across multiple qubits. [24]

Identification & Diagnostics

How can I identify the dominant noise source in my qubit device?

Determining the dominant noise source requires systematic characterization. The table below outlines key signatures and diagnostic methods for common material-induced noise types.

Table 1: Diagnostic Signatures of Material-Induced Noise

| Noise Type | Key Experimental Signatures | Primary Diagnostic Methods |
|---|---|---|
| Two-Level Systems (TLS) | Fluctuating qubit lifetimes ($T_1$) [24]; power-dependent loss (resonator $Q_i$ decreases at lower readout power) [23] | Stark shift measurements; time-resolved $T_1$ fluctuation analysis [24] |
| Non-equilibrium Quasiparticles | Sudden, correlated jumps in qubit energy relaxation across multiple qubits [24]; increased excited-state population | Parity switching measurements; shot-noise tunneling detectors |
| Mechanical Vibrations | Periodic error patterns synchronized with the cryocooler cycle (e.g., 1.4 Hz fundamental frequency) [24]; correlated bit-flip errors across qubits | Synchronized measurements with accelerometers [24]; vibration isolation tests |
| Surface Dielectric Loss | Consistent, non-fluctuating reduction in $T_1$; electric-field participation in dielectric interfaces | Resonator loss tangent measurements; electric-field simulation during design |

What experimental protocols can characterize mechanically-induced noise?

The following workflow, developed from recent research, can isolate vibration-induced errors:

Protocol: Time-Resolved Vibration Correlation

- Setup: Anchor an accelerometer on the dilution refrigerator's mixing chamber plate to convert mechanical vibrations to voltage signals. [24]

- Synchronization: Operate the qubit measurement setup and accelerometer oscilloscope with a common trigger signal for synchronized data acquisition. [24]

- Data Collection: Apply repeated readout pulses (e.g., 2.5 μs pulses at 1 ms intervals) while simultaneously recording vibrational data and single-shot qubit readout outcomes. [24]

- Analysis: Correlate the timing of qubit excitation events (quantum jumps to |E⟩ and |F⟩ states) with peaks in the vibrational noise spectrum. Look for harmonic patterns matching the pulse tube's fundamental frequency (typically ~1.4 Hz). [24]
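The analysis step amounts to looking for spectral lines in the qubit-jump record at the pulse-tube frequency and its harmonics. A minimal NumPy sketch follows, assuming the single-shot outcomes have already been reduced to a 0/1 "excitation event" series sampled at the 1 ms readout interval. The synthetic data use a hypothetical jump probability modulated at 1.4 Hz; the 60 s record length and the `f_min` cutoff are illustrative choices. On real data you would also cross-correlate the events with the accelerometer trace recorded under the common trigger.

```python
import numpy as np

def dominant_frequency(events, dt, f_min=0.5):
    """Frequency (Hz) of the strongest spectral line above f_min in a 0/1 event series."""
    x = events - events.mean()             # remove the DC component
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=dt)
    band = freqs >= f_min
    return freqs[band][np.argmax(power[band])]

rng = np.random.default_rng(3)
dt = 1e-3                                  # one readout every 1 ms
t = np.arange(60_000) * dt                 # 60 s record (assumption)
f_pulse_tube = 1.4                         # fundamental quoted above, in Hz

# Hypothetical per-readout jump probability, modulated by the cryocooler cycle.
p_jump = 0.01 * (1 + 0.8 * np.cos(2 * np.pi * f_pulse_tube * t))
events = (rng.random(t.size) < p_jump).astype(float)

f_peak = dominant_frequency(events, dt)
print(f"strongest line: {f_peak:.3f} Hz = {f_peak / f_pulse_tube:.2f} x pulse-tube frequency")
```

A ratio close to an integer points to the fundamental or one of its harmonics; a line unrelated to the pulse-tube frequency suggests a different vibration source.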

Diagram Title: Workflow for Vibration-Induced Noise Diagnosis

Material Selection & Fabrication

Which material combinations show promise for noise reduction?

Recent advances in material science have identified several promising pathways for reducing material-induced noise. The table below compares material systems and their demonstrated benefits.

Table 2: Material Systems for Reduced Noise in Superconducting Qubits

| Material System | Key Performance Metrics | Noise Reduction Mechanism |
|---|---|---|
| Tantalum on Silicon | Millisecond-scale coherence times [25]; reduced fabrication-related contamination [25] | Improved superconducting properties; cleaner interfaces reducing TLS density |
| Niobium Capacitors with Al/AlOx/Al Junctions | Lifetimes ($T_1$) exceeding 0.4 ms [24]; quality factors >10 million [24] | Optimized metal-substrate interfaces; minimized Al electrode area to reduce loss participation [24] |
| Partially Suspended Aluminum Superinductors | 87% increase in inductance [26]; improved noise robustness [26] | Reduced substrate contact minimizes dielectric loss [26]; gentler cleaning process preserves structural integrity |

What fabrication limitations currently constrain qubit performance?

Current fabrication techniques introduce several fundamental limitations that contribute to material-induced noise:

- Lift-Off Process Limitations: The traditional "lift-off" process for patterning metal structures leaves residual contamination, produces rough edges, and limits feature density. This method is considered "too dirty" for industrial-scale quantum device production. [27]

- Interface Quality: The metal-substrate interface quality, particularly for aluminum films deposited via lift-off, is often suboptimal and introduces TLS loss sources. [24]

- Structural Damage: Conventional fabrication cleaning processes can damage fragile suspended structures, reducing their effectiveness in noise mitigation. [26]

- Integration Complexity: Current systems require dense wiring and cooling structures that physically overwhelm the quantum device itself, creating scalability bottlenecks. [27]

Mitigation Strategies & Protocols

What fabrication techniques can mitigate material-induced noise?

Implementing advanced fabrication protocols can significantly reduce material-induced noise:

Protocol: Chemical Etching for Suspended Superinductors

- Fabrication: Create aluminum-based superconducting devices with partially suspended superinductors on a silicon wafer. [26]

- Etching: Use a simple chemical etching approach to selectively etch hundreds of sub-micron superinductors in specific wafer areas, leaving most of the silicon surface pristine. [26]

- Cleaning: Implement a low-temperature technique under vacuum to remove the etching mask without damaging the fragile, suspended structures. [26]

- Validation: Measure inductance increase (87% improvement demonstrated) and characterize coherence times. [26]

Protocol: Material System Optimization

- Electrode Minimization: For devices using Al/AlOx/Al Josephson junctions with Nb capacitors, minimize the area of Al electrodes since their metal-substrate interfaces tend to be less clean than directly sputtered Nb films. [24]

- Bandage Patches: Use bandage patches to further reduce the energy participation ratio in lossy interfaces. [24]

- Surface Treatment: Employ trenching and advanced surface treatments to reduce TLS density in substrate surfaces. [23]

How can I design my experiment to be more resilient to material limitations?

Strategic experimental design can help work around current fabrication limitations:

- Dynamic Decoupling: Implement pulse sequences that are less sensitive to specific noise spectra, particularly for vibration-induced errors. [24]

- Metastability Exploitation: Design algorithms to exploit the structured behavior of noise (metastability), where systems exhibit long-lived intermediate states that can be leveraged for intrinsic resilience. [28]

- Error-Adaptive Protocols: Use quantum error correction codes that protect entangled sensors, correcting errors approximately rather than perfectly to maintain sensing advantages. [8]

- Symmetric Design: Exploit symmetry properties in qubit layout and control to simplify noise characterization and mitigation through mathematical constructs like root space decomposition. [10]

The Scientist's Toolkit

Table 3: Essential Research Reagent Solutions for Material Noise Investigation

| Tool / Material | Primary Function | Application Context |
|---|---|---|
| High-Resistivity Silicon Substrates | Provides a low-loss foundation for superconducting circuits | Reducing dielectric loss from substrate interactions [24] |
| Tantalum & Niobium Sputtering Targets | Creates high-quality superconducting capacitors and ground planes | Improving interface quality and reducing TLS density [25] [24] |
| Chemical Etchants for Selective Removal | Enables creation of suspended superinductor structures | Minimizing substrate contact and dielectric loss [26] |
| Accelerometers (Cryogenic-Compatible) | Detects mechanical vibrations at millikelvin temperatures | Correlating qubit errors with pulse tube cooler operation [24] |
| Josephson Traveling Wave Parametric Amplifiers (JTWPAs) | Enables high-fidelity, multiplexed qubit readout | Simultaneous characterization of multiple qubits for correlated errors [24] |


Future Directions & Advanced Concepts

What emerging approaches address these fabrication limitations?

The field is transitioning from basic research to manufacturing-focused development:

- Cryogenic Integrated Circuits: Developing fully integrated chips that operate at cryogenic temperatures, similar to the transformation from mainframes to microchips, potentially enabling 20,000 qubits per wafer. [27]

- Advanced Deposition Techniques: Moving beyond lift-off to cleaner, more precise deposition methods that reduce residues and enable higher-density features. [27]

- Structural Design Innovations: Implementing 3D integration and advanced packaging to decouple qubits from mechanical environments. [26] [24]

- Novel Material Exploration: Investigating alternative superconducting materials and interfaces with intrinsically lower loss tangents. [25]

Diagram Title: Material Selection Logic for Noise Reduction

FAQ: Common Experimental Challenges

Why do my qubits show fluctuating lifetimes ($T_1$)?

Fluctuating $T_1$ times, particularly in longer-lived qubits (relative standard deviations up to 30%), often indicate dominant coupling to a small number of Two-Level Systems (TLS). This is characteristic of state-of-the-art qubits with very low overall loss. Allan deviation analysis can confirm TLS as the primary limitation. [24]
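Allan deviation analysis can be prototyped on a $T_1$ time series with a few lines of NumPy. The sketch below uses the simple non-overlapping estimator: for uncorrelated (white) fluctuations the Allan deviation falls as $1/\sqrt{m}$ with averaging window $m$, whereas a qubit switching between a few TLS-dominated $T_1$ values gives a deviation that stays flat or grows up to the switching time scale. Both series are synthetic; the 100 µs mean, 30 µs switching amplitude, 5 µs white noise, and switching probability are assumptions chosen only to make the contrast visible.

```python
import numpy as np

def allan_deviation(y, m):
    """Non-overlapping Allan deviation of series y for an averaging window of m samples."""
    n_blocks = len(y) // m
    block_means = y[: n_blocks * m].reshape(n_blocks, m).mean(axis=1)
    return np.sqrt(0.5 * np.mean(np.diff(block_means) ** 2))

rng = np.random.default_rng(11)
n = 100_000

# Synthetic T1 records in microseconds (assumed values).
t1_white = 100 + 5 * rng.normal(size=n)

# Telegraph-like switching between two T1 levels, mimicking one fluctuating TLS.
flips = rng.random(n) < 0.002
state = np.cumsum(flips) % 2
t1_tls = 100 + 30 * state + 5 * rng.normal(size=n)

for name, series in [("white", t1_white), ("TLS-like", t1_tls)]:
    ratio = allan_deviation(series, 64) / allan_deviation(series, 1)
    print(f"{name}: ADEV(64)/ADEV(1) = {ratio:.2f}")
```

For white noise the ratio sits near $1/\sqrt{64} = 0.125$; a ratio near or above 1 means slow, correlated fluctuations of the kind a dominant TLS produces.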

How can I distinguish material noise from control electronics noise?

Material noise typically shows different correlation structures:

- Material noise: Often manifests as correlated errors across multiple qubits, especially for quasiparticle and vibration-induced events. [24]

- Control electronics noise: Tends to affect qubits independently based on individual control lines. Signal-to-noise ratio (SNR) dependency tests can isolate control electronics contributions. [29]
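One quick way to apply this distinction is to compare the pairwise correlation of error events across qubits. The sketch below computes the mean off-diagonal Pearson correlation of per-qubit 0/1 error records with `np.corrcoef`. The synthetic data are assumptions: independent errors at 1% per shot on each of 5 qubits, versus the same background plus hypothetical chip-wide bursts (standing in for quasiparticle or phonon events) that hit all qubits in 0.5% of shots.

```python
import numpy as np

def mean_pairwise_correlation(errors):
    """errors: (shots, qubits) array of 0/1 error flags. Returns mean off-diagonal Pearson r."""
    r = np.corrcoef(errors, rowvar=False)
    off_diag = r[~np.eye(r.shape[0], dtype=bool)]
    return off_diag.mean()

rng = np.random.default_rng(5)
shots, n_qubits = 50_000, 5

# Independent errors, as expected from per-line control noise.
independent = (rng.random((shots, n_qubits)) < 0.01).astype(float)

# Hypothetical chip-wide bursts that hit every qubit in the same shot.
bursts = rng.random((shots, 1)) < 0.005
correlated = np.logical_or(independent > 0, bursts).astype(float)

print("independent:", round(mean_pairwise_correlation(independent), 3))
print("with bursts:", round(mean_pairwise_correlation(correlated), 3))
```

A mean correlation near zero is consistent with independent channels, while a clearly positive value points toward a shared, chip-level mechanism.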

My qubit performance degraded after fabrication - what happened?

Post-fabrication degradation commonly stems from:

- Surface oxidation increasing TLS density

- Structural damage from dicing or wire bonding

- Particulate contamination during packaging

- Interface degradation between material layers

Implementing gentler cleaning processes and controlled packaging environments can mitigate these issues. [26]

Are there alternatives to superconducting qubits that avoid these material issues?

Other qubit platforms have different tradeoffs:

- Trapped Ions: Offer long coherence times and high fidelity but have slower gate operations and scalability challenges. [30]

- Spin Qubits: Provide compatibility with semiconductor manufacturing but face isolation challenges from environmental noise. [30]

- Topological Qubits: Promise intrinsic fault tolerance but remain largely theoretical with significant experimental challenges. [30]

Each platform has different material constraints, and the choice depends on the specific application requirements in quantum chemistry computations.

Frequently Asked Questions (FAQs)

What is statistical uncertainty in quantum energy estimation? Statistical uncertainty is the inherent margin of error in any quantum measurement process, characterizing the dispersion of possible measured values around the true value. In quantum chemistry computations, this arises from limitations in measurement instruments, environmental noise, finite sampling, and algorithmic approximations. Unlike error (which implies a mistake), uncertainty acknowledges inherent variability even in correctly performed measurements [31] [32].

Why is achieving chemical precision particularly challenging on noisy quantum hardware? Chemical precision (1.6 × 10⁻³ Hartree) is challenging because current quantum devices face multiple noise sources that introduce statistical uncertainty. These include high readout errors (often 10⁻²), gate infidelities, limited sampling shots, and temporal noise variations. These factors collectively degrade measurement accuracy and precision, making it difficult to achieve the reliable energy estimates needed for predicting chemical reaction rates [33].

How can I determine if my energy estimation results are statistically significant? Statistical significance requires comparing your absolute error (distance from reference value) against standard error (measure of precision). If absolute errors consistently exceed 3× the standard error, systematic errors likely dominate. For robust results, implement repeated measurements, calculate both error types, and use techniques like Quantum Detector Tomography to identify and mitigate systematic biases [33].
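The comparison of absolute and standard error can be wrapped in a small helper. The sketch below assumes you have repeated, independent energy estimates in Hartree and a trusted reference value; the `k = 3` threshold follows the rule of thumb above, and chemical precision is taken as $1.6 \times 10^{-3}$ Hartree. The function name, return fields, and example numbers are illustrative, not part of any library.

```python
import numpy as np

CHEMICAL_PRECISION_HA = 1.6e-3

def assess_energy_estimates(samples, e_ref, k=3.0):
    """Compare the absolute error of the mean with its standard error."""
    samples = np.asarray(samples, dtype=float)
    mean = samples.mean()
    std_error = samples.std(ddof=1) / np.sqrt(samples.size)   # precision of the mean
    abs_error = abs(mean - e_ref)                               # accuracy vs the reference
    return {
        "mean": mean,
        "abs_error": abs_error,
        "std_error": std_error,
        "systematic_bias_suspected": abs_error > k * std_error,
        "within_chemical_precision": abs_error <= CHEMICAL_PRECISION_HA,
    }

# Hypothetical repeated runs around a reference of -1.137 Ha with a 5 mHa offset.
rng = np.random.default_rng(2)
runs = -1.137 + 0.005 + 0.001 * rng.normal(size=20)
print(assess_energy_estimates(runs, e_ref=-1.137))
```

If bias is flagged, more shots will shrink the standard error but not the absolute error, which is the cue to turn to bias-reduction techniques such as QDT.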

What is the difference between accuracy and precision in quantum metrology? In quantum metrology, accuracy reflects closeness to the true value, while precision quantifies the reproducibility/repeatability of measurements. A measurement can be precise (consistent) but inaccurate if systematic errors exist, or accurate on average but imprecise with high variability. Quantum error correction primarily improves precision, while error mitigation techniques can improve both [15].

Which quantum error correction approach is most practical for near-term energy estimation? Approximate quantum error correction provides the most practical near-term approach. Rather than perfectly correcting all errors (requiring extensive resources), it corrects dominant error patterns, making a favorable trade between perfect correction and maintaining quantum advantage for sensing. This approach protects entangled sensors more effectively against realistic noise environments [8].

Troubleshooting Guides

Problem: High Variance in Repeated Energy Measurements

Symptoms

- Inconsistent energy values across multiple measurement rounds

- Standard errors exceeding chemical precision thresholds

- Poor reproducibility of supposedly identical experiments

Solutions

- Increase Shot Count: Systematically increase measurement shots (e.g., from 10³ to 10⁵) while monitoring standard error reduction [33]

- Implement Locally Biased Random Measurements: Use Hamiltonian-inspired classical shadows to prioritize measurement settings with greatest impact on energy estimation, reducing shot overhead [33]

- Apply Blended Scheduling: Interleave different circuit types (QDT, Hamiltonian measurements) to average out temporal noise fluctuations [33]
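The benefit of more shots follows from the $1/\sqrt{N}$ scaling of the standard error, which also gives a quick estimate of the shots needed to reach chemical precision. The sketch below assumes a single estimator with a known per-shot standard deviation `sigma` in Hartree (a hypothetical value here; in practice it depends on the Hamiltonian, the state, and the measurement scheme) and ignores systematic bias, so the result is a lower bound on the real cost.

```python
import math

CHEMICAL_PRECISION_HA = 1.6e-3

def standard_error(sigma_per_shot, shots):
    """Standard error of a mean over `shots` independent single-shot estimates."""
    return sigma_per_shot / math.sqrt(shots)

def shots_for_precision(sigma_per_shot, target):
    """Smallest shot count whose standard error does not exceed `target`."""
    return math.ceil((sigma_per_shot / target) ** 2)

sigma = 0.5  # hypothetical per-shot standard deviation of the energy estimator (Ha)
for shots in (10**3, 10**4, 10**5):
    print(f"{shots:>7} shots -> standard error {standard_error(sigma, shots):.2e} Ha")
print("shots for chemical precision:", shots_for_precision(sigma, CHEMICAL_PRECISION_HA))
```

Going from 10³ to 10⁵ shots shrinks the standard error only tenfold, which is why shot-reduction strategies such as locally biased measurements matter.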

Problem: Systematic Bias in Energy Calculations

Symptoms

- Consistent over/under-estimation compared to reference values

- Absolute errors significantly larger than standard errors

- Results remain biased despite increased sampling

Solutions

- Quantum Detector Tomography (QDT): Characterize actual measurement apparatus using repeated settings to build unbiased estimators [33]

- Dynamic Circuit Capabilities: Use advanced control features (24% accuracy improvement demonstrated) to mitigate systematic calibration errors [34]

- Noise-Resilient Algorithms: Implement approaches like Observable Dynamic Mode Decomposition (ODMD) that show provable convergence even with perturbative noise [35]

Experimental Protocol: QDT for Bias Reduction

- Prepare and measure computational basis states repeatedly

- Construct noisy measurement effect matrix from results

- Perform pseudo-inverse to determine correction matrix

- Apply correction to subsequent energy measurements

- Validate with known benchmark states [33]
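A simplified version of this protocol is the familiar readout (assignment) matrix correction. Full QDT reconstructs the POVM effects of the measurement; the sketch below instead assumes the noise is captured by a classical confusion matrix whose column $j$ is the outcome distribution after preparing basis state $j$. The per-qubit flip probabilities and the Bell-state benchmark are hypothetical, and the clip-and-renormalize step is one common (slightly biased) way to handle negative quasi-probabilities produced by the pseudo-inverse.

```python
import numpy as np

def assignment_matrix(calib_counts):
    """calib_counts[j] = outcome histogram after preparing computational basis state j."""
    M = np.asarray(calib_counts, dtype=float).T      # column j <-> prepared state j
    return M / M.sum(axis=0, keepdims=True)

def mitigate(noisy_probs, M):
    """Apply the pseudo-inverse correction, then project back to a probability vector."""
    p = np.linalg.pinv(M) @ noisy_probs
    p = np.clip(p, 0.0, None)                        # remove negative quasi-probabilities
    return p / p.sum()

def one_qubit_confusion(e01, e10):
    """Columns: prepared |0>, |1>. e01 = P(read 1 | 0), e10 = P(read 0 | 1)."""
    return np.array([[1 - e01, e10], [e01, 1 - e10]])

rng = np.random.default_rng(4)
M_true = np.kron(one_qubit_confusion(0.02, 0.05), one_qubit_confusion(0.03, 0.04))

# Prepare and measure each basis state many times, then build the matrix.
shots = 1_000_000
calib = [rng.multinomial(shots, M_true[:, j]) for j in range(4)]
M_est = assignment_matrix(calib)

# Correct a noisy measurement of a Bell state (ideal probabilities 1/2, 0, 0, 1/2).
ideal = np.array([0.5, 0.0, 0.0, 0.5])
noisy = M_true @ ideal
corrected = mitigate(noisy, M_est)

zz = np.array([1, -1, -1, 1])                        # <Z x Z> eigenvalue of each outcome
print("noisy <ZZ>:", noisy @ zz, " corrected <ZZ>:", corrected @ zz)
```

The Bell state plays the role of the known benchmark in the final validation step: its corrected $\langle ZZ\rangle$ should return close to 1.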

Problem: Quantum Resource Limitations

Symptoms

- Excessive measurement time requirements

- Insufficient qubit connectivity for molecular Hamiltonians

- Circuit depth exceeding coherence times

Solutions

- Efficient Observable Estimation: Use informationally complete (IC) measurements to estimate multiple observables from same data [33]

- Error Mitigation: Leverage HPC-powered error mitigation (100× cost reduction demonstrated) instead of full error correction [34]

- Hybrid Quantum-Classical Workflows: Offload appropriate subproblems to classical resources using distributed approaches [15]

Experimental Protocols & Methodologies

Protocol 1: Molecular Energy Estimation with Chemical Precision

Objective: Estimate BODIPY molecular energies to chemical precision (1.6×10⁻³ Hartree) on noisy quantum hardware [33]

Materials:

- IBM Eagle r3 processor or equivalent

- Quantum chemistry software stack (Qiskit)

- Molecular Hamiltonian in qubit representation

Procedure:

- State Preparation: Initialize the Hartree-Fock state (requires no two-qubit gates)

- Measurement Strategy Selection:
  - Implement Hamiltonian-inspired locally biased random measurements
  - Sample S = 7×10⁴ measurement settings
  - Repeat each setting T = 8192 times

- Noise Mitigation:
  - Execute parallel Quantum Detector Tomography circuits
  - Apply blended scheduling to interleave circuits

- Data Processing:
  - Construct unbiased estimators using QDT results
  - Apply shot-efficient post-processing
  - Calculate both absolute and standard errors

Validation: Compare against classical computational chemistry methods for equivalent active spaces [33]
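The estimator behind Hamiltonian-inspired locally biased random measurements can be illustrated classically. In the sketch below, each qubit is measured in X, Y, or Z with probabilities $\beta_q$ weighted by how strongly that Pauli appears in the Hamiltonian, and each Pauli term is estimated by reweighting matching outcomes by $1/\beta_q$, which keeps the estimator unbiased. Several parts are assumptions for illustration: the weighting rule is a simple heuristic rather than the optimized distribution used in the literature, the state is a product state with known Bloch vectors so that exact sampling is trivial, and a fresh setting is drawn for every shot instead of repeating each of $S$ settings $T$ times.

```python
import numpy as np

PAULI_INDEX = {"X": 0, "Y": 1, "Z": 2}

def biased_distribution(terms, n_qubits, floor=1e-3):
    """Heuristic per-qubit basis probabilities beta[q, P], weighted by |coefficients|."""
    beta = np.full((n_qubits, 3), floor)
    for coeff, pauli in terms:
        for q, label in enumerate(pauli):
            if label != "I":
                beta[q, PAULI_INDEX[label]] += abs(coeff)
    return beta / beta.sum(axis=1, keepdims=True)

def sample_product_state(bloch, beta, shots, rng):
    """Random bases per shot and qubit, with +/-1 outcomes for a product state."""
    n = bloch.shape[0]
    bases = np.stack([rng.choice(3, size=shots, p=beta[q]) for q in range(n)], axis=1)
    means = bloch[np.arange(n), bases]       # <P_b> of each qubit in its chosen basis
    outcomes = np.where(rng.random((shots, n)) < (1 + means) / 2, 1.0, -1.0)
    return bases, outcomes

def estimate_energy(terms, bases, outcomes, beta):
    """Unbiased locally biased shadow estimate of sum_j c_j <P_j>."""
    energy = 0.0
    for coeff, pauli in terms:
        contrib = np.ones(len(bases))
        for q, label in enumerate(pauli):
            if label == "I":
                continue
            k = PAULI_INDEX[label]
            contrib *= (bases[:, q] == k) * outcomes[:, q] / beta[q, k]
        energy += coeff * contrib.mean()
    return energy

def exact_energy(terms, bloch):
    return sum(c * np.prod([bloch[q, PAULI_INDEX[l]] for q, l in enumerate(p) if l != "I"])
               for c, p in terms)

# Hypothetical 3-qubit Hamiltonian and product state (one Bloch vector per qubit).
terms = [(-1.0, "ZZI"), (0.5, "IXX"), (0.3, "ZIZ"), (0.2, "YII")]
bloch = np.array([[0.0, 0.0, 1.0], [0.6, 0.0, 0.8], [0.8, 0.0, 0.6]])

rng = np.random.default_rng(8)
beta = biased_distribution(terms, n_qubits=3)
bases, outcomes = sample_product_state(bloch, beta, shots=200_000, rng=rng)
print(estimate_energy(terms, bases, outcomes, beta), exact_energy(terms, bloch))
```

Biasing toward the bases that carry large coefficients lowers the variance of the dominant terms; the small `floor` keeps every basis possible, which the $1/\beta$ reweighting requires.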

Protocol 2: Noise-Resilient Metrology with Quantum Computing

Objective: Enhance measurement accuracy and precision using quantum processor assistance [15]

Materials:

- Quantum sensor (NV centers in diamond or superconducting qubits)

- Secondary quantum processor for information extraction

- Quantum state transfer capabilities

Procedure:

- Sensor Initialization: Prepare entangled probe state (e.g., GHZ state)

- Parameter Encoding: Expose to target field for time t, imprinting phase ϕ = ωt

- Noisy Evolution: Allow realistic environmental interactions, modeled as the phase-encoding unitary U_ϕ followed by a noise channel Λ (i.e., Λ∘U_ϕ)

- State Transfer: Move noise-affected state to quantum processor

- Quantum Processing: Apply quantum Principal Component Analysis (qPCA)

- Information Extraction: Measure dominant components for noise-resilient estimation

Validation: Quantify improvement via quantum Fisher information and fidelity metrics [15]
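What the qPCA step extracts can be emulated classically for a small sensor. The sketch below assumes a 3-qubit GHZ probe that acquires the phase $n\phi$, a global depolarizing channel standing in for $\Lambda$, and exact diagonalization in place of the quantum PCA routine itself (which on hardware would act on copies of the noisy state). Under this noise model the dominant eigenvector coincides with the ideal encoded state, so the phase can be read off it even though the fidelity of the mixed state is low; other noise models will not be this forgiving.

```python
import numpy as np

def ghz_with_phase(n, phi):
    """(|0...0> + e^{i n phi} |1...1>) / sqrt(2): GHZ probe after phase encoding."""
    psi = np.zeros(2**n, dtype=complex)
    psi[0] = 1 / np.sqrt(2)
    psi[-1] = np.exp(1j * n * phi) / np.sqrt(2)
    return psi

def depolarize(rho, p):
    """Global depolarizing channel (assumed noise model for Lambda)."""
    d = rho.shape[0]
    return (1 - p) * rho + p * np.eye(d) / d

def principal_component(rho):
    """Largest eigenvalue and its eigenvector, the component qPCA would distil."""
    w, v = np.linalg.eigh(rho)
    return w[-1], v[:, -1]

n, phi, p = 3, 0.3, 0.6                # hypothetical qubit count, phase, noise strength
psi = ghz_with_phase(n, phi)
rho_noisy = depolarize(np.outer(psi, psi.conj()), p)

fid_noisy = np.real(psi.conj() @ rho_noisy @ psi)
weight, v = principal_component(rho_noisy)
fid_pc = abs(np.vdot(psi, v)) ** 2
phi_est = np.angle(v[-1] / v[0]) / n   # the arbitrary global phase of v cancels in the ratio

print(f"fidelity noisy={fid_noisy:.3f}, principal component={fid_pc:.3f}, phi_est={phi_est:.4f}")
```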

Performance Data

Table 1: Error Reduction in Molecular Energy Estimation [33]

| Technique | Qubit Count | Initial Error | Final Error | Reduction Factor |
|---|---|---|---|---|
| QDT + Blended Scheduling | 8 | 1-5% | 0.16% | 12-31× |
| Locally Biased Measurements | 12 | 3.2% | 0.42% | 7.6× |
| Combined Methods | 16 | 4.1% | 0.28% | 14.6× |
| Full Protocol (BODIPY-4) | 8-28 | 2-5% | 0.16-0.45% | 10-20× |

Table 2: Quantum Metrology Enhancement with qPCA [15]

| Metric | Noisy State | After qPCA | Improvement |
|---|---|---|---|
| Accuracy (Fidelity) | 0.32 | 0.94 | 200× |
| Precision (QFI, dB) | 15.2 | 68.19 | +52.99 dB |
| Heisenberg Limit Proximity | 28% | 89% | 3.2× closer |

Research Reagent Solutions

Table 3: Essential Materials for Noise-Resilient Energy Estimation

| Resource | Function | Example Implementation |
|---|---|---|
| IBM Quantum Nighthawk | 120-qubit processor with 218 tunable couplers for complex circuits | Enables 5,000 two-qubit gates for molecular simulations [34] |
| IQM Halocene System | 150-qubit system specialized for error correction research | Supports logical qubit experiments and QEC code development [36] |
| Qiskit with HPC Integration | Quantum software with classical computing interfaces | Enables 100× error mitigation cost reduction [34] |
| Quantum Detector Tomography Kit | Characterizes and corrects measurement apparatus | Reduces readout errors from 1-5% to 0.16% [33] |
| NV-Center Quantum Sensors | Room-temperature quantum sensing platform | Validates noise-resilient metrology approaches [15] [37] |

Noise-Resilience Engineering: Algorithmic and Hardware Breakthroughs

Troubleshooting Guides

Guide 1: Addressing Post-Fabrication Device Damage and Low Yield

Problem: Suspended superinductor structures are fracturing or collapsing after the fabrication process.

| Possible Cause | Diagnostic Steps | Solution |
|---|---|---|
| Stress from Etchant Surface Tension | Inspect devices under SEM for structural failure; check yield across wafer. | Replace solvent-based resist removal with a low-temperature oxygen ashing process to eliminate destructive surface tension [38]. |
| Inadequate Etch Mask Protection | Review mask design; check if non-suspended components (e.g., Nb ground planes) are being etched. | Implement a lithographically defined, selective etch mask to protect fragile and incompatible structures, leaving most of the silicon substrate pristine [38] [39]. |
| Mechanical Strain from Film Stress | Pre-characterize film stress; observe if released structures curl or deform. | Optimize deposition parameters for the Al-AlOx-Al Josephson junction layers to minimize intrinsic strain before the sacrificial release [38]. |

Guide 2: Correcting Performance Issues in Suspended Superinductors

Problem: Fabricated suspended superinductors show lower-than-expected inductance or increased energy loss (low quality factor).

| Possible Cause | Diagnostic Steps | Solution |
|---|---|---|
| Unintended Substrate Coupling | Compare measured device capacitance to designed values; a smaller-than-expected reduction suggests incomplete suspension. | Optimize the XeF2 silicon etching time and flow rate to ensure the JJ array is fully released and suspended above the substrate [38]. |
| Resist Contamination | Perform surface analysis (e.g., XPS) on suspended structures for residual organics. | Ensure the oxygen ashing process that removes the etch mask is thorough and does not leave carbonaceous residue on the fragile elements [38] [26]. |
| Native Oxidation or Contamination | Measure loss tangents of test resonators; high loss indicates surface dielectric loss. | Maintain high vacuum after release and implement in-house developed wafer cleaning methods before and during fabrication [38]. |

Frequently Asked Questions (FAQs)

FAQ 1: What is the primary quantum computational advantage of suspending a superinductor?

Suspending the superinductor drastically reduces its stray capacitance to the substrate. This reduction is pivotal for developing more robust qubits like the fluxonium, as it allows the superinductor to achieve a higher impedance, a key requirement for protecting qubits from decoherence [40] [38] [39].
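The link between lower stray capacitance and higher impedance follows from the lumped-element estimate $Z = \sqrt{L/C}$, usually compared against the superconducting resistance quantum $R_Q = h/(2e)^2 \approx 6.45\ \mathrm{k\Omega}$ (a superinductance has $Z > R_Q$). The sketch below uses the 0.91 nH per-junction inductance reported for the on-substrate superinductor [38]; the junction count and both stray-capacitance values are hypothetical, chosen only to show the scaling $Z \propto C^{-1/2}$. Treating the whole array as one lumped inductor is itself a simplification.

```python
import math

H_PLANCK = 6.62607015e-34             # J s (exact SI value)
E_CHARGE = 1.602176634e-19            # C (exact SI value)
R_Q = H_PLANCK / (2 * E_CHARGE) ** 2  # superconducting resistance quantum, ~6.45 kOhm

def lumped_impedance(n_junctions, l_per_junction, c_stray):
    """Z = sqrt(L/C) for a junction array treated as a single lumped inductor."""
    return math.sqrt(n_junctions * l_per_junction / c_stray)

L_J = 0.91e-9                         # H per junction (reported on-substrate value)
N = 100                               # hypothetical number of junctions in the array
for c_stray in (10e-15, 2e-15):       # hypothetical stray capacitance: on-substrate vs suspended
    z = lumped_impedance(N, L_J, c_stray)
    print(f"C = {c_stray * 1e15:.0f} fF -> Z = {z / 1e3:.2f} kOhm ({z / R_Q:.2f} R_Q)")
```

Cutting the stray capacitance fivefold raises the impedance by $\sqrt{5}$ at fixed inductance, enough in this example to cross $R_Q$.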

FAQ 2: How does this selective suspension technique improve upon previous methods?

Earlier methods involved etching the entire chip substrate, which could introduce loss to other components and was incompatible with materials like Niobium (Nb) used in high-quality resonators and ground planes. This new technique uses a lithographic mask to etch and suspend only specific components (like JJ arrays), preserving the integrity of the rest of the chip and enabling the use of a wider range of materials [38].

FAQ 3: What quantitative performance improvement can be expected?

In validation experiments, the suspended superinductor fabrication process resulted in an 87% increase in inductance compared to conventional, non-suspended components. Furthermore, the energy relaxation times of the resulting suspended qubits and resonators are on par with the state-of-the-art, confirming the high quality of the fabricated elements [39] [41] [26].

FAQ 4: Is this fabrication process scalable for quantum processors?

Yes. The process is designed for wafer-scale fabrication on 6-inch silicon wafers, making it compatible with the production of large-scale quantum processors. It integrates suspended structures into a broader fabrication flow that includes other essential components [38] [26].

FAQ 5: How does reducing substrate noise benefit quantum chemistry computations?

Reducing noise directly translates to longer qubit coherence times and higher-fidelity quantum gates. This is critical for running complex quantum algorithms, such as quantum phase estimation for molecular energy calculations, where computational accuracy is directly tied to the low-error execution of deep quantum circuits [39] [41].

Experimental Protocols

Protocol: Fabrication of Superconducting Circuits with Selectively Suspended Superinductors

This protocol details the methodology for creating planar superconducting circuits with suspended Josephson Junction (JJ) arrays, as validated in recent studies [38].

1. Substrate and Ground Plane Preparation

- Begin with a 6-inch intrinsic silicon wafer.

- Clean the wafer using established methods to ensure a pristine surface [38].

- Fabricate the ground plane and coplanar waveguide structures from Niobium (Nb) via standard lithography and deposition techniques.

2. Josephson Junction Array Fabrication

- Define the superinductor pattern using electron-beam or optical lithography.

- Use a double-angle evaporation process (the Dolan bridge technique) to deposit Al-AlOx-Al and form the array of Josephson junctions [38].

3. Selective Etching and Suspension

- Lithographically define a protective etch mask over the entire wafer. The mask's critical feature is an opening that exposes only the areas where the JJ array is to be suspended, protecting all other components [38].

- Expose the wafer to XeF2, a vapor-phase silicon etchant. The etchant selectively removes silicon from the unmasked areas, undercutting and releasing the JJ array, which then lifts and suspends due to released strain [38].

- Remove the protective etch mask using a gentle oxygen ashing process to avoid damaging the newly suspended, fragile structures [38].

Table 1: Performance Comparison of Superinductor Configurations

| Parameter | On-Substrate Superinductor | Suspended Superinductor | Measurement Context |
|---|---|---|---|
| Inductance per Junction | 0.91 nH | Data implies significantly higher | Derived from room temperature probing [38] |
| Overall Inductance Increase | Baseline | +87% | Comparison of fabricated devices [39] [41] |
| Impedance (R) | -- | > 200 kΩ | "Hyperinductance regime" [38] |
| Qubit/Resonator Coherence | State-of-the-art | On par with state-of-the-art | Validation via qubit and resonator characterization [38] |

The Scientist's Toolkit

Table 2: Essential Materials and Reagents for Selective Suspension Fabrication

| Item | Function / Role in the Protocol |
|---|---|
| Intrinsic Silicon Wafer | The primary substrate for fabricating the planar superconducting circuits. |
| Niobium (Nb) | Used for the ground plane and coplanar waveguide resonators due to its high quality factor; protected from etchants by the mask [38]. |
| Aluminum (Al) | The superconducting metal used to create the Josephson junctions via double-angle evaporation [38]. |
| XeF2 (Xenon Difluoride) | A vapor-phase, isotropic silicon etchant. It selectively removes silicon to suspend the JJ arrays without damaging the metal structures [38]. |
| Photoresist for Etch Mask | A lithographically patterned layer that defines which areas of the silicon substrate are exposed to the XeF2 etchant, enabling selective suspension [38]. |


Workflow and Signaling Diagrams

Fabrication Workflow for Suspended Superinductors

Performance Advantage of Suspended Superinductors

Frequently Asked Questions (FAQs)

Q1: What is an error-mitigating Ansatz and how does it differ from a standard variational Ansatz? An error-mitigating Ansatz is a parameterized wavefunction design that incorporates specific features to make quantum computations more resilient to the noise present on near-term quantum hardware. While a standard variational Ansatz, like tUCCSD, focuses solely on representing the quantum state, an error-mitigating Ansatz is co-designed with noise suppression and mitigation strategies. This can include intrinsic properties that reduce sensitivity to errors, or it is used in conjunction with post-processing techniques like Pauli error reduction and measurement error mitigation to recover accurate expectation values from noisy quantum circuits [42] [43].

Q2: My quantum linear response (qLR) calculations are yielding unstable excitation energies. What could be the cause? Unstable results in qLR are frequently caused by the combined effect of shot noise and hardware noise, which corrupts the generalized eigenvalue problem. To address this:

- Increase Sampling: The foundational step is to increase the number of measurement shots ("Pauli saving") to reduce the inherent statistical shot noise [42] [43].

- Employ Error Mitigation: Apply Ansatz-based read-out error mitigation. This involves characterizing the noise model associated with your specific Ansatz and device, then using this information to correct the measured expectation values [42] [43].

- Leverage Classical Processing: Use techniques like the double factorization of the two-electron integral tensor. This groups Hamiltonian terms into a linear number of measurable fragments, drastically reducing the number of unique circuits and the associated cumulative noise from repeated executions [44].
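To illustrate why shot noise destabilizes qLR, the toy sketch below perturbs a small Hessian and metric with Gaussian noise whose scale shrinks as $1/\sqrt{\text{shots}}$, then solves $E^{[2]}x = \omega S^{[2]}x$. It assumes a simplified symmetric, positive-definite problem (the full qLR problem has paired $\pm\omega$ roots); the matrices, the noise model, and the function names are illustrative assumptions, not hardware data.

```python
import numpy as np

def excitation_energies(E2, S2):
    """Eigenvalues omega of E2 x = omega S2 x for symmetric E2 and positive-definite S2."""
    L = np.linalg.cholesky(S2)                  # S2 = L @ L.T
    Linv = np.linalg.inv(L)
    M = Linv @ E2 @ Linv.T                      # same eigenvalues as the generalized problem
    return np.linalg.eigvalsh(0.5 * (M + M.T))  # ascending order

def add_noise(matrix, shots, rng):
    """Add symmetric Gaussian noise whose scale shrinks as 1/sqrt(shots)."""
    noise = rng.normal(scale=1.0 / np.sqrt(shots), size=matrix.shape)
    return matrix + 0.5 * (noise + noise.T)

def spread(E2, S2, shots, trials=500, seed=0):
    """Standard deviation of each excitation energy over repeated noisy estimates."""
    rng = np.random.default_rng(seed)
    samples = [excitation_energies(add_noise(E2, shots, rng), add_noise(S2, shots, rng))
               for _ in range(trials)]
    return np.std(samples, axis=0)

E2 = np.array([[0.50, 0.05], [0.05, 0.80]])  # toy Hessian (illustrative numbers)
S2 = np.array([[1.00, 0.10], [0.10, 1.00]])  # toy metric (illustrative numbers)
print(excitation_energies(E2, S2))
for shots in (1_000, 100_000):
    print(shots, spread(E2, S2, shots))
```

The scatter of the excitation energies shrinks roughly in proportion to the noise scale, which is why increasing the shot budget is the first remedy listed above.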

Q3: How can I reduce the measurement overhead for complex molecules like BODIPY on noisy hardware? For large molecules, measurement overhead is a primary bottleneck. Effective strategies include:

- Locally Biased Random Measurements: This technique prioritizes measurement settings that have a larger impact on the final energy estimation, reducing the number of shots required while maintaining high precision [33].

- Informationally Complete (IC) Measurements: IC measurements allow you to estimate multiple observables from the same set of data. This is particularly beneficial for measurement-intensive algorithms like qEOM and ADAPT-VQE [33].

- Parallel Quantum Detector Tomography (QDT): Perform QDT alongside your main experiment to characterize and mitigate readout errors in post-processing, significantly reducing estimation bias [33].
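The estimator behind locally biased random measurements fits in a few lines. Each shot measures every qubit in a randomly drawn Pauli basis, with qubit-specific probabilities $\beta_i$. Any Pauli string is then estimated from the same snapshots by keeping the shots whose bases match and reweighting by $1/\beta_i$, which is what makes one data set reusable for many observables. This is a minimal sketch assuming a simulated product state and hand-picked (not optimized) biases; the function names and coefficients are hypothetical.

```python
import numpy as np

PAULIS = "XYZ"

def simulate_snapshots(bloch, beta, n_shots, rng):
    """Simulate random single-qubit Pauli-basis measurements of a product state.

    bloch[i] = (r_x, r_y, r_z) is qubit i's Bloch vector; beta[i] = (P(X), P(Y), P(Z))
    is the (possibly biased) distribution over measurement bases for qubit i.
    """
    n = len(bloch)
    bases = np.empty((n_shots, n), dtype=int)
    outcomes = np.empty((n_shots, n), dtype=int)
    for i in range(n):
        bases[:, i] = rng.choice(3, size=n_shots, p=beta[i])
        r = np.asarray(bloch[i])[bases[:, i]]   # Bloch component along the chosen axis
        p_plus = (1.0 + r) / 2.0                 # probability of the +1 outcome
        outcomes[:, i] = np.where(rng.random(n_shots) < p_plus, 1, -1)
    return bases, outcomes

def estimate_pauli(pauli, bases, outcomes, beta):
    """Unbiased estimate of <P> for a Pauli string like 'ZX' (character i acts on qubit i)."""
    est = np.ones(bases.shape[0])
    for i, p in enumerate(pauli):
        if p == "I":
            continue
        k = PAULIS.index(p)
        # A shot contributes only if qubit i was measured in the matching basis;
        # dividing by beta[i][k] compensates for how often that basis is drawn.
        est *= np.where(bases[:, i] == k, outcomes[:, i] / beta[i][k], 0.0)
    return est.mean()

# Toy 2-qubit example: qubit 0 in |0>, qubit 1 in |+>.
bloch = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]
hamiltonian = {"ZI": 0.5, "IX": 0.3, "ZX": 0.2}   # illustrative; exact <H> = 1.0
beta = [(0.1, 0.1, 0.8), (0.8, 0.1, 0.1)]        # bias toward bases that appear in H
rng = np.random.default_rng(1)
bases, outcomes = simulate_snapshots(bloch, beta, 20_000, rng)
energy = sum(c * estimate_pauli(p, bases, outcomes, beta) for p, c in hamiltonian.items())
print(round(energy, 3))
```

All three terms are estimated from the same 20,000 snapshots; biasing each qubit toward the bases that dominate the Hamiltonian lowers the variance compared with uniform $\beta_i = (1/3, 1/3, 1/3)$.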

Q4: Can I use error-mitigating Ansätze for applications beyond ground-state energy calculation? Yes. The quantum linear response (qLR) and equation-of-motion (qEOM) frameworks are built on top of a variationally obtained ground state from an Ansatz like oo-tUCCSD. These frameworks are specifically designed to compute molecular spectroscopic properties, such as absorption spectra, by accessing excited-state information. Proof-of-principle demonstrations, such as obtaining the absorption spectrum of LiH using a triple-zeta basis set, have been performed on real quantum hardware [42] [43].

Troubleshooting Guides

Issue 1: High Variance in Energy Estimation

Problem: The estimated energy of your molecular ground state has a high variance across multiple runs, making it difficult to converge the VQE optimization.

Diagnosis: This is typically caused by a combination of shot noise (insufficient measurements) and hardware readout noise [33].

Resolution:

- Implement "Pauli Saving": Analyze the Hamiltonian and identify groups of Pauli operators that can be measured simultaneously. Focus your shot allocation on the groups with the largest coefficients (largest $|\omega_\ell|$ in the Hamiltonian $H = \sum_\ell \omega_\ell P_\ell$) to reduce the overall variance more efficiently [42] [43].

- Apply Readout Error Mitigation: Use techniques like Quantum Detector Tomography (QDT). By characterizing the readout error matrix of your device, you can construct an unbiased estimator that significantly reduces the systematic error in your energy measurements [33].

- Use Efficient Hamiltonian Grouping: Adopt the Basis Rotation Grouping strategy based on a low-rank factorization of the Hamiltonian [44]:

$$H = U_0 \Big(\sum_p g_p n_p\Big) U_0^\dagger + \sum_{\ell=1}^{L} U_\ell \Big(\sum_{pq} g_{pq}^{(\ell)} n_p n_q\Big) U_\ell^\dagger$$

This method reduces the number of distinct measurement circuits from $O(N^4)$ to $O(N)$, and the measured operators ($n_p$, $n_p n_q$) are local, making them less susceptible to readout errors that grow with operator weight [44].
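A minimal sketch of the coefficient-weighted shot allocation described in the first bullet, assuming each term is measured on its own and has variance at most 1. Under that bound, allocating $m_\ell \propto |\omega_\ell|$ minimizes $\sum_\ell \omega_\ell^2 / m_\ell$ for a fixed budget. This is the textbook rule, not necessarily the exact Pauli saving procedure of [42] [43]; the coefficients and function names are illustrative.

```python
import numpy as np

def allocate_shots(coeffs, total_shots):
    """Split a shot budget across terms in proportion to |omega_l| (at least one shot each)."""
    w = np.abs(np.asarray(coeffs, dtype=float))
    return np.maximum(1, np.floor(total_shots * w / w.sum()).astype(int))

def variance_bound(coeffs, shots):
    """Upper bound on Var(<H>) = sum_l omega_l^2 Var(P_l) / m_l, using Var(P_l) <= 1."""
    c = np.asarray(coeffs, dtype=float)
    return float(np.sum(c**2 / np.asarray(shots, dtype=float)))

coeffs = [-1.05, 0.39, 0.39, -0.01, 0.18]   # illustrative Pauli coefficients omega_l
budget = 10_000
uniform = np.full(len(coeffs), budget // len(coeffs))
weighted = allocate_shots(coeffs, budget)
print(weighted, variance_bound(coeffs, uniform), variance_bound(coeffs, weighted))
```

Flooring keeps the total at or under the budget; the one-shot minimum can push it slightly over when many coefficients are tiny.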

Issue 2: Unphysical Results Due to Symmetry Violation

Problem: The computed wavefunction violates expected physical symmetries, such as particle number or spin, leading to unphysical properties.

Diagnosis: Quantum noise can break the symmetries of the simulated molecule during the evolution of the quantum circuit [44].

Resolution:

- Post-Selection on Symmetries: After preparing your state and performing measurements in the computational basis, you can classically post-select only those measurement outcomes (bitstrings) that correspond to the correct particle number and $S_z$ spin value. This projects the noisy state back into the correct symmetry sector [44].

- Incorporate Symmetry into the Ansatz: Design your Ansatz to be symmetry-preserving by construction. For example, the tUCCSD Ansatz is built from fermionic excitation operators that naturally conserve particle number [43].
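Symmetry post-selection amounts to a filter over measured bitstrings. The sketch assumes a Jordan-Wigner encoding with interleaved spin orbitals (even qubits alpha, odd qubits beta) and that character $i$ of each bitstring is the occupation of qubit $i$. Qiskit reports counts with qubit 0 as the rightmost character, so such strings would need reversing first. The function names and counts are hypothetical.

```python
def symmetry_sector(bitstring):
    """Return (N, 2*Sz) for a bitstring whose character i is the occupation of qubit i.

    Assumes a Jordan-Wigner mapping with interleaved spin orbitals:
    even qubit indices hold alpha electrons, odd indices hold beta electrons.
    """
    n_alpha = sum(int(b) for b in bitstring[0::2])
    n_beta = sum(int(b) for b in bitstring[1::2])
    return n_alpha + n_beta, n_alpha - n_beta

def postselect(counts, n_electrons, two_sz):
    """Keep only outcomes in the target (N, 2*Sz) sector; also return the kept fraction."""
    kept = {b: c for b, c in counts.items() if symmetry_sector(b) == (n_electrons, two_sz)}
    return kept, sum(kept.values()) / sum(counts.values())

# Toy counts for a 2-electron, Sz = 0 problem on 4 spin orbitals (illustrative numbers).
counts = {"1100": 900, "1000": 40, "1110": 30, "0110": 30}
kept, fraction = postselect(counts, n_electrons=2, two_sz=0)
print(kept, fraction)
```

The kept fraction is also a useful diagnostic: a rapidly falling fraction as circuits get deeper signals that noise, rather than the Ansatz, dominates the result.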

Issue 3: Algorithm Instability on Specific Hardware

Problem: An algorithm that works well in noiseless simulation fails to produce meaningful results on a specific quantum processing unit (QPU), even with standard error mitigation.

Diagnosis: The algorithm may be particularly vulnerable to the unique spatio-temporal noise correlations of that specific device [10].

Resolution:

- Characterize Spatio-Temporal Noise: Use advanced noise characterization frameworks, like the one developed by Johns Hopkins APL, which uses root space decomposition to classify how noise propagates through the system over time and across multiple qubits. This helps identify the most significant error sources [10].

- Exploit Symmetry for Error Correction: Design your initial entangled sensor state (e.g., your Ansatz) using a family of quantum error correction codes. These codes can be tailored to protect against the specific type of noise identified on your hardware, making the computation robust even if some qubits experience errors. This approach trades a small amount of sensitivity for greatly enhanced resilience [7].

- Adopt a Noise-Agnostic Mitigation Model: Train a neural model, such as the Data Augmentation-empowered Error Mitigation (DAEM) model, on noisy data from fiducial processes run on the same hardware. This model can learn to reverse the effect of the device's noise without requiring prior knowledge of the exact noise model, making it highly versatile and hardware-adaptive [45].

Experimental Protocols & Data

Protocol 1: Ansatz-Based Read-Out Error Mitigation for qLR

This protocol outlines how to correct for errors in the measurement (read-out) process when using a specific Ansatz.

Objective: To mitigate read-out errors in the expectation values used to construct the quantum linear response (qLR) matrices [42] [43].

Procedure:

- State Preparation: Prepare the ground state $|\psi(\theta)\rangle$ using your chosen parameterized Ansatz (e.g., oo-tUCCSD) on the quantum computer.

- Noisy Measurement: For each Pauli string $P$ required for the qLR Hessian ($E^{[2]}$) and metric ($S^{[2]}$) matrices, measure the expectation value $\langle P\rangle_{\text{noisy}}$ on the hardware.

- Error Characterization: For the same Ansatz $|\psi(\theta)\rangle$, characterize the read-out error probability matrix $A$ for the relevant qubits. Its element $A_{ji}$ gives the probability that a prepared computational basis state $|i\rangle$ is measured as $|j\rangle$.

- Error Mitigation: Invert the error matrix $A$ and apply it to the vector of noisy outcome probabilities, $\mathbf{p}_{\text{corrected}} = A^{-1}\mathbf{p}_{\text{noisy}}$, then evaluate $\langle P\rangle_{\text{corrected}}$ from the corrected distribution.
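The inversion step of this protocol can be sketched with NumPy. The sketch assumes an uncorrelated (tensor-product) readout model with hypothetical flip probabilities and a measurement in the $Z$ basis; the Ansatz-based characterization of $A$ in [42] [43] may build the matrix differently. `np.linalg.solve` is used in place of an explicit inverse, which gives the same result more stably.

```python
import numpy as np
from functools import reduce

def single_qubit_confusion(p01, p10):
    """A[j, i] = P(read j | prepared i); p01 = P(read 1 | prep 0), p10 = P(read 0 | prep 1)."""
    return np.array([[1.0 - p01, p10],
                     [p01, 1.0 - p10]])

def full_confusion(per_qubit):
    """Uncorrelated (tensor-product) readout model; qubit 0 is the most significant bit."""
    return reduce(np.kron, per_qubit)

def z_string_expectation(probs):
    """<Z x Z x ... x Z> from a probability vector over computational basis states."""
    signs = np.array([(-1) ** bin(k).count("1") for k in range(len(probs))])
    return float(signs @ probs)

A = full_confusion([single_qubit_confusion(0.02, 0.05)] * 2)  # hypothetical error rates
p_ideal = np.array([0.5, 0.0, 0.0, 0.5])   # Bell-state statistics, <ZZ> = 1
p_noisy = A @ p_ideal                       # what the device would report
p_corrected = np.linalg.solve(A, p_noisy)   # equivalent to A^{-1} p_noisy
print(z_string_expectation(p_noisy), z_string_expectation(p_corrected))
```

With finite shots the corrected vector can contain small negative entries; a common remedy is to project it back onto the probability simplex before computing expectation values.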

Table 1: Key Components for Ansatz-Based Read-Out Error Mitigation

| Component | Description | Function in Protocol |
|---|---|---|
| oo-tUCCSD Ansatz | Orbital-optimized, trotterized Unitary Coupled Cluster with Singles and Doubles [43]. | Provides the parameterized wavefunction $\lvert\psi(\theta)\rangle$ whose properties are being measured. |
| Pauli String $P$ | A tensor product of single-qubit Pauli operators [42]. | The observable whose expectation value is being measured. |
| Read-Out Error Matrix $A$ | A stochastic matrix that models classical bit-flip probabilities during qubit measurement [42]. | Characterizes the device-specific noise to be corrected. |

Protocol 2: Noise-Resilient Measurement via Hamiltonian Factorization

This protocol uses a classical decomposition of the molecular Hamiltonian to drastically reduce measurement cost and noise sensitivity [44].

Objective: To efficiently and robustly measure the energy expectation value of a prepared quantum state.

Procedure:

- Hamiltonian Factorization: On a classical computer, perform a double factorization of the electronic structure Hamiltonian to express it in the form:

$$H = U_0 \Big(\sum_p g_p n_p\Big) U_0^\dagger + \sum_{\ell=1}^{L} U_\ell \Big(\sum_{pq} g_{pq}^{(\ell)} n_p n_q\Big) U_\ell^\dagger$$

- State Preparation: Prepare your Ansatz state $|\psi\rangle$ on the quantum processor.

- Basis Rotation and Measurement: For each fragment $\ell$ (including $\ell = 0$):
  a. Apply the basis rotation circuit $U_\ell^\dagger$ to the state $|\psi\rangle$, so that the fragment's operator becomes diagonal in the computational basis.
  b. Measure all qubits in the computational basis to sample from the probability distribution of the number operators $n_p$ and products $n_p n_q$.
  c. Classically compute the expectation values $\langle n_p\rangle_\ell$ and $\langle n_p n_q\rangle_\ell$ from the sampled bitstrings.

- Energy Estimation: Reconstruct the total energy on the classical computer using:

$$\langle H\rangle = \sum_p g_p \langle n_p\rangle_0 + \sum_{\ell=1}^{L} \sum_{pq} g_{pq}^{(\ell)} \langle n_p n_q\rangle_\ell$$
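The classical energy-reconstruction step of this protocol can be sketched as follows. It assumes the factorization coefficients $g_p$ and $g_{pq}^{(\ell)}$ are already available from a classical chemistry package, and that each fragment's samples are 0/1 occupation bitstrings measured after rotating the state into that fragment's basis. The function names and toy numbers are hypothetical.

```python
import numpy as np

def occupation_moments(samples):
    """From (n_shots, N) 0/1 occupations, return <n_p> (length N) and <n_p n_q> (N x N)."""
    s = np.asarray(samples, dtype=float)
    return s.mean(axis=0), s.T @ s / s.shape[0]

def double_factorized_energy(g0, g_two, samples_by_fragment):
    """<H> = sum_p g_p <n_p>_0 + sum_l sum_pq g_pq^(l) <n_p n_q>_l.

    g0: (N,) one-body coefficients; g_two: list of (N, N) arrays for l = 1..L;
    samples_by_fragment: L + 1 sample arrays, index 0 for the one-body fragment.
    """
    if len(samples_by_fragment) != len(g_two) + 1:
        raise ValueError("need one sample set per fragment, including l = 0")
    n0, _ = occupation_moments(samples_by_fragment[0])
    energy = float(np.dot(g0, n0))
    for g_l, samples in zip(g_two, samples_by_fragment[1:]):
        _, nn = occupation_moments(samples)
        energy += float(np.sum(g_l * nn))
    return energy

g0 = np.array([-1.0, 0.5])                     # toy one-body coefficients g_p
g_two = [np.array([[0.2, 0.1], [0.1, 0.3]])]   # toy g_pq^(1) for a single fragment
samples = [
    np.array([[1, 0]] * 4),                     # fragment 0 bitstrings
    np.array([[1, 1], [1, 0], [1, 1], [1, 0]]), # fragment 1 bitstrings
]
energy = double_factorized_energy(g0, g_two, samples)
print(energy)
```

Only 1- and 2-local occupation statistics are needed per fragment, so the same bitstrings can also be post-selected on particle number before the moments are computed.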

Table 2: Benefits of Basis Rotation Grouping Measurement Strategy

| Metric | Naive Measurement | Basis Rotation Grouping [44] |
|---|---|---|
| Number of Term Groupings | $O(N^4)$ | $O(N)$ (cubic reduction) |
| Operator Locality | Up to $N$-local (non-local) | 1- and 2-local (local) |
| Readout Error Sensitivity | Exponential in $N$ | Constant (mitigated) |
| Example: Total Measurements | Astronomically large | Up to 1000x reduction for large systems |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Components for Noise-Resilient Quantum Chemistry Experiments

| Tool / Component | Function | Example Use-Case |
|---|---|---|
| Orbital-Optimized VQE (oo-VQE) | A hybrid algorithm that variationally minimizes energy with respect to both circuit (θ) and orbital (κ) parameters [43]. | Improving the description of strongly correlated molecules within an active space. |
| tUCCSD Ansatz | A Trotterized approximation of the unitary coupled-cluster Ansatz, implementable on quantum hardware [43]. | Serving as a strong reference wavefunction for ground and excited state calculations. |
| Quantum Linear Response (qLR) | A framework for computing molecular excitation energies and spectroscopic properties from a ground state [42] [43]. | Calculating the absorption spectrum of a molecule like LiH. |
| Pauli Saving | A technique to reduce the number of measurements by intelligently grouping Hamiltonian terms and allocating shots [42] [43]. | Lowering the measurement cost and noise impact in the evaluation of the qLR matrices. |
| Data Augmentation-empowered Error Mitigation (DAEM) | A neural network model that mitigates quantum errors without prior noise knowledge or clean training data [45]. | Correcting errors in a complex quantum dynamics simulation where the noise model is unknown. |
| Informationally Complete (IC) Measurements | A measurement strategy that allows estimation of multiple observables from the same data set [33]. | Reducing circuit overhead in algorithms like ADAPT-VQE and qEOM. |


Troubleshooting Guides

Troubleshooting Driven Similarity Renormalization Group (DSRG) Effective Hamiltonian Generation

Problem: High correlation energy error in active space selection.

- Symptoms: Inaccurate ground state energy, significant error compared to full configuration interaction (FCI).

- Possible Causes: The active orbital selection may not be capturing the orbitals that contribute most to the correlation energy. In a many-body expansion, the correlation-energy increments typically decrease sharply with expansion order, so the most significant contributions usually come from the first few orders [46].

- Solutions:

- Implement a correlation-energy-based orbital selection algorithm that uses the single and double orbital correlation energies ($\Delta\varepsilon_i$ and $\Delta\varepsilon_{ij}$) from many-body expanded FCI as the selection criterion [46].

- Automatically include HOMO and LUMO orbitals as they are directly related to molecular reactivity [46].

- Use a relative contribution threshold of 30% for orbital selection, which has been shown to be appropriate across various simulations [46].
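One way the many-body-expansion increments and a 30% relative threshold might be combined is sketched below. The increments follow the usual MBE definition $\Delta\varepsilon_{ij} = \varepsilon_{ij} - \Delta\varepsilon_i - \Delta\varepsilon_j$. The rule that an orbital is kept when its own increment, or that of a pair containing it, reaches 30% of the largest increment is a hypothetical reading of the threshold in [46], not the published algorithm; the correlation energies are toy values.

```python
import numpy as np

def mbe_increments(eps_single, eps_pair):
    """Pair increments d_eps_ij = eps_ij - d_eps_i - d_eps_j of a many-body expansion.

    eps_single[i]: correlation energy attributed to orbital i alone (d_eps_i).
    eps_pair[(i, j)]: correlation energy of the orbital pair (i, j).
    """
    return {(i, j): e - eps_single[i] - eps_single[j] for (i, j), e in eps_pair.items()}

def select_orbitals(eps_single, eps_pair, homo, lumo, threshold=0.30):
    """Hypothetical rule: keep orbitals whose single or pair increment is >= threshold
    times the largest increment of the same order; HOMO and LUMO are always kept."""
    d_single = np.abs(np.asarray(eps_single, dtype=float))
    d_pair = {k: abs(v) for k, v in mbe_increments(eps_single, eps_pair).items()}
    selected = {homo, lumo}
    selected |= {i for i, d in enumerate(d_single) if d >= threshold * d_single.max()}
    if d_pair:
        max_pair = max(d_pair.values())
        for (i, j), d in d_pair.items():
            if d >= threshold * max_pair:
                selected |= {i, j}
    return sorted(selected)

eps_single = [-0.001, -0.010, -0.030, -0.025, -0.004, -0.0005]  # toy values (hartree)
eps_pair = {(0, 4): -0.015, (1, 2): -0.041, (4, 5): -0.0046}    # toy values (hartree)
selected = select_orbitals(eps_single, eps_pair, homo=2, lumo=3)
print(selected)
```

Here orbitals 0 and 4 are weak individually but enter through their strong pair increment, which is the situation the double-orbital criterion is meant to catch.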

Problem: Inefficient quantum resource utilization with DSRG.

- Symptoms: Quantum circuit depth too high for current NISQ devices, excessive gate counts.

- Possible Causes: The effective Hamiltonian may not be tailored to the constraints of the target hardware, or DSRG may be applied on its own, without the complementary hardware-adapted circuit design and automatic orbital selection that make it effective.

- Solutions:

- Combine DSRG with hardware adaptable ansatz (HAA) circuits to generate noise-resilient quantum circuits [46].

- Ensure the DSRG method is used to construct an effective Hamiltonian that reduces the full system Hamiltonian into a lower-dimensional one retaining essential physics [46].

- Integrate with automatic orbital selection based on orbital correlation energy to minimize active space size while maintaining accuracy [46].

Troubleshooting Transcorrelated Method Implementation

Problem: Slow convergence and optimization difficulties.

- Symptoms: Variational algorithm fails to converge to accurate ground state energy, requires excessive optimization iterations.