Taming the Shot Cost: Why ADAPT-VQE is Measurement-Hungry and How to Optimize It


Abstract

The Adaptive Derivative-Assembled Pseudo-Trotter Variational Quantum Eigensolver (ADAPT-VQE) is a leading algorithm for molecular simulation on near-term quantum computers, prized for its compact circuits and accuracy. However, its high demand for quantum measurements, or 'shots,' poses a significant bottleneck for practical application. This article explores the foundational reasons behind ADAPT-VQE's shot-intensive nature, stemming from its iterative ansatz construction and parameter optimization loops. We then detail current methodological advances and optimization strategies—from shot reuse and allocation to machine learning and greedy algorithms—that are dramatically reducing this overhead. Finally, we validate these approaches through hardware demonstrations and comparative benchmarks, providing a roadmap for researchers in drug development and biomedical research to harness ADAPT-VQE for practical quantum chemistry problems.

The Core Challenge: Deconstructing ADAPT-VQE's Inherent Shot Hunger

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) represents a significant advancement in quantum computational chemistry, designed specifically for the constraints of Noisy Intermediate-Scale Quantum (NISQ) devices. Unlike fixed-structure ansätze such as Unitary Coupled Cluster (UCCSD) or hardware-efficient approaches, ADAPT-VQE dynamically constructs quantum circuits tailored to specific molecular systems [1] [2]. This adaptive construction offers notable advantages in reducing circuit depth and mitigating trainability issues like barren plateaus, but introduces a substantial quantum measurement burden that remains a critical bottleneck for practical implementation [1] [3].

At its core, ADAPT-VQE addresses fundamental limitations of pre-defined ansätze. Fixed ansätze often contain redundant operators that increase circuit depth without meaningfully contributing to accuracy, while hardware-efficient ansätze face challenges with optimization and limited accuracy [2]. The adaptive approach builds circuits iteratively, selecting only the most relevant operators at each step. However, this very strength necessitates extensive quantum measurements for both operator selection and parameter optimization, creating what has become known as the "shot overhead" problem in ADAPT-VQE implementations [1] [4]. This overhead stems from the requirement to evaluate numerous observables at each iteration, with the number of measurements scaling polynomially with system size [2].

Understanding this measurement overhead requires examining the fundamental ADAPT-VQE loop, which consists of two computationally expensive stages: operator selection based on gradient calculations and global parameter optimization of the expanding ansatz [2] [5]. Each stage demands extensive quantum measurements, making the overall process shot-intensive compared to non-adaptive VQE approaches. As research continues to bridge the gap between quantum resource requirements and current hardware capabilities, addressing this measurement overhead has become a central focus in the development of practical quantum computational chemistry methods [3].

The ADAPT-VQE Algorithmic Framework

Core Iterative Loop

The ADAPT-VQE algorithm constructs quantum circuits through an iterative, greedy process that systematically expands an initial reference state. The procedure begins with a simple quantum state, typically the Hartree-Fock reference state, and progressively appends parameterized unitary operators selected from a predefined pool [2]. The algorithm operates through two fundamental steps that repeat until convergence:

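Step 1: Operator Selection - At iteration $m$, with a current parameterized ansatz wavefunction $|\Psi^{(m-1)}\rangle$, the algorithm identifies the most promising unitary operator $\mathcal{U}^*$ from a pool $\mathbb{U}$ of possible operators. The selection criterion maximizes the absolute gradient of the energy expectation value with respect to the new parameter, where $\hat{A}$ denotes the Hamiltonian whose energy is being minimized [2]:

$$ \mathcal{U}^* = \underset{\mathcal{U} \in \mathbb{U}}{\operatorname{argmax}} \left| \frac{d}{d\theta} \langle \Psi^{(m-1)} | \mathcal{U}(\theta)^\dagger \hat{A} \, \mathcal{U}(\theta) | \Psi^{(m-1)} \rangle \Big|_{\theta=0} \right| $$

This results in a new ansatz wavefunction $|\Psi^{(m)}\rangle = \mathcal{U}^*(\theta_m)|\Psi^{(m-1)}\rangle$, where $\theta_m$ represents a newly introduced free parameter.

Step 2: Global Optimization - The algorithm then performs a multi-dimensional optimization over all parameters $[\theta_1, \theta_2, \ldots, \theta_m]$ to minimize the energy expectation value [2]:

$$ [\theta_1^{(m)}, \ldots, \theta_m^{(m)}] = \underset{\theta_1, \ldots, \theta_m}{\operatorname{argmin}} \langle \Psi^{(m)}(\theta_m, \theta_{m-1}, \ldots, \theta_1) | \hat{A} | \Psi^{(m)}(\theta_m, \theta_{m-1}, \ldots, \theta_1) \rangle $$

After optimization, the current ansatz becomes $|\Psi^{(m)}\rangle = |\Psi^{(m)}(\theta_m^{(m)}, \theta_{m-1}^{(m)}, \ldots, \theta_1^{(m)})\rangle$, completing one iteration of the ADAPT-VQE loop.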
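The two steps can be sketched as a small noiseless statevector simulation. This is a minimal illustration under stated assumptions, not the implementation used in the cited work: the two-qubit Hamiltonian `H`, the reference state `REF`, and the four-element Pauli pool are hypothetical, each pool unitary is taken to be $e^{i\theta P}$ for a Pauli string $P$, and the global optimization uses plain finite-difference gradient descent in place of a production optimizer.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

# Hypothetical 2-qubit Hamiltonian and reference state |11> (illustration only).
H = 0.4 * np.kron(Z, I2) + 0.2 * np.kron(I2, Z) + 0.3 * np.kron(X, X)
REF = np.zeros(4, dtype=complex)
REF[3] = 1.0

# Hypothetical pool of Pauli strings P; each generator is tau = iP (anti-Hermitian).
POOL = {"YX": np.kron(Y, X), "XY": np.kron(X, Y),
        "YI": np.kron(Y, I2), "IY": np.kron(I2, Y)}

def prepare(thetas, ops):
    """Apply exp(i*theta_k*P_k) in order, using exp(i t P) = cos(t) I + i sin(t) P."""
    psi = REF.copy()
    for t, P in zip(thetas, ops):
        psi = np.cos(t) * psi + 1j * np.sin(t) * (P @ psi)
    return psi

def energy(thetas, ops):
    psi = prepare(thetas, ops)
    return float(np.real(np.vdot(psi, H @ psi)))

def pool_gradients(psi):
    """Step 1: energy derivative at theta = 0 for each candidate, <psi|[H, iP]|psi>."""
    grads = {}
    for name, P in POOL.items():
        tau = 1j * P
        grads[name] = float(np.real(np.vdot(psi, (H @ tau - tau @ H) @ psi)))
    return grads

def optimize(thetas, ops, lr=0.2, steps=300, eps=1e-6):
    """Step 2: re-optimize all parameters (finite-difference gradient descent)."""
    thetas = np.array(thetas, dtype=float)
    for _ in range(steps):
        grad = np.zeros_like(thetas)
        for k in range(len(thetas)):
            shift = np.zeros_like(thetas)
            shift[k] = eps
            grad[k] = (energy(thetas + shift, ops) - energy(thetas - shift, ops)) / (2 * eps)
        thetas -= lr * grad
    return thetas

def adapt_vqe(max_iters=4, tol=1e-4):
    thetas, ops, names = [], [], []
    for _ in range(max_iters):
        grads = pool_gradients(prepare(thetas, ops))
        best = max(grads, key=lambda n: abs(grads[n]))
        if abs(grads[best]) < tol:      # no candidate lowers the energy further
            break
        names.append(best)
        ops.append(POOL[best])
        thetas = list(optimize(list(thetas) + [0.0], ops))
    return energy(thetas, ops), names

final_energy, chosen = adapt_vqe()
exact = float(np.linalg.eigvalsh(H)[0])
print(chosen, final_energy, exact)
```

On hardware, every call to `energy` and every entry of `pool_gradients` would be a shot-based estimate rather than an exact inner product, which is where the measurement cost discussed in the following sections enters.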

Operator Pool Options

The choice of operator pool significantly influences ADAPT-VQE performance. Common pool types include:

- Fermionic Excitation Pools: Originally used in ADAPT-VQE, these consist of generalized single and double (GSD) excitations [3].

- Qubit Excitation Pools: Directly parameterize qubit operators rather than fermionic excitations [3].

- Coupled Exchange Operator (CEO) Pools: A novel approach that demonstrates substantial improvements in measurement efficiency and circuit compactness [3].

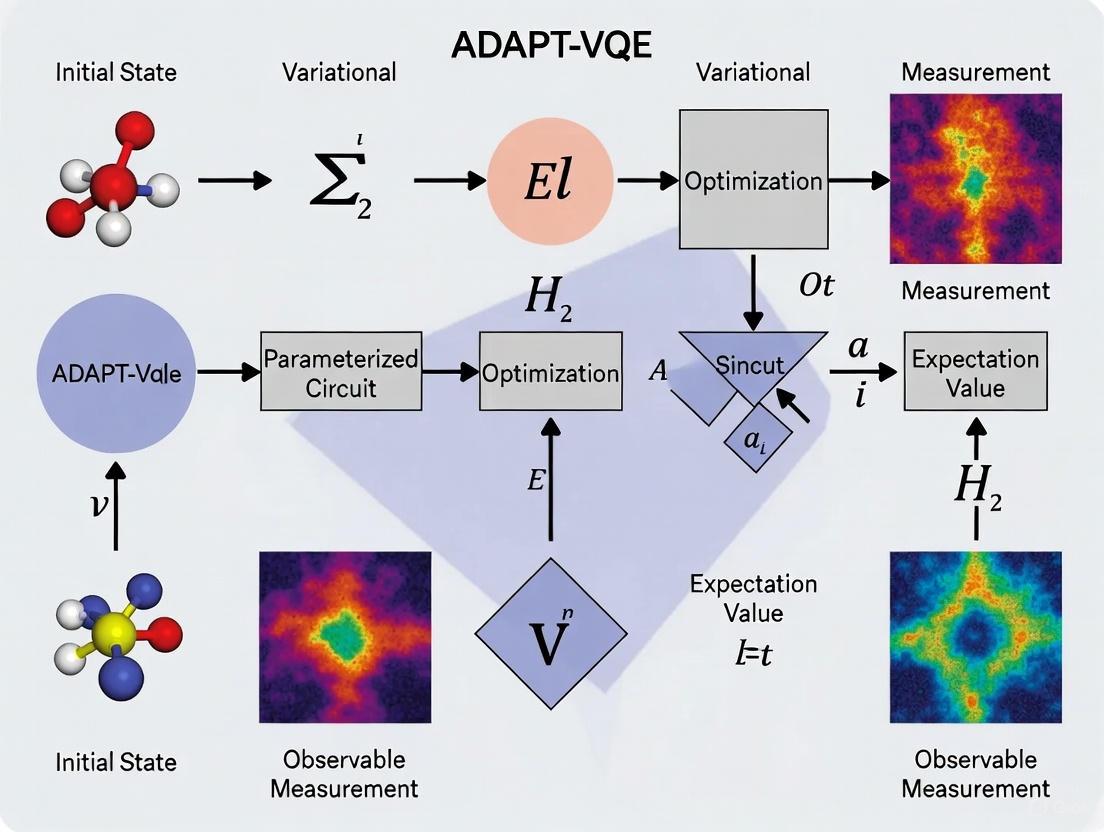

The algorithmic flow can be visualized as follows:

Quantifying the Measurement Overhead

The measurement overhead in ADAPT-VQE arises from multiple sources within the iterative loop. The operator selection step requires computing gradients for every operator in the pool, which typically involves tens of thousands of extremely noisy measurements on quantum devices [2]. Simultaneously, the global optimization procedure must minimize a high-dimensional, noisy cost function, further contributing to the shot requirements [2] [5].

Table 1: Primary Sources of Measurement Overhead in ADAPT-VQE

| Overhead Source | Description | Impact on Measurements |
|---|---|---|
| Operator Selection | Calculating gradients for all pool operators to identify the best candidate | Requires extensive measurements for each operator in the pool, scaling with pool size [2] |
| Parameter Optimization | Optimizing all parameters in the growing ansatz circuit | Demands repeated energy evaluations during classical optimization loop [2] |
| Gradient Evaluation | Measuring commutator $[H, A_k]$ for each pool operator $A_k$ | Necessitates additional quantum measurements beyond energy evaluation [1] |
| Ansatz Growth | Increasing circuit complexity with each iteration | Longer circuits may require more shots to maintain precision due to noise [3] |

The substantial measurement requirements are particularly challenging given the limitations of current quantum hardware. As noted in recent research, "the operator selection procedure involves computing gradients of the expectation value of the Hamiltonian for every choice of operator in the operator pool, which typically requires tens of thousands of extremely noisy measurements on the quantum device" [2]. This overhead has limited full implementations of ADAPT-VQE-type algorithms on current-generation quantum processing units (QPUs), with only partial attempts successfully demonstrated to date [2].

Table 2: Comparative Resource Requirements for Molecular Simulations

| Molecule | Qubits | Algorithm | Measurement Cost | CNOT Count |
|---|---|---|---|---|
| LiH | 12 | Original ADAPT-VQE | Baseline | Baseline |
| LiH | 12 | CEO-ADAPT-VQE* | Reduced by up to 99.6% | Reduced by up to 88% [3] |
| BeH₂ | 14 | Original ADAPT-VQE | Baseline | Baseline |
| BeH₂ | 14 | CEO-ADAPT-VQE* | Reduced by up to 99.6% | Reduced by up to 88% [3] |
| H₂ | 4 | ADAPT-VQE with Pauli Reuse & Shot Allocation | 32.29% of original | Not Specified [1] |

The tables above quantify the significant measurement overhead challenges in ADAPT-VQE while also demonstrating the substantial improvements possible through algorithmic enhancements. The CEO-ADAPT-VQE* approach shows particularly dramatic reductions in both measurement costs and gate counts compared to the original ADAPT-VQE formulation [3].

Methodologies for Reducing Measurement Overhead

Pauli Measurement Reuse and Commutativity Grouping

One promising approach to reducing measurement overhead involves reusing Pauli measurement outcomes obtained during VQE parameter optimization in subsequent operator selection steps [1]. This strategy recognizes that the Pauli strings measured for energy estimation often overlap with those required for gradient calculations in the operator selection phase. By storing and reusing these measurements across ADAPT-VQE iterations, the method significantly reduces the number of unique quantum measurements required [1] [4].

This reuse protocol is particularly effective when combined with commutativity-based grouping of Hamiltonian terms and gradient observables. The technique organizes Pauli measurements into mutually commuting sets (often using qubit-wise commutativity), allowing simultaneous measurement of all operators within each group [1]. Research demonstrates that this combined approach of "reusing Pauli measurement outcomes obtained during VQE parameter optimization in the subsequent operator selection step" can reduce average shot usage to approximately 32.29% of the original requirement when both measurement grouping and reuse are implemented [1].

The implementation workflow for this method involves:

- Initial Pauli Measurement: During VQE optimization, measure and store all Pauli string outcomes

- Commutativity Analysis: Identify overlapping Pauli strings between Hamiltonian measurements and gradient observables

- Measurement Grouping: Organize required measurements into commuting sets using qubit-wise commutativity

- Data Reuse: In subsequent operator selection, reuse relevant previously measured Pauli strings rather than remeasuring
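The grouping and overlap steps above can be sketched in a few lines. This is an illustrative greedy first-fit grouping, not the procedure from [1]; the Pauli strings in `hamiltonian_terms` and `gradient_terms` are hypothetical examples.

```python
def qubitwise_commute(p, q):
    """Two Pauli strings commute qubit-wise if, at every position,
    the letters are equal or at least one of them is the identity 'I'."""
    return all(a == b or a == "I" or b == "I" for a, b in zip(p, q))

def group_qwc(paulis):
    """Greedy first-fit: put each string in the first group it is compatible with."""
    groups = []
    for p in paulis:
        for g in groups:
            if all(qubitwise_commute(p, q) for q in g):
                g.append(p)
                break
        else:
            groups.append([p])
    return groups

# Hypothetical Pauli strings for the energy and for the gradient observables.
hamiltonian_terms = ["ZI", "IZ", "ZZ", "XX"]
gradient_terms = ["XX", "YY", "ZZ", "XI"]

# Strings needed by both observables only have to be measured once.
reused = sorted(set(hamiltonian_terms) & set(gradient_terms))

# Deduplicate while keeping order, then group for simultaneous measurement.
needed = list(dict.fromkeys(hamiltonian_terms + gradient_terms))
groups = group_qwc(needed)

print(groups)   # each inner list shares one measurement basis
print(reused)
```

Greedy first-fit is order-dependent and not guaranteed to find the minimum number of groups; graph-coloring heuristics are a common alternative.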

This approach differs significantly from alternative methods like adaptive informationally complete (IC) generalized measurements, as it retains measurements in the computational basis and introduces minimal classical overhead since Pauli string analysis can be performed once during initial setup [1].

Variance-Based Shot Allocation

Variance-based shot allocation represents another powerful technique for reducing measurement overhead in ADAPT-VQE. This method strategically distributes measurement shots among different observables based on their estimated variances, prioritizing resources toward terms with higher uncertainty [1]. The approach applies to both Hamiltonian measurements and gradient measurements, making it specifically tailored for ADAPT-VQE's unique requirements.

The theoretical foundation for this method comes from the optimal shot allocation framework, which minimizes the total variance of the estimated energy or gradient for a fixed total shot budget [1]. The implementation typically follows these steps:

- Variance Estimation: Estimate variances for individual Pauli measurements, either theoretically or through preliminary sampling

- Shot Budgeting: Allocate shots to each measurable term in proportion to the magnitude of its coefficient times the square root of its variance (its standard deviation)

- Iterative Refinement: Update variance estimates and shot allocation as the algorithm progresses
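A minimal sketch of the budgeting step, assuming each term is measured independently and the variance estimates are already available. The function names, weights, and variances below are hypothetical and chosen only for illustration.

```python
import numpy as np

def allocate_shots(weights, variances, total_shots):
    """Split total_shots across terms in proportion to |w_i| * sigma_i."""
    score = np.abs(weights) * np.sqrt(variances)
    if score.sum() == 0:
        return np.full(len(weights), total_shots // len(weights))
    raw = total_shots * score / score.sum()
    shots = np.floor(raw).astype(int)
    # Hand the leftover shots to the terms with the largest fractional parts.
    remainder = int(total_shots - shots.sum())
    order = np.argsort(-(raw - shots))
    shots[order[:remainder]] += 1
    return shots

def estimator_variance(weights, variances, shots):
    """Variance of sum_i w_i <P_i> when term i receives shots[i] independent shots.
    Assumes every entry of shots is at least 1."""
    return float(np.sum(np.asarray(weights) ** 2 * np.asarray(variances) / shots))

# Hypothetical coefficients and single-shot variances for three Pauli terms.
weights = np.array([0.5, -0.3, 0.1])
variances = np.array([1.0, 0.25, 0.04])

shots = allocate_shots(weights, variances, 1000)
uniform = np.array([334, 333, 333])

var_weighted = estimator_variance(weights, variances, shots)
var_uniform = estimator_variance(weights, variances, uniform)
print(shots, var_weighted, var_uniform)
```

For the same budget, the weighted allocation gives a lower estimator variance than the uniform split. Equivalently, a target precision is reached with fewer shots.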

Numerical simulations demonstrate the effectiveness of this approach, with results showing "shot reductions of 6.71% (VMSA) and 43.21% (VPSR) for H2, and 5.77% (VMSA) and 51.23% (VPSR) for LiH, relative to uniform shot distribution" [1]. The significant variation in improvement percentages highlights the method's dependence on molecular system characteristics and the specific shot allocation strategy employed.

Greedy Gradient-Free Approaches

The Greedy Gradient-free Adaptive VQE (GGA-VQE) represents an alternative approach that addresses measurement overhead by eliminating gradient calculations entirely [2] [5]. This method replaces the conventional gradient-based operator selection with an energy-sorting approach that identifies both the optimal operator and its associated parameter value simultaneously [5].

The GGA-VQE algorithm operates by:

- Landscape Characterization: For each operator in the pool, explicitly construct the one-dimensional energy landscape as a function of the new parameter

- Analytical Minimization: Exploit the known trigonometric structure of parameterized expectation values to identify the optimal parameter value

- Operator Selection: Choose the operator that provides the largest energy decrease when applied with its optimal parameter

This approach provides "improved resilience to statistical sampling noise" while maintaining accuracy comparable to standard ADAPT-VQE [2]. By avoiding gradient calculations and leveraging analytical forms, GGA-VQE reduces the quantum measurement burden while simultaneously simplifying the classical optimization component of the algorithm.

Enhanced Initial States and Active Space Selection

Physically motivated improvements to ADAPT-VQE focus on enhancing initial state preparation and guiding ansatz growth to produce more compact wavefunctions with faster convergence [6]. These strategies include:

Improved Initial States: Using natural orbitals from unrestricted Hartree-Fock (UHF) calculations to enhance the starting point beyond the standard Hartree-Fock reference. These orbitals capture some correlation effects at minimal computational cost and can improve overlap with the true ground state [6].

Projection Protocols: Restricting the orbital space to an active subset based on orbital energies near the Fermi level, following insights from perturbation theory. This approach prioritizes excitations with small energy denominators, which typically contribute most significantly to correlation energy [6].

These methods reduce measurement overhead indirectly by generating more compact ansätze that require fewer iterations to converge to chemical accuracy, thereby reducing the total number of measurements throughout the ADAPT-VQE process [6].

Experimental Protocols and Validation

Experimental Setup for Shot Reduction Studies

Research evaluating measurement reduction strategies in ADAPT-VQE typically employs standardized computational chemistry workflows combined with quantum simulation environments. The experimental protocol generally follows these steps:

Molecular System Preparation:

- Select molecular systems (e.g., H₂, LiH, BeH₂, H₂O)

- Generate molecular geometries and electronic structure data

- Transform molecular Hamiltonians to qubit representations using Jordan-Wigner or Bravyi-Kitaev transformations [1]

Quantum Simulation:

Performance Metrics:

Researcher's Toolkit: Essential Components

Table 3: Essential Research Components for ADAPT-VQE Measurement Studies

| Component | Function | Examples/Alternatives |
|---|---|---|
| Quantum Simulator | Emulates quantum computer behavior with configurable shot counts | Qiskit, Cirq, PennyLane [1] |
| Electronic Structure Package | Computes molecular integrals and reference energies | PySCF, OpenFermion, Psi4 [6] |
| Operator Pools | Defines set of available operators for ansatz construction | Fermionic (GSD), Qubit, CEO pools [3] |
| Measurement Grouping Algorithm | Identifies commuting Pauli terms for simultaneous measurement | Qubit-wise commutativity, graph coloring [1] |
| Shot Allocation Strategy | Optimizes distribution of measurements across terms | Variance-based allocation, uniform allocation [1] |
| Classical Optimizer | Adjusts circuit parameters to minimize energy | BFGS, COBYLA, SPSA [2] |


The measurement overhead in ADAPT-VQE presents a significant challenge for practical implementation on current quantum hardware, but numerous strategies demonstrate promising pathways toward mitigation. The integrated approaches of Pauli measurement reuse, variance-based shot allocation, gradient-free optimization, and physically motivated ansatz construction collectively address different aspects of the shot overhead problem [1] [2] [6].

Future research directions should focus on several key areas. First, evaluating these measurement reduction strategies on actual quantum hardware with realistic noise profiles remains essential for assessing practical utility [1]. Second, exploring synergies between different approaches—such as combining CEO pools with measurement reuse protocols—may yield multiplicative benefits [3]. Finally, developing theoretical foundations for measurement complexity in adaptive algorithms could guide the design of more efficient future implementations.

As quantum hardware continues to evolve, reducing measurement overhead through algorithmic innovations will remain crucial for demonstrating practical quantum advantage in chemical simulation. The progress documented in recent research suggests that optimized ADAPT-VQE variants are steadily bridging the gap between theoretical potential and practical implementation on NISQ-era quantum devices [3].

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) represents a promising framework for quantum chemistry simulations on noisy intermediate-scale quantum (NISQ) devices. However, its practical implementation is severely constrained by exorbitant quantum measurement requirements. This technical analysis examines the fundamental architectural components of ADAPT-VQE that contribute to its extensive shot costs, with particular focus on the operator selection mechanism and gradient measurement protocols. We synthesize recent methodological advances that substantially reduce these resource requirements through measurement reuse strategies, variance-based shot allocation, and modified operator selection criteria. Quantitative evaluations demonstrate measurement reductions exceeding 99% in some implementations, potentially bridging the gap between theoretical algorithm design and practical execution on current quantum hardware.

ADAPT-VQE has emerged as a leading variational algorithm for quantum chemistry, dynamically constructing problem-specific ansätze through an iterative process that typically employs energy gradients for operator selection [5]. Unlike fixed-ansatz approaches, ADAPT-VQE builds the quantum circuit layer by layer, selecting each subsequent operator from a predefined pool based on its potential to minimize energy [6]. While this adaptive construction yields more compact and accurate circuits than static alternatives, it introduces substantial measurement overhead that constitutes a critical bottleneck for practical implementation [1].

The algorithm's characteristically high shot requirements originate from two interdependent processes: the operator selection step that identifies the most promising operator to add to the growing ansatz, and the subsequent parameter optimization that determines optimal rotation angles for all parameters in the circuit [5]. With current quantum processing units (QPUs) limited to finite shot rates and susceptible to statistical noise, these measurement demands frequently render faithful algorithm execution intractable [5]. This analysis examines the architectural sources of these costs and documents emerging strategies to mitigate them without sacrificing chemical accuracy.

The Operator Selection Mechanism: Architectural Foundation of Shot Costs

Standard Gradient-Based Selection

The conventional ADAPT-VQE algorithm employs an iterative growth mechanism where the ansatz is constructed sequentially according to the following structure:

$$ |\psi^{(N)}\rangle = \prod_{k=1}^{N} e^{\theta_k \hat{\tau}_k} |\psi_{\text{ref}}\rangle $$

where $|\psi_{\text{ref}}\rangle$ is a reference state (typically Hartree-Fock), and each $\hat{\tau}_k$ is an anti-Hermitian operator selected from a predefined pool [6].

At each iteration $N$, the algorithm identifies the next operator to append by evaluating the gradient of the energy with respect to each potential operator parameter:

$$ g_i = \frac{\partial E^{(N)}}{\partial \theta_i} = \langle \psi^{(N)} | [\hat{H}, \hat{\tau}_i] | \psi^{(N)} \rangle $$

where $\hat{H}$ is the molecular Hamiltonian [5] [6]. The operator yielding the largest gradient magnitude is selected for inclusion in the ansatz.

This gradient evaluation requires measuring the expectation values of commutators $[\hat{H}, \hat{\tau}_i]$ for every operator $\hat{\tau}_i$ in the pool, necessitating extensive quantum measurements [5]. Following operator selection, a classical optimization routine adjusts all parameters $\{\theta_i\}$ to minimize energy, requiring additional measurement cycles for cost function evaluation [5].
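As a sanity check on the commutator expression, the sketch below compares $\langle \psi | [\hat{H}, \hat{\tau}] | \psi \rangle$ with a finite-difference derivative of the energy after appending $e^{\theta \hat{\tau}}$ to the state, evaluated at $\theta = 0$. The two-qubit Hamiltonian, the generator $\hat{\tau} = iP$ built from the Pauli string $P = Y \otimes X$, and the random state are hypothetical choices made for illustration.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

# Hypothetical Hamiltonian and anti-Hermitian generator tau = iP.
H = 0.4 * np.kron(Z, I2) + 0.2 * np.kron(I2, Z) + 0.3 * np.kron(X, X)
tau = 1j * np.kron(Y, X)

# Random normalized state standing in for the current ansatz state.
rng = np.random.default_rng(0)
psi = rng.normal(size=4) + 1j * rng.normal(size=4)
psi /= np.linalg.norm(psi)

def energy(theta):
    """Energy after appending exp(theta * tau); since tau @ tau = -I,
    exp(theta * tau) = cos(theta) I + sin(theta) tau."""
    phi = np.cos(theta) * psi + np.sin(theta) * (tau @ psi)
    return float(np.real(np.vdot(phi, H @ phi)))

# Gradient from the commutator expectation value.
commutator_grad = float(np.real(np.vdot(psi, (H @ tau - tau @ H) @ psi)))

# Gradient from a central finite difference at theta = 0.
eps = 1e-5
finite_diff_grad = (energy(eps) - energy(-eps)) / (2 * eps)

print(commutator_grad, finite_diff_grad)
```

The two numbers agree to within the finite-difference error, which is why a single commutator expectation value per pool operator suffices for ranking candidates.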

Measurement Costs in Standard Implementation

The shot costs associated with standard operator selection scale with several algorithm characteristics:

- Pool Size: The number of operators in the selection pool directly determines the number of commutator measurements required [6].

- Circuit Depth: As the ansatz grows, evaluating $|\psi^{(N)}\rangle$ becomes increasingly costly due to deeper circuits and noise accumulation [5].

- Hamiltonian Complexity: Molecular systems with complex electronic structure yield Hamiltonians comprising many Pauli terms, each requiring separate measurement [1].

Table 1: Components of Shot Costs in Standard ADAPT-VQE Implementation

| Cost Component | Description | Impact on Shot Requirements |
|---|---|---|
| Gradient Measurements | Evaluating $\langle [\hat{H}, \hat{\tau}_i] \rangle$ for all pool operators | Scales linearly with pool size; dominant cost in early iterations |
| Parameter Optimization | Classical optimization of all ansatz parameters | Requires repeated energy evaluations; grows with ansatz depth |
| Hamiltonian Measurement | Evaluating $\langle \hat{H} \rangle$ for energy calculation | Scales with number of Pauli terms in Hamiltonian |
| Statistical Precision | Achieving sufficient precision for reliable operator ranking | Requires multiple shots per measurement; exacerbated by noise |

Quantitative Analysis of Shot Reduction Strategies

Recent research has produced significant advances in reducing the measurement overhead of ADAPT-VQE. The table below synthesizes quantitative results from multiple studies demonstrating the efficacy of various optimization strategies.

Table 2: Shot Reduction Performance of Optimized ADAPT-VQE Protocols

| Method | Key Innovation | Chemical Systems Tested | Shot Reduction | Implementation Details |
|---|---|---|---|---|
| Reused Pauli Measurements [1] | Recycling measurement outcomes from VQE optimization to gradient evaluation | H₂ (4q) to BeH₂ (14q), N₂H₄ (16q) | 32.29% of baseline (with grouping + reuse) | Combines qubit-wise commutativity grouping with measurement reuse |
| Variance-Based Shot Allocation [1] | Optimal shot distribution based on term variances | H₂, LiH | 6.71% (VMSA) to 43.21% (VPSR) for H₂; 5.77% (VMSA) to 51.23% (VPSR) for LiH | Applied to both Hamiltonian and gradient measurements |
| CEO-ADAPT-VQE* [3] | Novel coupled exchange operator pool with improved subroutines | LiH, H₆, BeH₂ (12-14 qubits) | 99.6% reduction in measurement costs | Combined operator pool optimization with measurement strategies |
| GGA-VQE [5] | Gradient-free optimization with analytical landscape functions | Molecular ground states, 25-body Ising model | Reduced parameter optimization measurements | Replaces gradient measurements with direct operator selection |

The performance gains demonstrated by these optimized protocols substantially narrow the gap between theoretical algorithm design and practical implementation on current hardware. The CEO-ADAPT-VQE* approach demonstrates particularly dramatic improvement, reducing measurement costs to as little as 0.4% of the original requirements while maintaining chemical accuracy [3].

Experimental Protocols for Shot-Efficient ADAPT-VQE

Measurement Reuse and Commutativity Grouping

The integration of measurement reuse with commutativity grouping represents one of the most effective strategies for reducing shot requirements in ADAPT-VQE [1]. The experimental protocol proceeds as follows:

Initial Setup:

- Prepare the Hamiltonian $\hat{H}$ in Pauli representation and identify all commutators $[\hat{H}, \hat{\tau}_i]$ for operators $\hat{\tau}_i$ in the pool.

- Decompose each commutator into measurable Pauli terms.

Qubit-Wise Commutativity (QWC) Grouping:

- Group Pauli terms from both Hamiltonian and commutators into mutually commuting sets.

- This allows simultaneous measurement of all terms within a group in a single basis rotation.

Measurement Reuse Protocol:

- During VQE parameter optimization, collect and store all Pauli measurement outcomes.

- For subsequent gradient evaluation in operator selection, reuse compatible measurements rather than performing new ones.

- This leverages the significant overlap between Pauli strings required for energy evaluation and those needed for gradient calculations [1].

This protocol capitalizes on the structural properties of molecular Hamiltonians and their commutators with excitation operators, effectively amortizing measurement costs across algorithm steps.
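The reuse protocol needs little more than a lookup table keyed by Pauli string. The sketch below is a hypothetical data structure (the class name `PauliCache`, its methods, and all numerical values are assumptions, not taken from [1]). It stores estimates gathered at the final optimization point and reports which strings of a gradient observable still have to be measured. Cached values are only valid for the state at which they were recorded, so the cache is meant to be cleared whenever the ansatz parameters change.

```python
class PauliCache:
    """Stores the latest expectation estimate and shot count per Pauli string."""

    def __init__(self):
        self._store = {}

    def record(self, pauli, mean, shots):
        self._store[pauli] = (mean, shots)

    def clear(self):
        """Call when the circuit parameters change; old estimates no longer apply."""
        self._store.clear()

    def evaluate(self, observable):
        """observable maps Pauli string -> real coefficient.
        Returns the partial sum over cached strings and the strings still missing."""
        value, missing = 0.0, []
        for pauli, coeff in observable.items():
            if pauli in self._store:
                value += coeff * self._store[pauli][0]
            else:
                missing.append(pauli)
        return value, missing

# Hypothetical estimates recorded during the last energy evaluation of the VQE step.
cache = PauliCache()
for pauli, mean in {"ZI": 0.9, "IZ": -0.8, "XX": 0.1, "ZZ": -0.7}.items():
    cache.record(pauli, mean, shots=1000)

# Hypothetical Pauli decomposition of one commutator [H, tau_i].
gradient_observable = {"XX": 0.6, "YY": -0.6, "ZZ": 0.2}

partial, missing = cache.evaluate(gradient_observable)
print(partial, missing)   # only the strings in `missing` need new shots
```

In this toy case two of the three strings are served from the cache, so only `YY` requires fresh measurements for the gradient estimate.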

Variance-Based Shot Allocation

Optimal shot allocation based on variance estimation provides another powerful approach to measurement reduction [1]. The implementation consists of:

Variance Estimation:

- Estimate variances $\sigma_i^2$ for each Pauli term $P_i$ in the Hamiltonian and gradient observables.

- Use preliminary measurements with uniform shot distribution to initialize variance estimates.

Shot Budget Optimization:

- Allocate shots across terms proportional to $|w_i|\sigma_i$, where $w_i$ is the coefficient weight of term $P_i$.

- This follows the theoretical optimum for minimizing total estimation error [1].

Iterative Refinement:

- Update variance estimates as measurements proceed.

- Dynamically adjust shot allocation based on refined variance estimates.

This approach prioritizes measurement resources toward high-weight, high-variance terms that contribute most significantly to overall estimation error.
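The proportionality to $|w_i|\sigma_i$ follows from a short constrained minimization. The derivation below is a sketch under the assumption that each term is measured independently; $N_i$ (shots given to term $P_i$) and $N$ (total budget) are symbols introduced here for illustration.

$$ \operatorname{Var}[\hat{E}] = \sum_i \frac{w_i^2 \sigma_i^2}{N_i}, \qquad \text{subject to} \quad \sum_i N_i = N $$

Introducing a Lagrange multiplier $\lambda$ and setting the derivative with respect to each $N_i$ to zero gives

$$ -\frac{w_i^2 \sigma_i^2}{N_i^2} + \lambda = 0 \quad \Rightarrow \quad N_i = \frac{|w_i| \sigma_i}{\sqrt{\lambda}} \quad \Rightarrow \quad N_i = N \, \frac{|w_i| \sigma_i}{\sum_j |w_j| \sigma_j} $$

and substituting back yields the minimum variance for the given budget:

$$ \operatorname{Var}_{\min}[\hat{E}] = \frac{1}{N} \Big( \sum_i |w_i| \sigma_i \Big)^2 $$

When terms are measured together in commuting groups, covariances between terms in the same group enter the variance and the allocation is done per group rather than per term.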

[Figure: Variance-Based Shot Allocation Workflow]

Gradient-Free Operator Selection

The Greedy Gradient-free Adaptive VQE (GGA-VQE) protocol circumvents traditional gradient measurements entirely [5]:

Analytical Landscape Construction:

- For each candidate operator $\hat{\tau}_i$ in the pool, construct the energy landscape $E(\theta_i) = \langle \psi | e^{-\theta_i \hat{\tau}_i} \hat{H} e^{\theta_i \hat{\tau}_i} | \psi \rangle$.

- For common operator pools, this landscape can be expressed as a simple trigonometric function: $E(\theta_i) = A\cos(2\theta_i) + B\sin(2\theta_i) + C$.

Parameter Determination:

- Determine coefficients $A$, $B$, and $C$ through a minimal number of energy evaluations (typically 2-3 specific $\theta_i$ values).

- This completely characterizes the energy landscape for each operator.

Simultaneous Operator and Angle Selection:

- Identify the operator $\hat{\tau}_i$ that produces the deepest energy minimum at its optimal $\theta_i$.

- Append $e^{\theta_i^{\text{opt}} \hat{\tau}_i}$ to the ansatz without subsequent parameter optimization.

This approach selects both the operator and its optimal rotation angle simultaneously, eliminating separate gradient measurements and reducing the parameter optimization burden [5].
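For the single-frequency landscape given above, three energy evaluations fix $A$, $B$, and $C$, and the minimum follows in closed form. The sketch below assumes sample angles $0$ and $\pm\pi/4$ (one convenient choice, not necessarily the one used in [5]) and uses two hypothetical noiseless landscapes in place of shot-based energy estimates.

```python
import math

def fit_landscape(e0, e_plus, e_minus):
    """Recover A, B, C of E(t) = A cos(2t) + B sin(2t) + C
    from E(0), E(+pi/4), and E(-pi/4)."""
    c = 0.5 * (e_plus + e_minus)
    b = 0.5 * (e_plus - e_minus)
    a = e0 - c
    return a, b, c

def minimize_landscape(a, b, c):
    """E(t) = R cos(2t - phi) + C with R = hypot(A, B), phi = atan2(B, A);
    the minimum C - R is reached at 2t - phi = pi."""
    theta = 0.5 * (math.atan2(b, a) + math.pi)
    if theta > math.pi / 2:          # wrap into (-pi/2, pi/2]; the period is pi
        theta -= math.pi
    return theta, c - math.hypot(a, b)

def select_operator(energy_fns):
    """energy_fns maps operator name -> callable returning E(theta).
    Returns (name, optimal theta, minimum energy) of the best candidate."""
    best = None
    for name, f in energy_fns.items():
        a, b, c = fit_landscape(f(0.0), f(math.pi / 4), f(-math.pi / 4))
        theta, e_min = minimize_landscape(a, b, c)
        if best is None or e_min < best[2]:
            best = (name, theta, e_min)
    return best

# Hypothetical landscapes: op1 can lower the energy, op2 is flat.
candidates = {
    "op1": lambda t: -0.6 * math.cos(2 * t) + 0.3 * math.sin(2 * t),
    "op2": lambda t: -0.6,
}

name, theta, e_min = select_operator(candidates)
print(name, theta, e_min)
```

With noisy energies the fitted coefficients inherit sampling error, so in practice each of the three evaluations would need enough shots for the ranking between candidates to be reliable.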

The Research Toolkit: Essential Components for Shot-Efficient Implementation

Table 3: Research Reagent Solutions for ADAPT-VQE Implementation

| Component | Function | Implementation Considerations |
|---|---|---|
| Operator Pools | Set of candidate operators for ansatz construction | CEO pool [3] reduces circuit depth and measurements simultaneously |
| Commutativity Grouping | Enables simultaneous measurement of multiple observables | Qubit-wise commutativity provides practical balance of efficiency and implementation complexity [1] |
| Measurement Reuse Framework | Classical storage and retrieval of quantum measurements | Requires efficient data structure for Pauli string lookup and compatibility assessment [1] |
| Variance Estimation Module | Dynamically tracks observable variances for shot allocation | Initial uniform measurements bootstrap the process; continuous refinement improves efficiency [1] |
| Error Suppression Techniques | Reduces impact of hardware noise on measurements | Combining error suppression with error detection improves fidelity without full QEC overhead [7] |
| Analytical Landscape Solver | Determines optimal parameters without iterative optimization | Specific to operator pool type; enables gradient-free selection [5] |


The measurement costs associated with operator selection and gradient evaluations present a fundamental challenge for practical ADAPT-VQE implementation on near-term quantum hardware. Through systematic analysis of the algorithm's architectural components, we have identified the primary sources of shot costs and documented emerging methodologies that substantially reduce these requirements. The integration of measurement reuse strategies, variance-based shot allocation, and modified operator selection criteria demonstrably lowers shot costs by up to two orders of magnitude while maintaining chemical accuracy. These advances narrow the gap between theoretical algorithm design and practical execution, accelerating progress toward quantum utility in computational chemistry and drug development applications.

The Adaptive Derivative-Assembled Pseudo-Trotter Variational Quantum Eigensolver (ADAPT-VQE) has emerged as a promising approach to electronic structure problems in quantum chemistry on noisy quantum devices, representing a significant advance over fixed-ansatz approaches [8] [9]. Unlike traditional variational quantum algorithms that use pre-determined circuit structures, ADAPT-VQE dynamically constructs an ansatz by systematically adding fermionic operators one at a time, generating a problem-specific ansatz with a minimal number of parameters and shallower circuits [9] [10]. However, this adaptive flexibility comes at a significant cost: a dramatically increased quantum measurement overhead that presents a major bottleneck for practical implementations on current hardware [1] [3] [2].

This "double burden" arises from the algorithm's fundamental structure, which combines two measurement-intensive processes. First, like all Variational Quantum Eigensolvers, ADAPT-VQE must optimize parameters in a variational circuit—a process requiring repeated energy measurements to guide the classical optimizer. Second, and uniquely to adaptive approaches, the algorithm must continually grow and select the ansatz itself through operator gradient evaluations [1] [2]. This dual requirement of simultaneous parameter optimization and ansatz growth creates a perfect storm of measurement demands that this whitepaper will analyze in depth, providing both theoretical understanding and practical mitigation strategies for researchers and drug development professionals working at the intersection of quantum computing and molecular simulation.

The ADAPT-VQE Algorithm: Core Mechanism and Bottlenecks

Fundamental Algorithmic Structure

The ADAPT-VQE algorithm operates through an iterative process that systematically constructs a quantum circuit ansatz tailored to the specific molecular system being simulated. The algorithm begins with a simple reference state, typically the Hartree-Fock wavefunction, and progressively builds complexity by adding parameterized unitary operators selected from a predefined pool [9]. Mathematically, at iteration N, the wavefunction takes the form:

$$ |\psi^{(N)}\rangle = \prod_{i=1}^{N} e^{\theta_i \hat{A}_i} |\psi^{(0)}\rangle $$

where $|\psi^{(0)}\rangle$ denotes the initial state, $\hat{A}_i$ represents the fermionic anti-Hermitian operator introduced at the i-th iteration, and $\theta_i$ is its corresponding amplitude [8].

The critical innovation of ADAPT-VQE lies in its operator selection mechanism. At each iteration, the algorithm evaluates the energy gradient with respect to each potential operator in the pool and selects the one with the largest gradient magnitude [8] [9]. This operator is then appended to the growing ansatz, after which all parameters (both the new addition and previous parameters) are optimized variationally. This process continues until the energy converges to within a desired accuracy threshold, typically chemical accuracy (1.6 mHa or 1 kcal/mol) [3].

The "double burden" of ADAPT-VQE manifests through two interdependent measurement-intensive processes that collectively drive the high shot requirements:

Ansatz Growth Measurements: At each iteration, the algorithm must evaluate the gradient $\partial E^{(N)}/\partial \theta_i$ for every operator in the pool to identify the most promising candidate for inclusion [1] [2]. For a pool of size $M$, this requires $O(M)$ additional measurements per iteration beyond the energy evaluations needed for parameter optimization. With typical fermionic pools containing generalized single and double excitations, $M$ scales as $O(n^2 o^2)$, where $n$ and $o$ are the numbers of virtual and occupied orbitals respectively, creating a substantial measurement burden [3].

Parameter Optimization Measurements: Each time the ansatz grows, the expanded parameter set $\{\theta_i\}$ must be re-optimized through the standard VQE procedure [2]. This requires numerous energy evaluations to guide the classical optimizer, with each energy evaluation itself requiring many quantum measurements to estimate the expectation value $\langle \psi^{(N)} | \hat{H} | \psi^{(N)} \rangle$ of the molecular Hamiltonian [1] [9].

The interplay between these processes creates a compounding effect: as the ansatz grows, the parameter optimization becomes more costly due to the increasing parameter count, while the operator selection requires increasingly complex gradient calculations [1] [2]. This dual burden explains why ADAPT-VQE demands significantly more quantum measurements than either standard VQE with fixed ansätze or classical quantum chemistry methods, presenting a fundamental challenge for practical deployment on current quantum hardware.
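To make the compounding effect concrete, the sketch below tallies shots under a deliberately crude cost model. The function name, the flat per-observable shot budget, and the assumption that re-optimizing k parameters takes a fixed number of energy evaluations per parameter are all hypothetical choices made for illustration; they are not taken from the cited works.

```python
def adapt_vqe_shot_estimate(pool_size, n_iterations, shots_per_observable,
                            energy_evals_per_param=20):
    """Rough shot tally for an ADAPT-VQE run under a toy cost model.

    Assumptions (illustrative only):
      * each pool gradient and each energy evaluation costs
        `shots_per_observable` shots,
      * re-optimizing k parameters takes `energy_evals_per_param * k`
        energy evaluations.
    """
    selection = 0
    optimization = 0
    for k in range(1, n_iterations + 1):
        # Operator selection: one gradient estimate per pool operator.
        selection += pool_size * shots_per_observable
        # Parameter optimization: cost grows with the current ansatz size k.
        optimization += energy_evals_per_param * k * shots_per_observable
    return selection, optimization


selection, optimization = adapt_vqe_shot_estimate(
    pool_size=100, n_iterations=10, shots_per_observable=1_000)
print(f"selection shots:    {selection:,}")
print(f"optimization shots: {optimization:,}")
```

Even in this simplified model the selection cost grows linearly with the number of iterations while the optimization cost grows quadratically, which is the compounding behavior described above.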

Quantifying the Resource Burden: Experimental Data

Extensive numerical studies have quantified the substantial resource requirements of ADAPT-VQE and the performance improvements offered by various optimization strategies. The following table summarizes key experimental findings from recent research:

Table 1: Measurement Reduction Strategies in ADAPT-VQE

| Strategy | Molecule(s) Tested | Key Metric Improvement | Reference |
|---|---|---|---|
| Reused Pauli Measurements | H₂ to BeH₂ (4-14 qubits), N₂H₄ (16 qubits) | 32.29% average shot usage with grouping and reuse vs. naive measurement | [1] |
| Variance-Based Shot Allocation | H₂, LiH | Shot reductions of 6.71% (VMSA) and 43.21% (VPSR) for H₂ | [1] |
| CEO Pool + Improved Subroutines | LiH, H₆, BeH₂ (12-14 qubits) | Measurement costs reduced to 0.4-2% of original ADAPT-VQE | [3] |
| Greedy Gradient-Free Approach (GGA-VQE) | H₂O, LiH | 2-5 circuit measurements per iteration vs. thousands in standard ADAPT | [2] |
| Classical Pre-optimization (SWCS) | Molecules up to 52 spin-orbitals | Significant reduction in quantum processor measurements | [11] |

Further analysis reveals how these optimizations impact overall quantum computational resources across different molecular systems:

Table 2: Overall Resource Reductions in State-of-the-Art ADAPT-VQE

| Molecule | Qubit Count | CNOT Reduction | CNOT Depth Reduction | Measurement Cost Reduction | Reference |
|---|---|---|---|---|---|
| LiH | 12 | 88% | 96% | 99.6% | [3] |
| H₆ | 12 | 85% | 95% | 99.4% | [3] |
| BeH₂ | 14 | 82% | 94% | 99.2% | [3] |

These dramatic improvements highlight the potential of specialized optimization strategies to mitigate the double burden of ADAPT-VQE. The CEO-ADAPT-VQE* algorithm, which combines the novel Coupled Exchange Operator pool with other improvements, shows particularly large gains: the 99.2-99.6% reductions in Table 2 correspond to measurement costs more than two orders of magnitude (roughly 125 to 250 times) lower than in the original ADAPT-VQE formulation [3].

Methodologies for Shot Reduction: Experimental Protocols

Pauli Measurement Reuse and Commutativity-Based Grouping

The protocol for reusing Pauli measurements leverages the fact that the Hamiltonian and the gradient observables (commutators $[H, A_i]$) often share common Pauli terms [1]. The methodology proceeds as follows:

Initial Setup: During the classical precomputation phase, identify all Pauli strings present in both the Hamiltonian $H$ and the gradient observables $[H, A_i]$ for all operators $A_i$ in the pool. Construct a mapping between compatible terms.

Quantum Execution: For each VQE optimization cycle at iteration $N$:

- Measure the complete set of Hamiltonian Pauli terms, storing the results in a classical database.

- Reuse these measurement outcomes during the subsequent operator selection step by extracting the relevant Pauli string expectation values for gradient calculations.

- Supplement with additional measurements only for Pauli strings unique to the gradient observables.

Grouping Optimization: Apply qubit-wise commutativity (QWC) or more advanced commutativity-based grouping to both Hamiltonian and gradient observables, enabling simultaneous measurement of compatible terms and further reducing the total number of quantum circuit executions [1].

This protocol capitalizes on the significant overlap between the Pauli terms needed for energy estimation and those required for gradient calculations, effectively amortizing the measurement cost across both stages of the algorithm.
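The bookkeeping behind this protocol can be sketched in a few lines. The Pauli strings below are hypothetical placeholders rather than terms from a real molecular Hamiltonian, and the greedy grouping is a simple heuristic chosen for brevity, not necessarily the grouping used in [1].

```python
def qubit_wise_commute(p, q):
    """Two Pauli strings qubit-wise commute if, on every qubit, the letters
    are equal or at least one of them is the identity 'I'."""
    return all(a == b or a == "I" or b == "I" for a, b in zip(p, q))


def greedy_qwc_groups(paulis):
    """Put each Pauli string into the first group whose members all
    qubit-wise commute with it (a heuristic, not an optimal cover)."""
    groups = []
    for p in paulis:
        for group in groups:
            if all(qubit_wise_commute(p, q) for q in group):
                group.append(p)
                break
        else:
            groups.append([p])
    return groups


# Hypothetical Pauli strings, chosen only to illustrate the bookkeeping.
hamiltonian_terms = ["ZIII", "IZII", "ZZII", "XXYY", "YYXX"]
gradient_terms = ["ZZII", "XXYY", "XYYX", "IZII"]

measured = set(hamiltonian_terms)
reusable = [p for p in gradient_terms if p in measured]   # already measured for the energy
extra = [p for p in gradient_terms if p not in measured]  # need fresh measurements

groups = greedy_qwc_groups(hamiltonian_terms + extra)
print("reusable:", reusable)
print("extra:", extra)
print("measurement settings:", len(groups))
```

In this toy case three of the four gradient terms are read from stored Hamiltonian data, and the six distinct strings are covered by four measurement settings. Reuse is only valid when the stored outcomes were collected on the same state the gradients are evaluated on, which is the optimized state at the end of the VQE step.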

Variance-Based Shot Allocation

Variance-based shot allocation dynamically distributes quantum measurements based on the statistical properties of each observable, prioritizing terms with higher variance and greater impact on the final energy or gradient estimation [1]. The experimental protocol implements:

Variance Estimation: For each Pauli term $P_i$ in the Hamiltonian or gradient observables, estimate the variance $\sigma_i^2 = \langle P_i^2 \rangle - \langle P_i \rangle^2$ (which reduces to $1 - \langle P_i \rangle^2$ for a Pauli string, since $P_i^2 = I$) using an initial allocation of shots (e.g., 10% of the total budget).

Optimal Allocation: Calculate the optimal shot distribution using the theoretical framework of Rubin et al. [33] adapted for ADAPT-VQE: $$ n_i \propto |g_i|\sigma_i, \qquad n_i = N_{\text{total}} \frac{|g_i|\sigma_i}{\sum_j |g_j|\sigma_j} $$ where $n_i$ is the number of shots allocated to term $i$, $N_{\text{total}}$ is the total shot budget, $g_i$ is the coefficient of the Pauli term in the Hamiltonian or gradient observable, and $\sigma_i$ is the estimated standard deviation.

Iterative Refinement: For multi-step ADAPT-VQE procedures, update variance estimates and reallocate shots at regular intervals to adapt to changing circuit characteristics and operator compositions.

This methodology has demonstrated shot reductions of 43.21% for H₂ and 51.23% for LiH compared to uniform shot distribution, while maintaining chemical accuracy [1].
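A minimal sketch of the allocation step follows, assuming each term is a single Pauli string so that its standard deviation is $\sqrt{1 - \langle P \rangle^2}$ and assuming shots are split in proportion to the coefficient magnitude times that standard deviation. The function name, coefficients, and pilot expectation values are hypothetical; this is not the VMSA or VPSR implementation from [1].

```python
import math


def allocate_shots(coeffs, expectations, total_shots):
    """Split total_shots across Pauli terms in proportion to |g_i| * sigma_i,
    with sigma_i = sqrt(1 - <P_i>^2) estimated from pilot expectation values."""
    weights = [abs(g) * math.sqrt(max(0.0, 1.0 - e * e))
               for g, e in zip(coeffs, expectations)]
    norm = sum(weights)
    if norm == 0.0:
        # Every term looks deterministic: fall back to a uniform split.
        return [total_shots // len(coeffs)] * len(coeffs)
    # Rounding means the result may differ from total_shots by a few shots.
    return [round(total_shots * w / norm) for w in weights]


# Hypothetical coefficients and pilot estimates of <P_i>.
coeffs = [0.5, 0.5, 0.25]
pilot_expectations = [0.0, 0.6, 0.0]
shots = allocate_shots(coeffs, pilot_expectations, total_shots=10_000)
print(shots)
```

Terms with large coefficients and expectation values near zero (maximal variance) receive the most shots, while terms whose outcome is nearly deterministic receive few.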

Gradient-Free Greedy Optimization (GGA-VQE)

The Greedy Gradient-Free Adaptive VQE (GGA-VQE) protocol fundamentally reimagines the ADAPT-VQE optimization process to circumvent the high-dimensional parameter optimization problem [2] [12]. The experimental methodology includes:

Candidate Operator Screening: For each candidate operator $U_k(\theta_k)$ in the pool:

- Prepare the current ansatz state $|\psi^{(N)}\rangle$ on the quantum processor.

- Apply $U_k(\theta_k)$ with 3-5 different strategically chosen angle values $\theta_k^{(j)}$.

- For each angle, measure the energy using a limited shot budget (typically 10,000 shots or fewer for noisy simulations).

Analytical Curve Fitting: For each candidate, fit the measured energy points to a simple trigonometric function $E_k(\theta) = A\cos(\theta + \phi) + C$, which accurately captures the single-parameter energy dependence.

Optimal Parameter Selection: Analytically determine the optimal angle $\theta_k^*$ that minimizes the fitted energy function for each candidate operator.

Greedy Selection: From all candidates, select the operator $U_k^*$ and corresponding angle $\theta_k^*$ that yields the lowest predicted energy.

Ansatz Expansion: Append $U_k^*(\theta_k^*)$ to the growing ansatz with its parameter fixed, then proceed to the next iteration without global re-optimization of previous parameters.

This protocol dramatically reduces the quantum resources required, needing only 2-5 circuit measurements per candidate operator compared to the thousands required for full gradient calculations and parameter re-optimizations in standard ADAPT-VQE [2] [12].
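The curve-fitting and greedy-selection steps can be sketched as follows, assuming the single-parameter energy has exactly the sinusoidal form given above and is sampled at the three angles 0 and ±π/2. The sampling angles, function names, and toy energy landscapes are illustrative assumptions rather than the choices made in [2] [12]; with shot noise, more angles and a least-squares fit would be the natural extension.

```python
import math


def fit_sinusoid(e_zero, e_plus, e_minus):
    """Recover E(theta) = a*cos(theta) + b*sin(theta) + c from energies sampled
    at theta = 0, +pi/2 and -pi/2, and return (theta_min, e_min)."""
    c = 0.5 * (e_plus + e_minus)
    b = 0.5 * (e_plus - e_minus)
    a = e_zero - c
    amplitude = math.hypot(a, b)
    # a*cos + b*sin is smallest where (cos, sin) points along (-a, -b).
    theta_min = math.atan2(-b, -a)
    return theta_min, c - amplitude


def greedy_select(samples):
    """samples maps an operator label to (E(0), E(+pi/2), E(-pi/2)).
    Returns (label, theta_min, e_min) for the lowest fitted minimum."""
    best = None
    for label, energies in samples.items():
        theta, e_min = fit_sinusoid(*energies)
        if best is None or e_min < best[2]:
            best = (label, theta, e_min)
    return best


def toy_energy(theta, amp, phase, offset):
    """Stand-in for a measured energy: amp * cos(theta + phase) + offset."""
    return amp * math.cos(theta + phase) + offset


# Hypothetical (amplitude, phase, offset) landscapes for two candidate operators.
toy_params = {"op_a": (0.30, 0.4, -1.00), "op_b": (0.10, -1.2, -1.05)}
samples = {
    label: tuple(toy_energy(t, *p) for t in (0.0, math.pi / 2, -math.pi / 2))
    for label, p in toy_params.items()
}
label, theta_star, e_star = greedy_select(samples)
print(label, round(theta_star, 4), round(e_star, 4))
```

Although `op_b` starts from a lower offset, `op_a` has the larger amplitude and therefore the lower fitted minimum, so the greedy rule picks it together with its optimal angle in a single step.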

Visualization of Algorithmic Workflows

The following diagrams illustrate the core workflows of standard ADAPT-VQE and optimized variants, highlighting key bottlenecks and optimization points.

[Figure: Standard ADAPT-VQE Workflow]

[Figure: Optimized ADAPT-VQE with Mitigation Strategies]

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Computational Tools for ADAPT-VQE Research

| Tool Category | Specific Implementation | Function in Research | Key Features |
|---|---|---|---|
| Operator Pools | Fermionic GSD Pool [3] | Provides candidate operators for ansatz growth | Generalized single and double excitations |
| Operator Pools | Qubit Pool [3] | Qubit-efficient operator selection | Direct qubit operators, reduced circuit depth |
| Operator Pools | CEO Pool [3] | Enhanced efficiency for correlated systems | Coupled exchange operators, compact ansatz |
| Measurement Techniques | Qubit-Wise Commutativity (QWC) Grouping [1] | Reduces measurements via compatible term grouping | Simultaneous measurement of commuting terms |
| Measurement Techniques | Variance-Based Shot Allocation [1] | Optimizes shot distribution across terms | Prioritizes high-variance, high-impact measurements |
| Measurement Techniques | Pauli Measurement Reuse [1] | Amortizes measurement costs across algorithm stages | Reuses Hamiltonian measurements for gradients |
| Classical Computational Tools | Sparse Wavefunction Circuit Solver (SWCS) [11] | Classical pre-optimization to reduce quantum workload | Wavefunction truncation, computational cost reduction |
| Classical Computational Tools | Fragment Molecular Orbital (FMO) [13] | System decomposition for larger molecules | Divide-and-conquer approach, reduced qubit requirements |
| Hardware-Specific Optimizations | Hardware-Efficient Ansatz Elements [9] | Native gate utilization for specific quantum processors | Reduced circuit depth, improved fidelity |
| Hardware-Specific Optimizations | Error Mitigation Techniques [2] | Counteracts device noise in measurements | Readout error correction, zero-noise extrapolation |

The "double burden" of ADAPT-VQE—combining the measurement-intensive processes of parameter optimization and ansatz growth—represents a fundamental challenge for the practical deployment of adaptive quantum algorithms on current hardware. However, as this technical analysis demonstrates, significant progress has been made in developing sophisticated strategies to mitigate these resource demands.

The integration of measurement reuse protocols, variance-based shot allocation, gradient-free optimization, and novel operator pools has collectively reduced measurement costs by up to 99.6% compared to the original ADAPT-VQE formulation [3]. These advances, combined with classical pre-optimization techniques and fragment-based approaches, are steadily bridging the gap between theoretical potential and practical implementation.

For researchers and drug development professionals, these developments signal a promising trajectory toward quantum utility in molecular simulation. The successful implementation of greedy gradient-free algorithms on 25-qubit quantum hardware [2] [12] demonstrates that robust, measurement-efficient adaptive algorithms can already yield meaningful results on existing devices. As quantum hardware continues to improve in scale and fidelity, the optimized ADAPT-VQE variants discussed herein will play a crucial role in unlocking quantum advantage for real-world chemical and pharmaceutical applications, from catalyst design to drug discovery.

The Impact of Molecular Complexity and Pool Size on Measurement Scaling

In the pursuit of quantum advantage for molecular simulations, the Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) has emerged as a leading algorithm for the Noisy Intermediate-Scale Quantum (NISQ) era. However, a significant bottleneck hindering its practical application is the exorbitant number of quantum measurements, or "shots," required to achieve chemical accuracy [3]. This whitepaper examines the fundamental relationship between molecular complexity, the effective "pool size" of quantum operators, and the resultant measurement scaling. Understanding this relationship is crucial for developing more efficient protocols, particularly for researchers in drug development who require accurate molecular simulations.

ADAPT-VQE and the Quantum Measurement Challenge

Algorithmic Workflow and Resource Demands

ADAPT-VQE is a hybrid quantum-classical algorithm that constructs a problem-tailored ansatz dynamically. Unlike static approaches, it iteratively appends parameterized unitaries from a predefined operator pool, selected based on their energy gradients with respect to the current variational state [3]. This adaptive construction leads to shallower circuits and improved trainability but introduces a substantial quantum measurement overhead.

The primary resource consumption occurs in two critical steps:

- VQE Parameter Optimization: Iterative evaluation of the energy expectation value, which requires measuring the molecular Hamiltonian.

- Operator Selection: Calculation of energy gradients for every operator in the pool during each iteration to identify the most promising operator to add [14] [3].

The total number of shots required is a function of the number of iterations, the size of the operator pool, and the shot noise associated with measuring each observable on the quantum device.

The "Pool Size" Concept and its Implications

In the context of ADAPT-VQE, "pool size" directly refers to the number of quantum operators (e.g., excitation operators) available for selection during the adaptive process. A larger pool provides a richer search space for constructing the ansatz but linearly increases the measurement burden in the operator selection step, as gradients must be evaluated for each operator in every iteration [3]. This creates a critical trade-off between ansatz expressibility and measurement feasibility.

Figure 1. ADAPT-VQE Workflow and Shot Bottleneck. The gradient measurement step (red) scales linearly with the operator pool size (N), creating a major shot bottleneck [3].

Quantifying Molecular Complexity for Measurement Scaling

Molecular Assembly Theory

Molecular complexity is a key driver of the resources required for simulation. Assembly Theory provides a robust framework for quantifying molecular complexity through the Molecular Assembly (MA) index. The MA index quantifies the minimal number of steps required to construct a molecule from its basic building blocks, thereby reflecting the amount of information or "constrained history" embedded in its structure [15]. A higher MA index signifies a more complex molecule.

Experimental Proxies for Molecular Complexity

Calculating the exact MA index can be computationally intensive. Fortunately, research has demonstrated that the MA index can be directly inferred from standard spectroscopic techniques, making it an experimentally accessible metric [15]. Table 1 summarizes the correlation between spectral features and molecular complexity.

Table 1. Experimental Measurement of Molecular Complexity via Spectroscopy [15]

| Spectroscopic Technique | Measurable Proxy | Relationship to Molecular Assembly (MA) Index |
|---|---|---|
| Infrared (IR) Spectroscopy | Number of unique absorption bands in the fingerprint region (400-1500 cm⁻¹) | Linear correlation (Pearson coefficient: 0.86); MA = 0.21 × n_peaks − 0.15 |
| Nuclear Magnetic Resonance (NMR) | Number of magnetically inequivalent carbon resonances | Reflects unique atomic environments; higher complexity reduces magnetic equivalence |
| Tandem Mass Spectrometry (MS/MS) | Number of unique molecular fragments | Correlates with the diversity of constructible substructures |
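The IR relationship quoted in the table is a simple linear fit and can be applied directly. The helper below is a hypothetical convenience function; the peak counts passed to it are arbitrary example inputs, not measured spectra.

```python
def ma_from_ir_peaks(n_peaks):
    """Estimate the Molecular Assembly index from the number of unique
    fingerprint-region IR bands, using MA = 0.21 * n_peaks - 0.15."""
    return 0.21 * n_peaks - 0.15


for n_peaks in (10, 20, 40):
    print(n_peaks, round(ma_from_ir_peaks(n_peaks), 2))
```

Because the underlying correlation has a Pearson coefficient of 0.86, the result is best read as a rough estimate rather than an exact MA value.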

The proposed link to ADAPT-VQE is that molecules with higher MA indices typically require more complex electron correlation descriptions. This, in turn, calls for larger operator pools and longer adaptive cycles in ADAPT-VQE. Because the per-iteration selection cost grows with pool size and the number of iterations grows as well, the total shot count needed for convergence rises steeply.

Strategies for Shot Reduction in ADAPT-VQE

Addressing the shot problem requires integrated strategies that target both the operator pool and the measurement process itself. Recent research has yielded significant improvements.

Advanced Operator Pools

The choice of operator pool profoundly impacts efficiency. The novel Coupled Exchange Operator (CEO) pool demonstrates a dramatic reduction in quantum resources compared to traditional fermionic pools (e.g., Generalized Single and Double excitations). The CEO pool is designed with hardware efficiency and minimal completeness in mind, leading to shallower circuits and fewer required iterations [3].

Table 2. Resource Reduction of CEO-ADAPT-VQE* vs. Original ADAPT-VQE [3]

| Molecule (Qubits) | CNOT Count Reduction | CNOT Depth Reduction | Measurement Cost Reduction |
|---|---|---|---|
| LiH (12) | 88% | 96% | 99.6% |
| H₆ (12) | Not Specified | Not Specified | 99.6% |
| BeH₂ (14) | Up to 88% | Up to 96% | Up to 99.6% |

Improved Measurement Protocols

Beyond pool design, two key protocols directly reduce shot overhead:

- Reusing Pauli Measurements: Pauli measurement outcomes obtained during the VQE parameter optimization step can be classically post-processed and reused in the subsequent operator selection step. This avoids redundant measurements of the same operators across different algorithmic stages [14].

- Variance-Based Shot Allocation: Instead of using a fixed number of shots for all measurements, this technique allocates shots proportionally to the variance of each observable. Operators with higher uncertainty (variance) receive more shots, optimizing the overall use of quantum resources to achieve a target precision [14].

Figure 2. Variance-Based Shot Allocation. This protocol optimizes measurement efficiency by dynamically directing shots toward higher-variance observables [14].

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 3. Key Reagents and Computational Tools for ADAPT-VQE and Complexity Analysis

| Item / Solution | Function / Description | Application Context |
|---|---|---|
| CEO Operator Pool | A novel, hardware-efficient operator pool that reduces circuit depth and iteration count. | ADAPT-VQE ansatz construction [3] |
| Variance-Based Allocation Algorithm | A classical routine that optimizes quantum shot distribution based on real-time variance estimation. | Quantum measurement optimization [14] |
| Assembly Index Algorithm | A software tool (e.g., in Go) to compute the Molecular Assembly index from a molecular graph. | Quantifying molecular complexity [15] |
| xTB Software Package | A semi-empirical quantum chemistry program for fast geometry optimization and IR spectrum calculation. | Predicting IR spectra for MA estimation [15] |

The high shot requirement in ADAPT-VQE is not an isolated problem but a direct consequence of the interplay between molecular complexity and the computational strategy employed. Complex molecules, quantified by a high Molecular Assembly index, demand larger operator pools and more iterations, leading to unfavorable measurement scaling. The path forward lies in the co-design of algorithmic components: employing chemically-inspired, minimal operator pools like the CEO pool, and implementing smart measurement protocols that reuse data and allocate shots optimally. The integration of these strategies, as demonstrated by state-of-the-art variants like CEO-ADAPT-VQE*, reduces measurement costs by over 99%, providing a viable path toward practical quantum advantage in drug development and material science.

ADAPT-VQE in Practice: Standard Protocols and Measurement Workflows

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) is an advanced hybrid quantum-classical algorithm designed to compute the ground-state energy of molecular systems more efficiently than standard VQE. Developed to address key limitations of fixed ansatz approaches, ADAPT-VQE iteratively constructs a problem-specific quantum circuit (ansatz) by dynamically selecting operators from a predefined pool based on their potential to lower the energy expectation value [16] [17]. This adaptive growth results in a more compact and chemically meaningful ansatz, helping to mitigate issues like deep quantum circuits and the barren plateau phenomenon often encountered in hardware-efficient or unitary coupled cluster (UCC) ansatzes [1] [5].

This guide breaks down the standard ADAPT-VQE algorithm in the context of a pressing research question: Why does ADAPT-VQE require so many quantum measurements (shots)? The high shot overhead is a significant bottleneck for its practical application on near-term quantum devices [1] [18]. We will explore the algorithm's workflow, the source of its measurement demands, and emerging strategies to enhance its shot-efficiency.

Algorithm Workflow: A Step-by-Step Guide

The ADAPT-VQE algorithm follows an iterative procedure to build its ansatz. The flowchart below visualizes this workflow, with detailed steps following.

Step 1: Initialization

The algorithm begins by preparing an initial reference state, typically the Hartree-Fock (HF) wavefunction $|\Psi_{\mathrm{HF}}\rangle$, which serves as the starting point for the adaptive ansatz [16] [17]. A crucial preparatory step is defining an operator pool. In the standard fermionic ADAPT-VQE, this pool consists of all possible spin-compatible single and double excitation operators derived from the UCCSD ansatz:

- Single excitations: $\hat{A}_{p,q} = a_p^\dagger a_q - a_q^\dagger a_p$

- Double excitations: $\hat{A}_{pq,rs} = a_p^\dagger a_q^\dagger a_s a_r - a_r^\dagger a_s^\dagger a_q a_p$

The size of this pool grows as $\mathcal{O}(N^2 n^2)$, where $N$ is the number of spin-orbitals and $n$ is the number of electrons [18]. This polynomial scaling is a primary contributor to the algorithm's measurement overhead.
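To get a feel for these pool sizes, the sketch below counts occupied-to-virtual single and double excitations for a given number of spin-orbitals and electrons. It assumes the lowest spin-orbitals are occupied and applies no spin or point-group symmetry filter, so it gives an upper bound on a spin-compatible pool; the function name is hypothetical.

```python
from itertools import combinations


def uccsd_pool_size(n_spin_orbitals, n_electrons):
    """Count occupied->virtual single and double excitations, ignoring spin
    and symmetry filters (an upper bound on a spin-adapted UCCSD pool)."""
    occupied = range(n_electrons)
    virtual = range(n_electrons, n_spin_orbitals)
    singles = [(i, a) for i in occupied for a in virtual]
    doubles = [(i, j, a, b)
               for i, j in combinations(occupied, 2)
               for a, b in combinations(virtual, 2)]
    return len(singles), len(doubles)


# Minimal-basis examples: (spin-orbitals, electrons).
for name, n_so, n_el in [("H2", 4, 2), ("LiH", 12, 4), ("BeH2", 14, 6)]:
    singles, doubles = uccsd_pool_size(n_so, n_el)
    print(f"{name}: {singles} singles, {doubles} doubles, {singles + doubles} total")
```

The doubles dominate quickly: going from 4 to 14 spin-orbitals takes the unfiltered pool from 5 to 468 operators, each of which needs its own gradient estimate in every iteration.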

Step 2: Gradient Measurement and Operator Selection

For the current parameterized ansatz state $|\Psi^{(k-1)}\rangle$ at iteration $k$, the algorithm computes the energy gradient with respect to the parameter of each operator $A_m$ in the pool. This gradient is given by the expression: $$ \frac{\partial E^{(k-1)}}{\partial \theta_m} = \langle \Psi^{(k-1)} | [H, A_m] | \Psi^{(k-1)} \rangle $$ This commutator-based metric estimates how much the energy would change if the operator $A_m$ were added to the circuit [16]. The operator with the largest gradient magnitude is selected for inclusion in the ansatz. This step requires evaluating the expectation value of the commutator $[H, A_m]$ for every operator in the pool, a process that demands a vast number of quantum measurements.
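The commutator expression can be checked numerically on a toy problem. The sketch below uses a single qubit with a hypothetical Hamiltonian X + 0.5 Z, the anti-Hermitian generator −iY (a real matrix), and the reference state |0⟩, and compares the commutator expectation value with a finite-difference derivative of the energy. On hardware the commutator would instead be expanded into Pauli strings and estimated from shots.

```python
import math


def matmul(a, b):
    """Product of two real 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def expectation(op, psi):
    """<psi|op|psi> for a real 2-component state and a real 2x2 matrix."""
    return sum(psi[i] * op[i][j] * psi[j] for i in range(2) for j in range(2))


def commutator(h, a):
    ha, ah = matmul(h, a), matmul(a, h)
    return [[ha[i][j] - ah[i][j] for j in range(2)] for i in range(2)]


def rotate(theta, psi):
    """Apply exp(theta * A) for A = [[0, -1], [1, 0]], i.e. A = -iY."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * psi[0] - s * psi[1], s * psi[0] + c * psi[1]]


H = [[0.5, 1.0], [1.0, -0.5]]   # toy Hamiltonian X + 0.5 Z
A = [[0.0, -1.0], [1.0, 0.0]]   # anti-Hermitian generator -iY
psi = [1.0, 0.0]                # reference state |0>

# Gradient from the commutator formula <psi|[H, A]|psi>.
grad_commutator = expectation(commutator(H, A), psi)

# Central finite difference of E(theta) = <psi|exp(-theta A) H exp(theta A)|psi>.
eps = 1e-6
grad_finite_diff = (expectation(H, rotate(eps, psi))
                    - expectation(H, rotate(-eps, psi))) / (2 * eps)

print(grad_commutator, grad_finite_diff)
```

Both routes give a gradient of 2 for this toy case, which matches the analytic energy E(θ) = 0.5 cos 2θ + sin 2θ differentiated at θ = 0.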

Step 3: Ansatz Growth and Parameter Optimization

The selected operator (e.g., $e^{\theta_m A_m}$) is appended to the existing ansatz circuit, introducing a new variational parameter $\theta_m$ [19] [16]. The ansatz at iteration $k$ takes the form of a disentangled UCC ansatz: $$ |\Psi^{(k)}\rangle = \left( \prod_{i=1}^{k} e^{\theta_i A_i} \right) |\Psi_{\mathrm{HF}}\rangle $$ After growing the ansatz, a full variational optimization of all parameters (the new parameter and all previously introduced parameters) is performed using the standard VQE routine to minimize the expectation value of the Hamiltonian $\langle \Psi | H | \Psi \rangle$ [16] [5]. This optimization itself is shot-intensive.

Step 4: Convergence Check

The process repeats from Step 2 until a convergence criterion is met. The standard criterion is that the norm of the gradient vector falls below a predefined threshold (e.g., $10^{-3}$) [19] [16]. Upon convergence, the algorithm outputs the final energy and the adaptively constructed ansatz circuit.

The Scientist's Toolkit: Key Components for an ADAPT-VQE Experiment

The table below catalogs the essential "research reagents" or components required to implement the ADAPT-VQE algorithm, based on standard implementations in software libraries like InQuanto, PennyLane, and OpenVQE [19] [16] [17].

| Component | Function & Purpose | Example Form/Type |
|---|---|---|
| Molecular Hamiltonian | Hermitian operator ($H$) representing the system's energy; its expectation value is minimized. | Fermionic or qubit (Pauli string) form [1] [18]. |
| Reference State | Initial state for the variational circuit; provides a chemically reasonable starting point. | Hartree-Fock state ($\lvert\Psi_{\mathrm{HF}}\rangle$) [16] [17]. |
| Operator Pool | Collection of generators from which the ansatz is built; defines the search space. | UCCSD excitations [19] [18], Qubit excitations [18]. |
| Classical Minimizer | Classical optimization algorithm that updates variational parameters to minimize energy. | L-BFGS-B, COBYLA [19] [16]. |
| Gradient Protocol | Method for evaluating the selection criterion ($\langle [H, A_m] \rangle$) for operator choice. | Commutator measurement [16], Statevector simulation [19]. |
| Quantum Device/Simulator | Platform for executing quantum circuits and measuring expectation values. | Statevector simulator (e.g., Qulacs) [19], QPU [5]. |

Why ADAPT-VQE Is Shot-Intensive: A Detailed Analysis

The measurement overhead in ADAPT-VQE stems from three sources, which are summarized in the table below.

| Source of Overhead | Description | Impact on Shot Count |
|---|---|---|
| Operator Selection (Gradient Evaluation) | Requires measuring the commutator $[H, A_m]$ for every operator $A_m$ in a large pool (e.g., $\mathcal{O}(N^2 n^2)$ for UCCSD) in every iteration [1] [18]. | This is the dominant cost. For example, a 14-qubit system (BeH₂) can have a pool of hundreds to thousands of operators, each requiring many shots for a precise gradient estimate [1]. |
| Parameter Optimization | Each iteration introduces a new parameter. Optimizing an m-parameter ansatz requires many energy evaluations (each needing many shots) during the VQE sub-routine [5] [18]. | The cost of optimization scales with the number of parameters and the complexity of the energy landscape. Noisy measurements can slow convergence, increasing total shots [5]. |
| Ansatz Growth | The number of iterations (and thus the cumulative measurement cost) can be large before convergence is reached, especially for strongly correlated systems [18]. | More iterations mean repeated cycles of expensive gradient measurements and optimizations. |

The Core Problem: Scaling of the Operator Pool

The most significant factor is the sheer size of the operator pool. For a UCCSD-type pool, the number of operators scales polynomially with system size. In each iteration, the expectation value of a distinct observable ($[H, A_m]$) must be measured for every single operator in this pool to identify the one with the largest gradient. Since quantum measurements are probabilistic, a sufficiently large number of shots (repetitions of the circuit) is required for each measurement to achieve a statistically significant result, leading to an immense total shot count [1] [18].
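A standard sampling estimate, stated here as general background rather than as a result from the cited works, shows how quickly the per-observable cost grows. For a single Pauli string $P$ measured with $N_{\text{shots}}$ repetitions, the standard error $\epsilon$ of the estimated expectation value satisfies:

$$ \epsilon = \sqrt{\frac{1 - \langle P \rangle^2}{N_{\text{shots}}}} \quad \Rightarrow \quad N_{\text{shots}} = \frac{1 - \langle P \rangle^2}{\epsilon^2} \le \frac{1}{\epsilon^2} $$

In the worst case ($\langle P \rangle = 0$), a target precision of $\epsilon = 10^{-3}$ therefore requires about $10^6$ shots for one Pauli string. Each commutator expands into many such strings, and the procedure is repeated for every operator in the pool at every iteration.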

Advanced Protocols: Strategies for Shot Reduction

Research into mitigating ADAPT-VQE's shot overhead is active and diverse. The following table compares several key strategies.

| Strategy | Core Principle | Reported Efficacy |
|---|---|---|
| Reused Pauli Measurements & Variance-Based Allocation [1] | Reuses Pauli measurement outcomes from VQE optimization in subsequent gradient steps. Allocates shots based on term variance. | Reduces average shot usage to 32.29% of the naive approach when combined [1]. |
| Batched ADAPT-VQE [18] | Adds multiple operators with the largest gradients simultaneously in one iteration. | Reduces the number of gradient computation cycles, directly cutting the dominant measurement overhead [18]. |
| Greedy Gradient-Free Adaptive VQE (GGA-VQE) [5] | Replaces gradient measurements with a direct, analytical energy-sorting method to select operators and their parameters. | Avoids noisy gradient measurements entirely, demonstrating improved resilience to statistical noise [5]. |
| Classical Pre-optimization (SWCS) [11] | Uses classical sparse wavefunction circuit solvers to perform ADAPT-VQE and identify a compact ansatz before using a QPU. | Minimizes work on noisy quantum hardware by leveraging high-performance classical computing [11]. |
| AI-Driven Shot Allocation [20] | Employs reinforcement learning to dynamically assign measurement shots across VQE optimization iterations. | Learns to minimize total shots while ensuring convergence, reducing reliance on hand-crafted heuristics [20]. |

Detailed Protocol: Batched ADAPT-VQE

The batched ADAPT-VQE protocol modifies Step 2 of the standard algorithm [18]:

- Gradient Measurement: Compute gradients $\frac{\partial E}{\partial \theta_m}$ for all operators in the pool, as in the standard algorithm.

- Batch Selection: Instead of selecting a single operator, identify the top-$k$ operators with the largest gradient magnitudes.

- Ansatz Growth: Append all $k$ selected operators to the ansatz simultaneously, introducing $k$ new parameters at once.

- Parameter Optimization: Perform a single VQE optimization to optimize all parameters (old and new).

This protocol reduces the number of iterative cycles required for ansatz construction. Since each cycle involves the expensive gradient measurement over the entire pool, batching operators can lead to a substantial reduction in the total number of these measurements, thereby saving shots [18].
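The batch-selection step amounts to a top-k ranking by gradient magnitude. A minimal sketch, with hypothetical operator labels and gradient values standing in for measured data:

```python
def select_batch(gradients, k):
    """Return the labels of the k pool operators with the largest |gradient|."""
    ranked = sorted(gradients, key=lambda label: abs(gradients[label]), reverse=True)
    return ranked[:k]


# Hypothetical measured gradients for a five-operator pool.
measured_gradients = {"A1": 0.02, "A2": -0.31, "A3": 0.12, "A4": -0.005, "A5": 0.27}
batch = select_batch(measured_gradients, k=2)
print(batch)
```

Ranking uses the absolute value because a large negative gradient is as useful for lowering the energy as a large positive one; only the sign of the optimized parameter differs.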

The standard ADAPT-VQE algorithm provides a systematic, chemically motivated path to constructing accurate, problem-tailored ansatzes for quantum simulation. Its iterative structure, which relies on repeated gradient measurements over a large operator pool and subsequent variational optimization, is the fundamental reason for its high demand for quantum measurements. This shot overhead currently presents the primary barrier to its practical application on noisy intermediate-scale quantum devices.

However, as outlined in this guide, the field is responding with a suite of sophisticated strategies—from measurement reuse and batching to classical pre-optimization and machine learning—aimed at taming this overhead. The future of practical quantum chemistry on near-term devices will likely hinge on the continued refinement and integration of these shot-efficient techniques into the robust framework of adaptive algorithms like ADAPT-VQE.

The Role of the Operator Pool and Commutativity in Measurement Requirements

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) represents a promising approach for quantum simulation of molecular systems on Noisy Intermediate-Scale Quantum (NISQ) devices. Unlike fixed-structure ansätze such as Unitary Coupled Cluster (UCCSD), ADAPT-VQE iteratively constructs a problem-tailored ansatz by dynamically appending parameterized unitary operators from a predefined operator pool [1] [2]. This adaptive construction offers significant advantages, including reduced circuit depth and mitigation of barren plateau problems [1] [3]. However, a critical challenge impedes its practical implementation: the algorithm exhibits exceptionally high quantum measurement overhead, requiring thousands to millions of circuit executions (shots) to achieve chemical accuracy [1] [2].

This measurement overhead stems fundamentally from two algorithm components: the operator selection process and the parameter optimization routine. Both components rely heavily on evaluating expectation values and gradients through quantum measurements [2]. The characteristics of the operator pool—particularly the commutativity relationships between operators—directly influence the efficiency of these quantum measurements. This technical analysis examines the intrinsic relationship between operator pool design, commutativity properties, and the resulting shot requirements, framing this discussion within the broader research question: Why does ADAPT-VQE require so many shots?

Theoretical Framework of ADAPT-VQE and Measurement Overhead

ADAPT-VQE Algorithmic Structure

The ADAPT-VQE algorithm follows an iterative procedure where each iteration consists of two core steps:

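Step 1: Operator Selection. At iteration m, with a current parameterized ansatz wavefunction |Ψ⁽ᵐ⁻¹⁾⟩, the algorithm selects the next operator from a pool 𝕌 by identifying the unitary operator 𝒰* ∈ 𝕌 that maximizes the magnitude of the gradient of the energy expectation value [2]:

\[ \mathcal{U}^{*} = \underset{\mathcal{U} \in \mathbb{U}}{\arg\max} \left| \frac{\partial}{\partial \theta} \langle \Psi^{(m-1)} | \, \mathcal{U}(\theta)^{\dagger} \hat{H} \, \mathcal{U}(\theta) \, | \Psi^{(m-1)} \rangle \Big|_{\theta = 0} \right| \]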

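This gradient can be expressed as the expectation value of a commutator [21]:

\[ \frac{\partial E}{\partial \theta_N} \Big|_{\theta_N = 0} = \langle \Psi^{(m-1)} | \, [\hat{H}, \hat{A}_N] \, | \Psi^{(m-1)} \rangle \]

where \( \hat{A}_N \) is the anti-Hermitian generator of the N-th pool operator, \( \mathcal{U}_N(\theta_N) = e^{\theta_N \hat{A}_N} \).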

This requires measuring the commutator of the Hamiltonian with every operator in the pool [21].
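A small numerical check can make the commutator form of the gradient concrete. The sketch below uses dense matrices on two qubits, which is only feasible for toy sizes; the Hamiltonian coefficients, the generator Â = iP with P = Y⊗X, and the reference state |00⟩ are illustrative assumptions, not values from the cited works. It compares the commutator expectation value with a central finite difference of the energy.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)

# Toy two-qubit Hamiltonian and pool generator (illustrative choices).
H = 0.5 * np.kron(Z, I2) + 0.3 * np.kron(Z, Z) + 0.2 * np.kron(X, X)
P = np.kron(Y, X)      # Pauli string, so P @ P is the identity
A = 1j * P             # anti-Hermitian generator of U(theta) = exp(theta * A)

psi = np.zeros(4, dtype=complex)
psi[0] = 1.0           # reference state |00>

def commutator_gradient(H, A, psi):
    """dE/dtheta at theta = 0, computed as <psi|[H, A]|psi>."""
    comm = H @ A - A @ H
    return float(np.real(np.vdot(psi, comm @ psi)))

def energy(theta, H, P, psi):
    """Energy of exp(i*theta*P)|psi>, using exp(i*theta*P) = cos(theta) I + i sin(theta) P."""
    U = np.cos(theta) * np.eye(len(psi)) + 1j * np.sin(theta) * P
    phi = U @ psi
    return float(np.real(np.vdot(phi, H @ phi)))

grad = commutator_gradient(H, A, psi)
eps = 1e-5
finite_diff = (energy(eps, H, P, psi) - energy(-eps, H, P, psi)) / (2 * eps)
print(grad, finite_diff)  # the two estimates should agree closely
```

On hardware the commutator is not formed as a matrix; it is expanded into Pauli strings whose expectation values are estimated from shots, which is where the measurement cost discussed here arises.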

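Step 2: Global Parameter Optimization. After appending the selected operator, all parameters in the expanded ansatz are optimized to minimize the energy expectation value [2]:

\[ \vec{\theta}^{(m)} = \underset{\vec{\theta}}{\arg\min} \, \langle \Psi^{(m)}(\vec{\theta}) | \, \hat{H} \, | \Psi^{(m)}(\vec{\theta}) \rangle \]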

This requires extensive measurements to evaluate the energy during classical optimization [1].

The high shot requirements in ADAPT-VQE originate from several fundamental aspects of the algorithm:

Gradient Evaluation for Operator Selection: Each iteration requires estimating the gradient for every operator in the pool, which involves measuring the expectation value of the commutator [Ĥ, Â_N] for each pool operator [1] [21]. For large pools, this process dominates the measurement cost.

Energy Evaluation During Optimization: The variational optimization loop requires numerous energy evaluations, each requiring significant quantum measurements [2]. As the ansatz grows with each iteration, the optimization becomes increasingly costly.

Statistical Precision Requirements: Quantum measurements are inherently probabilistic, requiring many shots (circuit repetitions) to obtain statistically precise estimates of expectation values [22] [23]. The default shot count on platforms like IBM Q Experience is 1,024, reflecting this fundamental statistical requirement [23].
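The statistical precision requirement can be quantified with a short calculation. The sketch below assumes a single Pauli observable with outcomes ±1, for which the single-shot variance is 1 − ⟨P⟩² and the standard error of the sample mean falls as one over the square root of the shot count; the function names and the target precision of 10⁻³ are illustrative assumptions.

```python
import math

def standard_error(expectation, shots):
    """Standard error of the sample mean of a +/-1-valued Pauli measurement."""
    variance = 1.0 - expectation ** 2
    return math.sqrt(variance / shots)

def shots_for_precision(expectation, epsilon):
    """Smallest shot count whose standard error is at most epsilon."""
    variance = 1.0 - expectation ** 2
    return max(1, math.ceil(variance / epsilon ** 2))

# Worst case <P> = 0: 1,024 shots give a standard error of 1/32.
se_default = standard_error(0.0, 1024)
# Reaching a precision of 1e-3 on the same observable needs roughly a million shots.
shots_fine = shots_for_precision(0.0, 1e-3)
print(se_default, shots_fine)
```

Because the shot count scales as 1/ε², tightening the precision tenfold costs a hundredfold more shots per observable, and this cost is paid again for every measured term, every optimizer step, and every ADAPT-VQE iteration.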

Operator Pool Design and Commutativity Effects

Operator Pool Characteristics and Measurement Costs

The design of the operator pool directly influences measurement requirements through multiple mechanisms:

Pool Size and Composition: The number of operators in the pool determines how many gradient evaluations must be performed each iteration. Early ADAPT-VQE implementations used fermionic excitation pools with generalized single and double (GSD) excitations, leading to pools that scale as O(N⁴) with qubit count N [3]. Each operator requires measuring its gradient with the Hamiltonian, creating substantial overhead.

Novel Pool Designs: Recent research has introduced more efficient pool designs to reduce measurement requirements:

Coupled Exchange Operator (CEO) Pool: This novel approach uses coupled exchange operators to dramatically reduce quantum computational resources. Compared to early ADAPT-VQE versions, CEO pools reduce CNOT count, CNOT depth, and measurement costs by up to 88%, 96%, and 99.6%, respectively, for molecules represented by 12 to 14 qubits [3].

Qubit-Excitation-Based (QEB) Pools: These hardware-efficient pools exploit qubit connectivity to reduce measurement overhead while maintaining convergence properties [3].

Table 1: Impact of Operator Pool Design on Resource Requirements for Selected Molecules

| Molecule | Qubit Count | Algorithm Version | CNOT Count Reduction | CNOT Depth Reduction | Measurement Cost Reduction |
|---|---|---|---|---|---|
| LiH | 12 | CEO-ADAPT-VQE* | 88% | 96% | 99.6% |
| H₆ | 12 | CEO-ADAPT-VQE* | 85% | 94% | 99.4% |
| BeH₂ | 14 | CEO-ADAPT-VQE* | 87% | 92% | 99.5% |

Commutativity in Measurement Optimization

Commutativity relationships between operators play a crucial role in optimizing measurement strategies:

Qubit-Wise Commutativity (QWC) Grouping: Operators that qubit-wise commute can be measured simultaneously in the same circuit execution, significantly reducing shot requirements [1]. This grouping strategy is particularly effective for the Pauli strings that result from the commutator [Ĥ, Â_N] evaluations in the gradient measurements.

Commutator-Based Grouping: Recent advances group commutators of single Hamiltonian terms with multiple pool operators, resulting in approximately 2N or fewer mutually commuting sets [1]. This approach leverages the algebraic structure of the operators to minimize the number of distinct measurement bases.

Measurement Reuse Strategy: Pauli measurement outcomes obtained during VQE parameter optimization can be reused in subsequent operator selection steps, leveraging overlapping Pauli strings between the Hamiltonian and the commutator expressions [1]. When combined with measurement grouping, this strategy can reduce average shot usage to 32.29% of that required by a naive full measurement scheme [1].
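Qubit-wise commutativity grouping, described above, can be illustrated with a simple greedy pass over Pauli strings. This is a minimal sketch, assuming Pauli strings are written as plain text over the letters I, X, Y, Z; the first-fit heuristic and the sample terms are illustrative and are not the specific grouping routine used in [1].

```python
def qubit_wise_commute(p, q):
    """Two Pauli strings qubit-wise commute if, on every qubit, the two
    letters are equal or at least one of them is the identity 'I'."""
    return all(a == b or a == "I" or b == "I" for a, b in zip(p, q))

def greedy_qwc_groups(paulis):
    """First-fit grouping: place each string in the first compatible group."""
    groups = []
    for p in paulis:
        for group in groups:
            if all(qubit_wise_commute(p, q) for q in group):
                group.append(p)
                break
        else:
            # No existing group is compatible, so open a new one.
            groups.append([p])
    return groups

# Six illustrative four-qubit Pauli strings.
terms = ["ZZII", "ZIZI", "IIZZ", "XXII", "IXXI", "YYII"]
groups = greedy_qwc_groups(terms)
print(groups)
# [['ZZII', 'ZIZI', 'IIZZ'], ['XXII', 'IXXI'], ['YYII']]
```

Each group shares one measurement basis, so the six terms here need three distinct circuits instead of six. Greedy first-fit does not guarantee the minimum number of groups, but it is cheap and runs once on the classical side.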

Table 2: Shot Reduction Strategies and Their Effectiveness

| Strategy | Method Description | Key Mechanism | Reported Effect on Shot Count |
|---|---|---|---|
| Measurement Reuse | Reusing Pauli measurements from VQE optimization in gradient evaluations | Overlapping Pauli strings between Hamiltonian and commutators | 32.29% of original shots (with grouping) [1] |
| Variance-Based Shot Allocation | Allocating shots based on variance of Hamiltonian and gradient terms | Theoretical optimum budget allocation [1] | 6.71-51.23% reduction (vs uniform) [1] |
| Commutativity Grouping | Grouping commuting terms from Hamiltonian and gradient observables | Qubit-wise commutativity (QWC) | 38.59% of original shots (grouping alone) [1] |
| Gradient-Free Optimization | GGA-VQE using analytic curve fitting instead of gradient measurements | Eliminates direct gradient measurement | 2-5 measurements per iteration [12] |
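The idea behind variance-based shot allocation can be illustrated with the textbook rule for estimating a weighted sum of independently measured terms: for a fixed total budget, the variance of the estimate is minimized when each term receives shots in proportion to |coefficient| × standard deviation. The sketch below assumes this rule and made-up coefficients and standard deviations; it is not the specific allocation scheme of [1], and the function names are hypothetical.

```python
import numpy as np

def allocate_shots(coeffs, sigmas, total_shots):
    """Split total_shots across terms in proportion to |c_i| * sigma_i."""
    weights = np.abs(np.asarray(coeffs, dtype=float)) * np.asarray(sigmas, dtype=float)
    raw = total_shots * weights / weights.sum()
    shots = np.floor(raw).astype(int)
    # Give the shots lost to rounding to the largest fractional remainders.
    leftover = int(total_shots - shots.sum())
    order = np.argsort(-(raw - shots))
    shots[order[:leftover]] += 1
    return shots

def estimator_variance(coeffs, sigmas, shots):
    """Variance of sum_i c_i * mean_i, assuming every term gets at least one shot."""
    c = np.asarray(coeffs, dtype=float)
    s = np.asarray(sigmas, dtype=float)
    return float(np.sum(c ** 2 * s ** 2 / np.asarray(shots, dtype=float)))

coeffs = [0.8, 0.1, 0.1]   # illustrative Hamiltonian coefficients
sigmas = [1.0, 1.0, 0.5]   # illustrative per-term standard deviations
budget = 1000

weighted = allocate_shots(coeffs, sigmas, budget)
uniform = np.array([334, 333, 333])
print(weighted, estimator_variance(coeffs, sigmas, weighted))
print(uniform, estimator_variance(coeffs, sigmas, uniform))
```

In this toy case the weighted allocation concentrates shots on the dominant term and yields a noticeably lower variance than the uniform split for the same budget. In practice the standard deviations are not known in advance and have to be estimated from an initial batch of measurements.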

Experimental Protocols and Methodologies

Shot-Efficient ADAPT-VQE Protocol

Recent research has developed integrated protocols to reduce shot requirements in ADAPT-VQE:

Protocol 1: Reused Pauli Measurements with Variance-Based Allocation

- Initial Setup: Perform commutativity analysis of Hamiltonian and pool operators (can be done once during setup) [1]

- VQE Optimization Phase: Execute quantum circuits with optimal shot allocation based on term variances [1]

- Measurement Storage: Cache Pauli measurement outcomes for reuse [1]

- Operator Selection: Reuse relevant Pauli measurements for gradient evaluations of pool operators [1]

- Iterative Update: Repeat the VQE optimization, measurement storage, and operator selection steps for each ADAPT-VQE iteration [1]
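The measurement storage and reuse steps of this protocol can be sketched with a small cache keyed by Pauli string. Everything here is an illustrative assumption: the class `PauliCache`, the stand-in `fake_measure` backend, and the toy Hamiltonian and gradient observable are hypothetical and do not reproduce the implementation of [1]. The key point is that cached values are only valid for the ansatz state at which they were measured, so the cache must be cleared whenever the parameters or the ansatz change.

```python
class PauliCache:
    """Caches Pauli expectation estimates for ONE fixed ansatz state."""

    def __init__(self, measure_fn):
        self._measure = measure_fn   # hypothetical backend call: str -> float
        self._store = {}
        self.hits = 0

    def expectation(self, pauli):
        if pauli in self._store:
            self.hits += 1           # reused, no new shots spent
        else:
            self._store[pauli] = self._measure(pauli)
        return self._store[pauli]

    def weighted_sum(self, observable):
        """Evaluate sum_i c_i <P_i> for an observable given as {pauli: coeff}."""
        return sum(c * self.expectation(p) for p, c in observable.items())

    def clear(self):
        """Call whenever the ansatz or its parameters change."""
        self._store.clear()

backend_calls = []

def fake_measure(pauli):
    """Stand-in for a shot-based estimate; records which strings hit the backend."""
    backend_calls.append(pauli)
    return {"ZZ": 0.9, "XX": -0.1, "ZI": 0.8, "YY": 0.05}[pauli]

hamiltonian = {"ZZ": 0.5, "XX": 0.2, "ZI": 0.1}
gradient_observable = {"XX": 0.4, "YY": -0.4, "ZI": 0.3}  # toy expansion of a commutator

cache = PauliCache(fake_measure)
energy = cache.weighted_sum(hamiltonian)             # measures ZZ, XX, ZI
gradient = cache.weighted_sum(gradient_observable)   # only YY is newly measured
print(backend_calls, cache.hits)
```

In this toy case two of the three gradient terms overlap with Hamiltonian terms, so the gradient evaluation triggers one new measurement instead of three.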

This protocol was tested on molecular systems from H₂ (4 qubits) to BeH₂ (14 qubits), and N₂H₄ with 16 qubits, demonstrating consistent shot reduction [1].

Protocol 2: Greedy Gradient-Free Adaptive VQE (GGA-VQE)

- Candidate Sampling: For each pool operator, measure energy at 2-5 different parameter angles [12]

- Curve Fitting: Analytically determine optimal angle for each candidate using trigonometric fitting [12]

- Operator Selection: Choose the operator with lowest minimum energy [12]

- Parameter Locking: Add selected operator with optimal angle without future re-optimization [12]

GGA-VQE dramatically reduces measurements to just 2-5 circuit executions per iteration, regardless of system size, while maintaining noise resilience [12].
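The curve-fitting step can be illustrated for the simplest case. The sketch below assumes each candidate is a rotation generated by a single Pauli string, exp(iθP), for which the energy as a function of the new angle has the form E(θ) = a + b cos 2θ + c sin 2θ, so three energy evaluations determine the whole curve and its minimum in closed form. Generators with richer spectra need more sample points, which is consistent with the 2-5 angles quoted above. The function names and the synthetic energy curves are hypothetical and stand in for shot-based energy estimates; this is not the implementation of [12].

```python
import math

def fit_single_angle(energy_fn):
    """Fit E(theta) = a + b*cos(2*theta) + c*sin(2*theta) from three samples
    and return (theta_min, E_min)."""
    e0 = energy_fn(0.0)                # a + b
    e_plus = energy_fn(math.pi / 4)    # a + c
    e_minus = energy_fn(-math.pi / 4)  # a - c
    a = 0.5 * (e_plus + e_minus)
    c = 0.5 * (e_plus - e_minus)
    b = e0 - a
    amplitude = math.hypot(b, c)
    phase = math.atan2(c, b)
    # E(theta) = a + amplitude * cos(2*theta - phase), minimal when the cosine is -1.
    theta_min = 0.5 * (phase + math.pi)
    return theta_min, a - amplitude

def select_operator(candidates):
    """Pick the candidate whose fitted one-parameter minimum is lowest."""
    fits = {name: fit_single_angle(fn) for name, fn in candidates.items()}
    best = min(fits, key=lambda name: fits[name][1])
    return best, fits[best]

# Synthetic energy landscapes for two candidates (both equal 0.8 at theta = 0,
# mimicking a shared current energy).
candidates = {
    "op_A": lambda t: 0.3 + 0.5 * math.cos(2 * t) - 0.2 * math.sin(2 * t),
    "op_B": lambda t: 0.7 + 0.1 * math.cos(2 * t) + 0.1 * math.sin(2 * t),
}
best_name, (best_theta, best_energy) = select_operator(candidates)
print(best_name, best_theta, best_energy)
```

The selected operator is then appended with its fitted angle and, following the parameter-locking step above, is not re-optimized later.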

Experimental Validation on Quantum Hardware

25-Qubit Ising Model Implementation: GGA-VQE was successfully executed on a 25-qubit trapped-ion quantum computer (IonQ's Aria system), representing a milestone for adaptive VQE methods on real hardware [12]. The implementation achieved over 98% fidelity compared to the true ground state, despite hardware noise, using only five observable measurements per iteration [12].

Molecular Simulation Studies: Numerical simulations demonstrate the effectiveness of shot-reduction strategies:

- Variance-based shot allocation applied to both Hamiltonian and gradient measurements achieved shot reductions of 6.71% (VMSA) and 43.21% (VPSR) for H₂, and 5.77% (VMSA) and 51.23% (VPSR) for LiH, relative to uniform shot distribution [1].

- CEO-ADAPT-VQE* reduced measurement costs by up to 99.6% compared to original ADAPT-VQE formulations while maintaining chemical accuracy [3].

Visualization of Measurement Workflows

Figure 1: Shot-Efficient ADAPT-VQE Workflow Integrating Commutativity Analysis and Measurement Reuse

Table 3: Essential Research Tools for ADAPT-VQE Implementation

| Tool/Resource | Type | Function/Purpose | Example Implementations |
|---|---|---|---|
| Operator Pools | Algorithmic Component | Provides candidate operators for ansatz construction | Fermionic GSD, Qubit Excitation, CEO Pool [3] |
| Commutativity Analyzers | Computational Tool | Identifies commuting operator groups for simultaneous measurement | Qubit-Wise Commutativity (QWC) Checkers [1] |
| Variance Estimators | Statistical Tool | Calculates term variances for optimal shot allocation | Hamiltonian Variance Analysis [1] |
| Quantum Simulators | Software Platform | Emulates quantum circuits for algorithm development | CUDA-Q, Qiskit, Perceval [22] [24] |
| Measurement Caches | Data Structure | Stores Pauli measurement outcomes for reuse | Pauli String Result Databases [1] |
| Hardware Backends | Quantum Hardware | Executes quantum circuits on physical processors | Superconducting QPUs, Photonic (Quandela), Trapped-Ion (IonQ) [22] [12] |

The measurement requirements in ADAPT-VQE are fundamentally intertwined with the design of the operator pool and the commutativity relationships between operators. The high shot overhead originates from the need to evaluate gradients for all pool operators during selection and to optimize parameters through iterative energy evaluations. Through strategic operator pool design—such as CEO pools that reduce measurement costs by up to 99.6%—and exploitation of commutativity via grouping and measurement reuse, significant reductions in shot requirements are achievable.

Advanced protocols like GGA-VQE that eliminate direct gradient measurements altogether offer an alternative pathway, demonstrating practical implementation on 25-qubit hardware with as few as 2-5 measurements per iteration. These developments address the core question of why ADAPT-VQE requires so many shots while providing actionable strategies for mitigating this bottleneck. As operator pool design and measurement strategies continue to evolve, the prospects for practical quantum advantage in chemical simulation grow increasingly promising.

The Adaptive Derivative-Assembled Problem-Tailored Variational Quantum Eigensolver (ADAPT-VQE) has emerged as a leading algorithm for molecular simulations on noisy intermediate-scale quantum (NISQ) devices, offering advantages over traditional variational approaches by systematically constructing more compact, problem-specific ansätze [1] [9]. However, a critical bottleneck threatens its practical implementation: a steep growth in the number of quantum measurements (shots) required for both operator selection and parameter optimization [1] [3]. This "shot crisis" originates from the fundamental process of mapping fermionic operators to Pauli strings, a necessary step for executing quantum chemistry problems on quantum hardware [25]. As molecular system size increases, this mapping produces a rapidly growing number of Pauli terms whose expectation values must be estimated, creating a resource barrier that currently prevents practical quantum advantage [1] [3].